Baoyue Reading Guide Summary

1. Keywords: Alibaba, Qwen2, Language Models, Math, Voice Chat

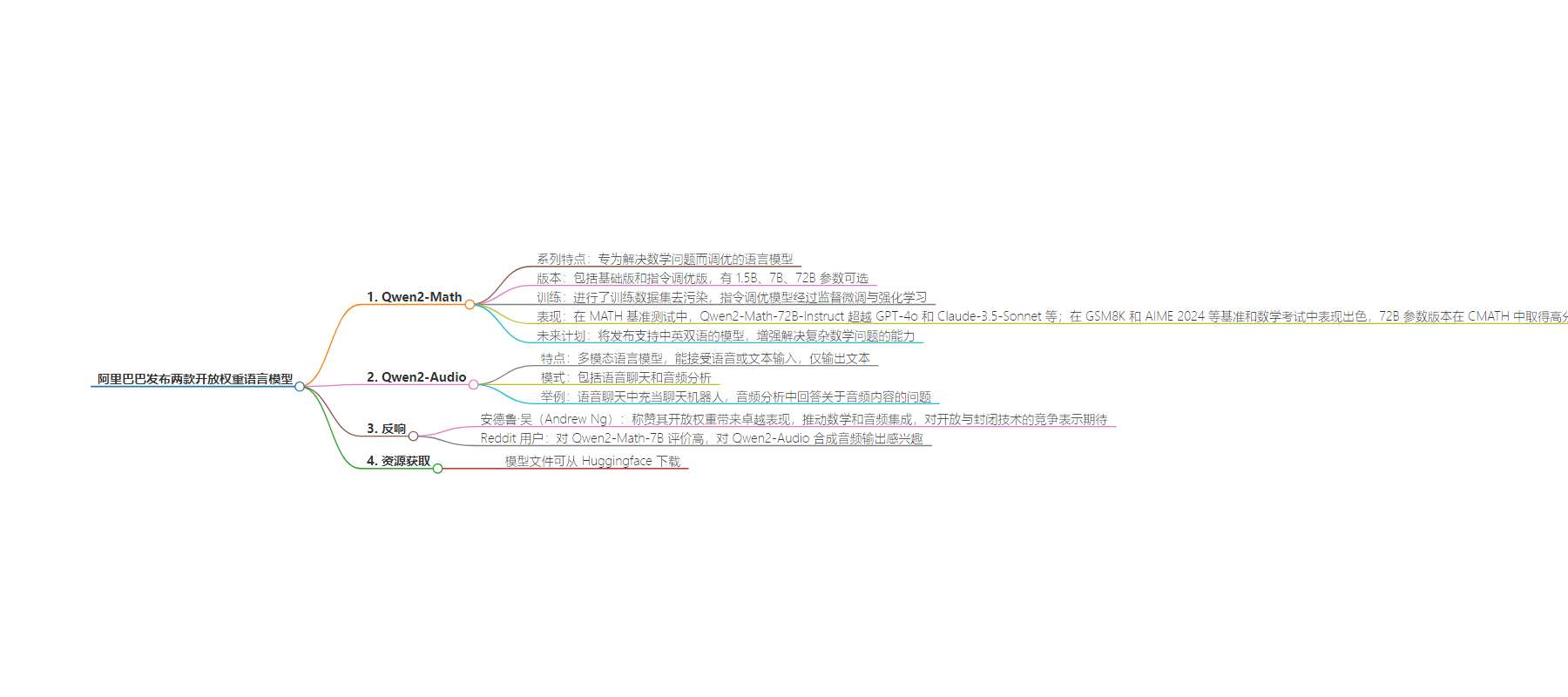

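2. Summary: Alibaba has released two open-weight language model families. Qwen2-Math is built for solving math problems, and Qwen2-Audio accepts voice or text input. Qwen2-Math performs strongly on math benchmarks, and bilingual and multilingual versions are planned. Both releases sparked online discussion, and the model files can be downloaded from Hugging Face.

3. Main content: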

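– Alibaba releases language models

  – Qwen2-Math: built for solving math problems. It comes in base and instruction-tuned versions at several parameter sizes and performs strongly on math benchmarks. Bilingual and multilingual models are planned, along with better handling of complex math problems.

  – Qwen2-Audio: multimodal. It accepts voice or text input but outputs only text, and it has two modes. A technical report has been published.

– Reception and discussion

  – Outperforms other models on math benchmarks.

  – Drew attention and discussion from Andrew Ng and Reddit users.

– Model availability

  – Model files can be downloaded from Hugging Face.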


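Source: infoq.com

Author: Anthony Alford

Published: 2024/9/3 0:00

Language: English

Word count: 509

Estimated reading time: 3 minutes

Rating: 91

Tags: language models, AI applications, Alibaba, Qwen2 series, math problem solving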

The original article follows:


Alibaba released two open-weight language model families: Qwen2-Math, a series of LLMs tuned for solving mathematical problems; and Qwen2-Audio, a family of multi-modal LLMs that can accept voice or text input. Both families are based on Alibaba’s Qwen2 LLM series, and all but the largest version of Qwen2-Math are available under the Apache 2.0 license.

Qwen2-Math is available in a base version and an instruction-tuned version, each with a choice of 1.5B, 7B, or 72B parameters. Because most benchmark datasets are available on the internet, Alibaba conducted decontamination on their training datasets to remove mathematical problem-solving benchmark examples. After pre-training, the instruction-tuned models were trained with both supervised fine-tuning and reinforcement learning. On the popular MATH benchmark, the largest model, Qwen2-Math-72B-Instruct, outperformed state-of-the-art commercial models including GPT-4o and Claude-3.5-Sonnet. According to Alibaba,

Given the current limitation of English-only support, we plan to release bilingual models that support both English and Chinese shortly, with the development of multilingual models also in the pipeline. Moreover, we will continue to enhance our models’ ability to solve complex and challenging mathematical problems.
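The article does not explain how Alibaba decontaminated its training data. The snippet below is a minimal sketch of one common approach, offered as an assumption rather than as Alibaba's pipeline. It drops any training document that exactly matches a benchmark item after normalization, or that shares a word-level n-gram with one. The function names, the default `n = 13`, and the toy strings are all illustrative.

```python
import re


def normalize(text: str) -> str:
    """Lowercase the text and keep only word characters, joined by single spaces."""
    return " ".join(re.findall(r"\w+", text.lower()))


def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Return the set of word-level n-grams of the normalized text (empty if too short)."""
    tokens = normalize(text).split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def decontaminate(train_docs: list[str], benchmark_items: list[str], n: int = 13) -> list[str]:
    """Keep only training documents with no exact or n-gram overlap with any benchmark item."""
    bench_exact = {normalize(item) for item in benchmark_items}
    bench_grams: set[tuple[str, ...]] = set()
    for item in benchmark_items:
        bench_grams |= ngrams(item, n)

    kept = []
    for doc in train_docs:
        if normalize(doc) in bench_exact:
            continue  # identical to a test item after normalization
        if not ngrams(doc, n).isdisjoint(bench_grams):
            continue  # shares at least one n-gram with a test item
        kept.append(doc)
    return kept


# Toy example with n = 5 so the overlap is easy to see (assumed data).
train_docs = [
    "What is 2 plus 2? The answer is 4.",
    "Worked example: a farmer has 17 sheep and buys 5 more. Answer: 22.",
    "Prove that the square root of two is irrational.",
]
benchmark = [
    "what is 2 plus 2? the answer is 4",
    "A farmer has 17 sheep and buys 5 more. How many sheep does the farmer have now?",
]
clean = decontaminate(train_docs, benchmark, n=5)
print(clean)  # → ['Prove that the square root of two is irrational.']
```

The first document is caught by the exact-match check. The second is caught because it shares the 5-gram "a farmer has 17 sheep" with a benchmark question. Larger values of `n` remove fewer documents but are less likely to flag unrelated text by accident.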

Besides MATH, Alibaba evaluated Qwen2-Math on benchmarks and mathematics exams, such as GSM8K and AIME 2024. They found that Qwen2-Math-Instruct had better performance than other baseline models of comparable size, “particularly in the 1.5B and 7B models.” The 72B parameter version achieved a score of 86.4 on the Chinese-language math exam benchmark CMATH, which Alibaba claims is a new high score. They also claim that it outperformed Claude, GPT-4, and Gemini on the AIME 2024 exam.

Alibaba published a technical report with more details on Qwen2-Audio. The model accepts both text and audio input, but can only output text. Depending on the type of audio input provided, the model can operate in two modes, Voice Chat or Audio Analysis. In Voice Chat mode, the input is a user’s speech audio, and the model acts as a chatbot. In Audio Analysis mode, the model can answer questions about the content of audio input. For example, given a clip of music, the model can identify the tempo and key of the song.
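The two modes differ in what accompanies the audio. In Voice Chat the speech is the whole request, and in Audio Analysis a question is asked about the clip. The snippet below is a minimal sketch of that distinction. It assumes the mode can be read from whether a text question is attached. The message layout (`role`, `content`, `"audio"` and `"text"` parts), the `Mode` enum, and `build_user_turn` are hypothetical, so check the Qwen2-Audio model card for the real input schema.

```python
from enum import Enum
from typing import Any, Optional


class Mode(Enum):
    VOICE_CHAT = "voice_chat"          # the user's speech is itself the request
    AUDIO_ANALYSIS = "audio_analysis"  # a text question asks about the audio clip


def build_user_turn(audio_path: str, question: Optional[str] = None) -> tuple[Mode, dict[str, Any]]:
    """Build one user message pairing an audio clip with an optional text question.

    The message layout here is an assumed, hypothetical format, not a confirmed schema.
    """
    content: list[dict[str, str]] = [{"type": "audio", "audio_url": audio_path}]
    if question is None:
        mode = Mode.VOICE_CHAT
    else:
        mode = Mode.AUDIO_ANALYSIS
        content.append({"type": "text", "text": question})
    return mode, {"role": "user", "content": content}


# Audio Analysis: ask about a music clip (file names are placeholders).
analysis_mode, analysis_msg = build_user_turn("song.wav", "What are the tempo and key of this song?")
# Voice Chat: the spoken question inside the clip is the whole request.
chat_mode, chat_msg = build_user_turn("spoken_question.wav")
print(analysis_mode, chat_mode)
```

In either mode, the model's reply would be plain text, because Qwen2-Audio accepts audio but does not generate it.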

Andrew Ng’s newsletter The Batch covered Alibaba’s release, saying:

Qwen2 delivered extraordinary performance with open weights, putting Alibaba on the map of [LLMs]. These specialized additions to the family push forward math performance and audio integration in AI while delivering state-of-the-art models into the hands of more developers. It’s thrilling to see models with open weights that outperform proprietary models. The white-hot competition between open and closed technology is good for everyone!

Users on Reddit discussed both model series. One user described Qwen2-Math-7B as “punching really high and hard for its size.” Another user said of Qwen2-Audio:

It would be very interesting to try to synthesize audio output using this model. The audio encoder is almost identical to WhisperSpeech one. Although Qwen2 is using Whisper-large-v3 which would probably require retraining of the WhisperSpeech acoustic model. If successful, that would be basically equivalent to GPT4o advanced voice mode running locally.

The model files for Qwen2-Math and Qwen2-Audio can be downloaded from Hugging Face.
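The article describes the Qwen2-Math lineup as three sizes, each with a base and an instruction-tuned variant, all but the largest under Apache 2.0. The snippet below is a minimal sketch that lists candidate repository IDs for that lineup. The `Qwen/Qwen2-Math-<size>[-Instruct]` naming pattern is an assumption to verify on Hugging Face, and `qwen2_math_repos` is a hypothetical helper.

```python
SIZES = ("1.5B", "7B", "72B")


def qwen2_math_repos() -> dict[str, str]:
    """Map each assumed Qwen2-Math repository ID to its license, per the article."""
    repos: dict[str, str] = {}
    for size in SIZES:
        for suffix in ("", "-Instruct"):  # base and instruction-tuned variants
            repo_id = f"Qwen/Qwen2-Math-{size}{suffix}"
            # The article says all but the largest model are Apache 2.0 licensed.
            repos[repo_id] = "Apache-2.0" if size != "72B" else "other (see model card)"
    return repos


for repo_id, license_name in qwen2_math_repos().items():
    print(f"{repo_id:32} {license_name}")
```

Each ID could then be passed to `huggingface_hub.snapshot_download(repo_id=...)` to fetch the weights, once the ID is confirmed on the hub.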