Baoyue Reading Guide Summary

1. Keywords:

– Dynamic Scaling

– Shifting Right

– Runtime Automation

– Workload Rightsizing

– Cloud Infrastructure

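2. Summary:

This article examines the “shifting right” strategy in software development. It argues that for concerns such as dynamic scaling and workload rightsizing, defining certain settings at runtime works better than fixing them early in development. It covers the benefits, a real-world example, and how to implement the approach, and stresses that doing so optimizes resource utilization, saves costs, and improves performance.

3. Main points:

– Software development often emphasizes “shift left”, but “shifting right” pays off in some scenarios

– Introduces “shifting right”: defining specific settings at runtime

– Dynamic scaling is well suited to runtime configuration

– Because usage varies from day to day, static configurations easily lead to inefficient resource utilization

– An example shows that adjusting resources dynamically through runtime automation improves efficiency and lowers costs

– Implementing runtime automation

– Set guardrails within which the system runs autonomously; this does not conflict with IaC principles but complements them

– Avoid configuration mistakes; use dynamic instance sizing to optimize resource utilization and cost efficiency

– Workload rightsizing works best at runtime

– Adjust resource requests and limits based on actual usage; newer Kubernetes versions address related limitations

– Monitoring tools can generate suitable resource settings; different environments have different requirements

– Conclusion

– Runtime automation saves costs and improves performance, optimizing resource utilization and making cloud infrastructure more resilient and cost-effective; a case example is given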

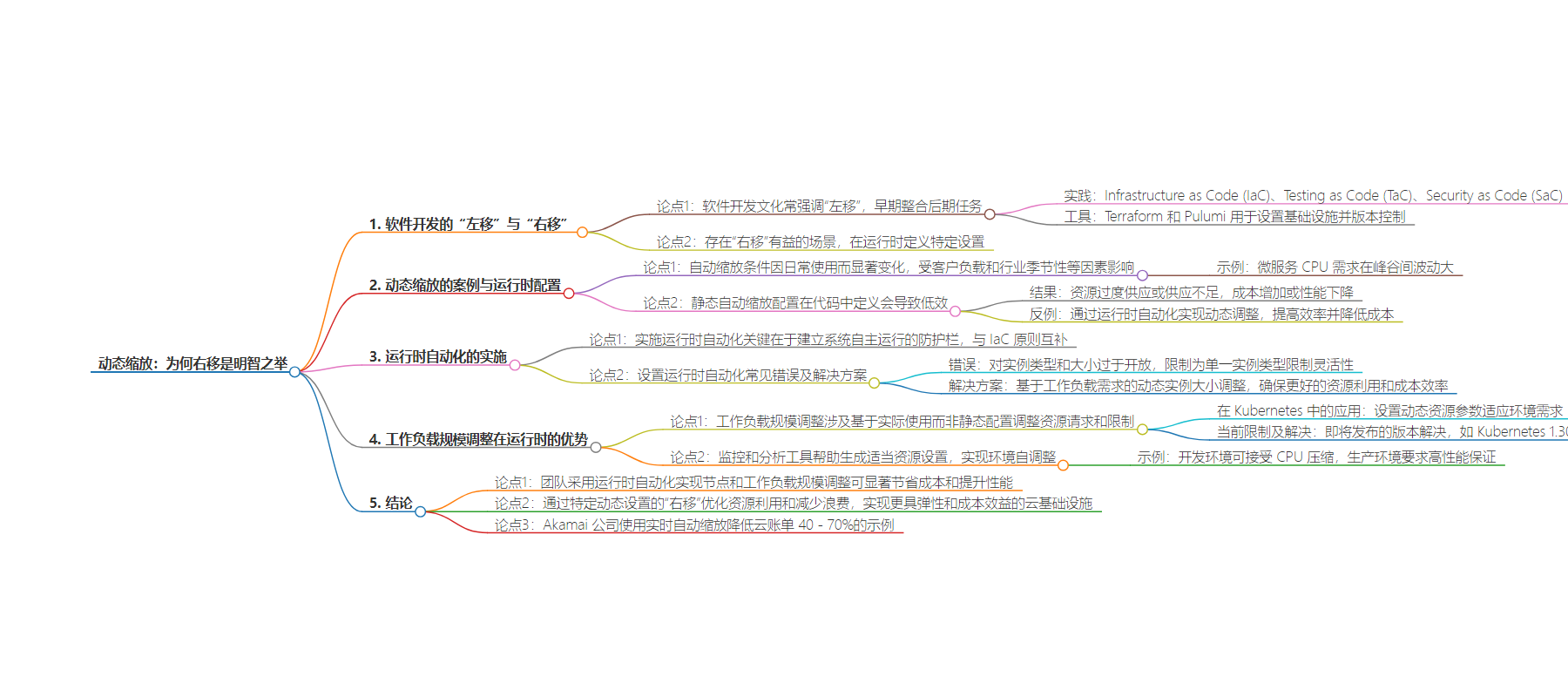

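Mind map:

Article URL: https://thenewstack.io/dynamic-scaling-why-shifting-right-is-the-smart-approach/

Source: thenewstack.io

Author: Phil Andrews

Published: 2024/8/21 15:29

Language: English

Word count: 611

Estimated reading time: 3 minutes

Score: 88

Tags: dynamic scaling, runtime configuration, cloud efficiency, Kubernetes, autoscaling

The original article follows: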


The software development culture often emphasizes the “shift left” approach, where tasks traditionally performed later in the development lifecycle are integrated early. This includes practices like Infrastructure as Code (IaC), Testing as Code (TaC), and Security as Code (SaC). Tools such as Terraform and Pulumi define infrastructure as code, which is then stored and versioned in source control.

However, there are scenarios where “shifting right” is beneficial. Shifting right involves defining specific settings at runtime rather than during the initial stages of development.

This approach is particularly advantageous for dynamic aspects of a system, such as autoscaling conditions. Testing in production may be a cardinal sin, but setting autoscaling parameters at runtime is practical and often necessary.

Keep reading to explore two compelling cases for shifting right: dynamic scaling and workload rightsizing.

Dynamic Scaling: A Case for Runtime Configuration

Autoscaling conditions vary significantly based on day-to-day usage, influenced by factors like customer load and industry seasonality. For instance, a microservice’s CPU requirements might fluctuate by as much as 1,000 CPUs between peaks and valleys.

Static autoscaling configurations defined in code can lead to inefficiencies. The system either overprovisions or underprovisions resources, leading to increased costs or degraded performance.
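To make the over/underprovisioning trade-off concrete, here is a minimal sketch comparing capacity fixed in code at the historical peak with capacity recomputed every hour from observed demand. The demand series, the 20% headroom, and all function names are hypothetical, chosen only to mirror the roughly 1,000-CPU swing described above.

```python
import math

# Hypothetical hourly CPU demand for one microservice, in CPUs (illustrative only).
# It swings by roughly 1,000 CPUs between the quietest and busiest hours.
DEMAND = [200, 180, 150, 300, 900, 1200, 1100, 600]


def static_capacity(demand: list[int]) -> list[int]:
    """Capacity fixed in code at the historical peak: never short, often idle."""
    peak = max(demand)
    return [peak] * len(demand)


def runtime_capacity(demand: list[int], headroom_pct: int = 20) -> list[int]:
    """Capacity recomputed each hour from observed demand plus a safety margin."""
    return [math.ceil(d * (100 + headroom_pct) / 100) for d in demand]


def cpu_hours(capacity: list[int]) -> int:
    """Total CPU-hours reserved over the period (one entry per hour)."""
    return sum(capacity)


if __name__ == "__main__":
    print("static CPU-hours: ", cpu_hours(static_capacity(DEMAND)))
    print("runtime CPU-hours:", cpu_hours(runtime_capacity(DEMAND)))
```

With these made-up numbers the static configuration reserves 9,600 CPU-hours against 5,556 for the runtime-adjusted one. A static value set below the peak would instead run short during busy hours. A real autoscaler also has to absorb provisioning lag, which this sketch ignores.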

In a real-world example, a cluster with over 8,000 pods and 600 unique workloads experienced CPU utilization ranging from 13% during low usage to 26% during peak times. By implementing runtime automation for autoscaling, the cluster could dynamically adjust resources, significantly improving efficiency and reducing costs.
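A quick back-of-the-envelope reading of those figures, assuming the utilization percentages are measured against the CPU capacity the cluster had provisioned:

```latex
\text{idle share} = 1 - u
\qquad
u_{\text{low}} = 0.13 \Rightarrow 1 - 0.13 = 0.87
\qquad
u_{\text{peak}} = 0.26 \Rightarrow 1 - 0.26 = 0.74
```

Under that assumption, roughly 87% of the provisioned CPU sat idle at quiet times and about 74% even at peak. That slack is what runtime automation aims to reclaim.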

Implementing Runtime Automation

To effectively implement runtime automation, it’s crucial to establish guardrails within which the system can operate autonomously. This approach doesn’t conflict with the principles of IaC but rather complements it by providing flexibility within defined boundaries.
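One way to picture guardrails is as bounds that live in version control next to the IaC, with automation free to pick any value inside them at runtime. The sketch below is a minimal illustration under that assumption. `Guardrails` and `apply_guardrails` are hypothetical names and do not come from any specific tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Guardrails:
    """Hypothetical bounds kept in version control alongside the team's IaC."""
    min_replicas: int
    max_replicas: int

    def __post_init__(self) -> None:
        if not 0 < self.min_replicas <= self.max_replicas:
            raise ValueError("require 0 < min_replicas <= max_replicas")


def apply_guardrails(recommended: int, rails: Guardrails) -> int:
    """Clamp a value computed at runtime into the range approved in code."""
    return max(rails.min_replicas, min(recommended, rails.max_replicas))


if __name__ == "__main__":
    rails = Guardrails(min_replicas=2, max_replicas=50)
    for recommendation in (1, 20, 80):
        print(recommendation, "->", apply_guardrails(recommendation, rails))
```

Here recommendations of 1, 20 and 80 become 2, 20 and 50. The bounds still go through code review and the usual IaC pipeline, and only the value inside them is left to automation. The `minReplicas`/`maxReplicas` fields of Kubernetes' HorizontalPodAutoscaler follow the same split.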

A common mistake when setting up runtime automation is being too restrictive with instance types and sizes.

For example, restricting to a single instance type (the “node group” mentality) can limit flexibility. AWS alone offers over 800 instance types, many of which share similar CPU and memory characteristics but differ in additional features.

The solution? Dynamic instance sizing based on workload requirements ensures better resource utilization and cost efficiency.
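The following minimal sketch shows why a wider candidate set helps. It picks the cheapest instance type whose CPU and memory cover a requirement and compares the result with a single pre-chosen type. The catalog, including every name, shape and price, is invented for illustration and is not real AWS data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    memory_gib: int
    hourly_price: float  # hypothetical price, not a real cloud quote


# Hypothetical catalog: names, shapes and prices are invented for illustration.
CATALOG = [
    InstanceType("type-a.large", 2, 8, 0.10),
    InstanceType("type-b.large", 2, 8, 0.09),
    InstanceType("type-c.xlarge", 4, 16, 0.19),
    InstanceType("type-d.2xlarge", 8, 32, 0.36),
]


def cheapest_fit(cpu: float, memory_gib: float,
                 catalog: list[InstanceType]) -> Optional[InstanceType]:
    """Pick the cheapest instance type whose CPU and memory cover the request."""
    fits = [t for t in catalog if t.vcpus >= cpu and t.memory_gib >= memory_gib]
    return min(fits, key=lambda t: t.hourly_price, default=None)


if __name__ == "__main__":
    # Flexible selection across the whole catalog.
    print(cheapest_fit(3, 12, CATALOG))
    # "Node group" mentality: only one pre-chosen type is allowed.
    print(cheapest_fit(3, 12, [CATALOG[3]]))
```

For a 3-vCPU, 12 GiB requirement the open catalog selects `type-c.xlarge` at 0.19 per hour, while the single-type setup is stuck with `type-d.2xlarge` at 0.36. Real node autoscalers bin-pack many pods per node and also weigh availability and pricing models. This sketch shows only the effect of widening the choice.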

Workload Rightsizing Works Best at Runtime, Too

Workload rightsizing involves adjusting resource requests and limits based on actual usage rather than static configurations. In Kubernetes, this means setting dynamic resource parameters that adapt to the environment’s needs.
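As an illustration of deriving requests and limits from observed usage, here is a minimal sketch. It assumes one hypothetical policy: a CPU request at the 95th percentile plus 15% headroom, a memory request and limit at peak usage plus the same headroom, and no CPU limit. This is a common pattern, not the only valid one, and the function names are illustrative rather than taken from any tool. The output uses Kubernetes quantity units (`m` for millicores, `Mi` for mebibytes).

```python
import math


def nearest_rank(samples: list[int], pct: int) -> int:
    """Nearest-rank percentile of observed usage samples (pct in 0..100)."""
    if not samples:
        raise ValueError("need at least one usage sample")
    ordered = sorted(samples)
    rank = -(-(pct * len(ordered)) // 100)  # integer ceil(pct/100 * n)
    return ordered[max(rank, 1) - 1]


def recommend_resources(cpu_millicores: list[int], memory_mib: list[int],
                        headroom_pct: int = 15) -> dict:
    """Build a Kubernetes-style resources block from observed usage.

    CPU request: p95 of usage plus headroom (CPU is compressible).
    Memory request and limit: peak usage plus headroom (memory is not
    compressible, so running short can get the container OOM-killed).
    """
    cpu = math.ceil(nearest_rank(cpu_millicores, 95) * (100 + headroom_pct) / 100)
    mem = math.ceil(max(memory_mib) * (100 + headroom_pct) / 100)
    return {
        "requests": {"cpu": f"{cpu}m", "memory": f"{mem}Mi"},
        "limits": {"memory": f"{mem}Mi"},
    }


if __name__ == "__main__":
    cpu_samples = list(range(100, 300, 10))  # 20 hypothetical CPU readings, millicores
    mem_samples = [200, 220, 256, 240]        # hypothetical memory readings, MiB
    print(recommend_resources(cpu_samples, mem_samples))
```

With these samples the sketch requests `322m` CPU and `295Mi` memory. Omitting the CPU limit lets the container use idle CPU on its node rather than being throttled at a fixed quota. Whether that is acceptable is a policy decision.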

Current limitations, such as having to restart workloads before new resource settings take effect, are being addressed in newer Kubernetes versions (e.g., Kubernetes 1.30) through in-place pod resource resizing.

Tools that monitor and analyze real CPU and memory usage can help generate appropriate resource settings. They let environments self-tune, balancing performance against cost. For example, a development environment can accept some CPU contention to reduce costs (CPU is a compressible resource, so a starved workload slows down rather than being terminated), while production environments require stronger performance guarantees.
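The dev/production difference can be expressed as per-environment policies applied to the same usage data. In this minimal sketch the percentiles and headroom values (dev: p50 with no headroom, prod: p99 with 25%) are hypothetical choices, and `EnvPolicy` and `cpu_request_millicores` are illustrative names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvPolicy:
    cpu_percentile: int  # usage percentile the CPU request should cover
    headroom_pct: int    # extra margin on top of that percentile


# Hypothetical policies: dev accepts CPU contention to save money,
# prod reserves enough for near-peak usage plus a safety margin.
POLICIES = {
    "dev": EnvPolicy(cpu_percentile=50, headroom_pct=0),
    "prod": EnvPolicy(cpu_percentile=99, headroom_pct=25),
}


def nearest_rank(samples: list[int], pct: int) -> int:
    """Nearest-rank percentile (pct in 0..100) of observed usage samples."""
    if not samples:
        raise ValueError("need at least one usage sample")
    ordered = sorted(samples)
    rank = -(-(pct * len(ordered)) // 100)  # integer ceil(pct/100 * n)
    return ordered[max(rank, 1) - 1]


def cpu_request_millicores(samples: list[int], env: str) -> int:
    """CPU request for one environment, derived from the same usage data."""
    policy = POLICIES[env]
    base = nearest_rank(samples, policy.cpu_percentile)
    return -(-(base * (100 + policy.headroom_pct)) // 100)  # integer ceil


if __name__ == "__main__":
    samples = list(range(100, 300, 10))  # 20 hypothetical readings, millicores
    for env in ("dev", "prod"):
        print(env, cpu_request_millicores(samples, env))
```

From identical readings, dev gets a 190-millicore request and prod gets 363 millicores. Only the policy differs, so the environments stay consistent while each one tunes itself to its own tolerance for contention.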

Conclusion

Teams adopting runtime automation for node and workload sizing can achieve significant cost savings and performance improvements. By shifting right for certain dynamic settings, they can leverage real-time adjustments to optimize resource utilization and reduce waste.

Overall, the compounded gains from adjusting both logical resources (workload requests and limits) and physical resources (nodes) lead to a more resilient and cost-effective cloud infrastructure.

How does this type of automation work in practice?

The graph below shows an autoscaler scaling cloud resources up and down in line with real-time demand while leaving enough headroom to meet the application’s requirements.
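Kubernetes' HorizontalPodAutoscaler documents its core rule as desiredReplicas = ceil[currentReplicas × (currentMetricValue / desiredMetricValue)]. Setting the target below 100% is what leaves headroom. The sketch below reimplements only that formula with hypothetical readings. It is not the autoscaler used in the case study, and it omits the HPA's tolerance band and scale-down stabilization window.

```python
def desired_replicas(current_replicas: int, current_util_pct: int,
                     target_util_pct: int) -> int:
    """HPA-style rule: ceil(current * currentMetric / targetMetric), at least 1."""
    return max(1, -(-(current_replicas * current_util_pct) // target_util_pct))


if __name__ == "__main__":
    target = 70  # aim for 70% CPU, leaving about 30% headroom (illustrative value)
    replicas = 10
    for observed in (90, 95, 60, 35):  # hypothetical average CPU utilization, %
        replicas = desired_replicas(replicas, observed, target)
        print(f"observed {observed}% -> {replicas} replicas")
```

The replica count goes 13, 18, 16, then 8, tracking demand up and back down while keeping average utilization near the 70% target.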

This example comes from Akamai, one of the world’s largest and most trusted cloud delivery platforms. The company used real-time autoscaling to reduce its cloud bill by 40-70%, depending on the workload. Check out the complete case study to learn more.
