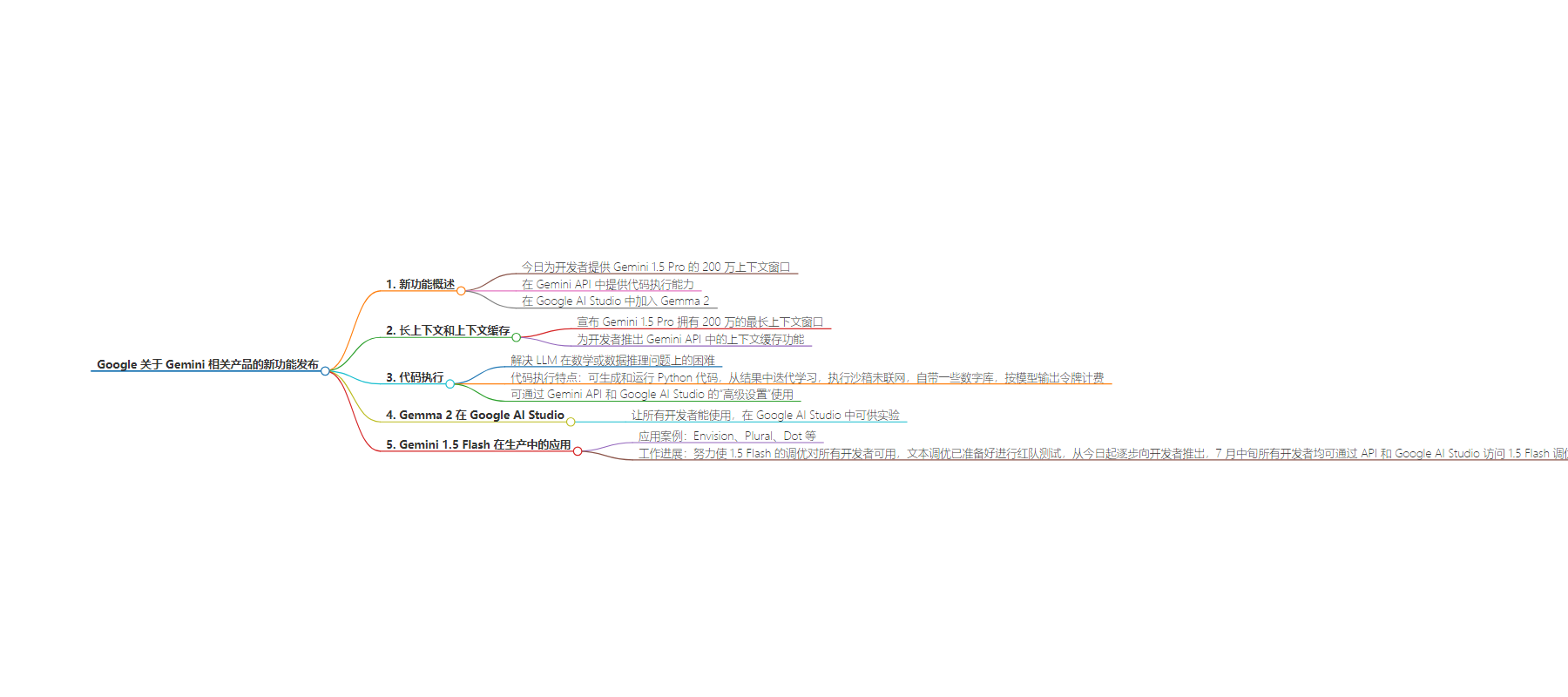

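Baoyue Reading Summary

1. Keywords: Gemini 1.5 Pro, Code Execution, Gemma 2, Context Window, Google AI Studio

2. Summary: The 2 million token context window for Gemini 1.5 Pro, code execution, and Gemma 2 are available starting today. The article covers long context and context caching, the code execution feature, and Gemma 2 in Google AI Studio. It also describes how Gemini 1.5 Flash is being used in production and related progress.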

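3. Main points:

- Gemini 1.5 Pro
  - The 2 million token context window is open to all developers
  - Context caching is launched in the Gemini API to reduce costs
- Code execution
  - Helps solve math and data reasoning problems
  - The model can generate and execute Python code; the execution sandbox has no internet access, and developers are billed based on output tokens
  - Available via the Gemini API and under "advanced settings" in Google AI Studio
- Gemma 2
  - Available in Google AI Studio for developers to experiment with
- Gemini 1.5 Flash
  - Meets developers' needs for speed and affordability
  - Used in several real-world applications
  - Text tuning is ready for red-teaming; all developers will be able to access tuning via the API and Google AI Studio by mid-July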


Article URL: https://developers.googleblog.com/en/new-features-for-the-gemini-api-and-google-ai-studio/

Source: developers.googleblog.com

Authors: Logan Kilpatrick, Shrestha Basu Mallick, Ronen Kofman

Published: 2024/6/27 0:00

Language: English

Word count: 629

Estimated reading time: 3 minutes

Rating: 86

Tags: Gemini API, long context window, code execution, Gemma 2, Google AI Studio

The original article follows.


Today, we are giving developers access to the 2 million token context window for Gemini 1.5 Pro and to code execution capabilities in the Gemini API, and we are adding Gemma 2 to Google AI Studio.

Long context and context caching

At I/O, we announced the longest context window ever, 2 million tokens in Gemini 1.5 Pro, available behind a waitlist. Today, we're opening up access to the 2 million token context window on Gemini 1.5 Pro for all developers.
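To give a feel for what a 2 million token budget holds, here is a rough sketch that checks whether a set of documents would fit in the window. It assumes a heuristic of about 4 characters per token for English text and a hypothetical 8,192-token reserve for the model's output; neither figure comes from the post, and the real Gemini tokenizer will count differently, so treat this as a back-of-the-envelope estimate only.

```python
# Rough budget check against the 2 million token window of Gemini 1.5 Pro.
# ASSUMPTION: ~4 characters per token, a common heuristic for English text;
# the actual Gemini tokenizer will produce different counts.
CONTEXT_WINDOW_TOKENS = 2_000_000
CHARS_PER_TOKEN = 4  # hypothetical heuristic, not an official figure


def estimate_tokens(text: str) -> int:
    """Estimate tokens as characters / CHARS_PER_TOKEN, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_window(documents: list[str], reserved_for_output: int = 8_192) -> bool:
    """True if the estimated prompt plus a (hypothetical) output reserve fits."""
    total = sum(estimate_tokens(doc) for doc in documents)
    return total + reserved_for_output <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    corpus = ["x" * 4_000_000, "y" * 3_000_000]  # about 1.75M estimated tokens
    print(fits_in_window(corpus))
```

For real workloads, the token counts reported by the API itself are a better basis than a character heuristic.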

As the context window grows, so does the potential input cost. To help developers reduce costs for tasks that use the same tokens across multiple prompts, we've launched context caching in the Gemini API for both Gemini 1.5 Pro and 1.5 Flash.
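Why caching helps can be shown with simple arithmetic. The sketch compares resending a large shared prefix on every request with billing that prefix at a discounted cached rate plus a storage charge. All prices in `Pricing` are hypothetical placeholders, and the cost model (a cached-token rate plus per-hour storage, no one-off creation charge) is a simplifying assumption rather than the published Gemini billing scheme.

```python
from dataclasses import dataclass


@dataclass
class Pricing:
    """HYPOTHETICAL USD prices per million tokens; replace with published rates."""
    input_per_m: float         # regular (uncached) input tokens
    cached_input_per_m: float  # cached tokens reused in a prompt
    storage_per_m_hour: float  # keeping tokens in the cache, per hour


def cost_without_cache(p: Pricing, shared: int, unique: int, requests: int) -> float:
    """Every request resends the shared prefix at the full input price."""
    return requests * (shared + unique) * p.input_per_m / 1e6


def cost_with_cache(p: Pricing, shared: int, unique: int,
                    requests: int, hours: float) -> float:
    """Shared prefix billed at the cached rate on each request, plus storage time.

    Simplification: ignores any one-off charge for creating the cache.
    """
    per_request = (shared * p.cached_input_per_m + unique * p.input_per_m) / 1e6
    storage = shared * p.storage_per_m_hour * hours / 1e6
    return requests * per_request + storage


if __name__ == "__main__":
    prices = Pricing(input_per_m=1.0, cached_input_per_m=0.25, storage_per_m_hour=1.0)
    # A 1M-token document queried 100 times with 1K-token questions over one hour.
    print(cost_without_cache(prices, 1_000_000, 1_000, 100))
    print(cost_with_cache(prices, 1_000_000, 1_000, 100, hours=1.0))
```

With these made-up numbers the cached path costs roughly a quarter of the uncached one; the real break-even point depends on the actual discount, the storage rate, and how many requests reuse the prefix.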

Code execution

LLMs have historically struggled with math or data reasoning problems. Generating and executing code that can reason through such problems helps with accuracy. To unlock these capabilities for developers, we have enabled code execution for both Gemini 1.5 Pro and 1.5 Flash. Once turned on, the code-execution feature can be dynamically leveraged by the model to generate and run Python code and learn iteratively from the results until it gets to a desired final output. The execution sandbox is not connected to the internet, comes standard with a few numerical libraries, and developers are simply billed based on the output tokens from the model.

This is our first step forward with code execution as a model capability and it’s available today via the Gemini API and in Google AI Studio under “advanced settings”.
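The loop described above (generate code, run it, observe the result, repeat until a final answer) can be illustrated without calling Gemini at all. In this sketch, `fake_model` is a hypothetical stand-in for the LLM, and `exec` in the current interpreter is used purely for illustration. It is not a sandbox, and this is not how the Gemini API implements code execution.

```python
import contextlib
import io


def run_python(code: str) -> str:
    """Execute a code string and capture what it prints.

    NOTE: exec() is NOT a sandbox; a real system would isolate execution
    (for example, no network access and a fixed set of libraries).
    """
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, {})  # fresh globals for each run
    except Exception as exc:  # errors are fed back to the model as text
        return f"Error: {exc!r}"
    return buffer.getvalue()


def fake_model(history: list[str]) -> tuple[str, str]:
    """HYPOTHETICAL stand-in for the LLM: returns ("code", src) or ("answer", text)."""
    if not any(entry.startswith("Output:") for entry in history):
        return "code", "print(sum(i * i for i in range(1, 101)))"
    last_output = history[-1].removeprefix("Output:").strip()
    return "answer", f"The sum of squares from 1 to 100 is {last_output}."


def solve(question: str, max_steps: int = 5) -> str:
    """Alternate between generating code and observing its output."""
    history = [f"Question: {question}"]
    for _ in range(max_steps):
        kind, content = fake_model(history)
        if kind == "answer":
            return content
        history.append(f"Code: {content}")
        history.append(f"Output: {run_python(content)}")
    return "No answer within the step limit."


if __name__ == "__main__":
    print(solve("What is the sum of the squares of 1 through 100?"))
```

Capping the loop with `max_steps` keeps a model that never settles on an answer from running indefinitely.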

Gemma 2 in Google AI Studio

We want to make AI accessible to all developers, whether you’re looking to integrate our Gemini models via an API key or using our open models like Gemma 2. To help developers get hands-on with the Gemma 2 model, we’re making it available in Google AI Studio for experimentation.

Gemini 1.5 Flash in production

Gemini 1.5 Flash was built to address developers’ top request for speed and affordability. We continue to be excited by how developers are innovating with Gemini 1.5 Flash and using the model in production:

- Envision empowers people who are blind or have low vision to better understand their immediate environment through an app or smart glasses and ask specific questions. Leveraging the speed of Gemini 1.5 Flash, Envision's users are able to get real-time descriptions of their surroundings, which is critical to their experience navigating the world.

- Plural, an automated policy analysis and monitoring platform, uses Gemini 1.5 Flash to summarize and reason about complex legislative documents for NGOs and policy-interested citizens, so they can have an impact on how bills are passed.

- Dot, an AI designed to grow with a user and become increasingly personalized over time, leveraged Gemini 1.5 Flash for a number of information compression tasks that are key to their agentic long-term memory system. For Dot, 1.5 Flash performs similarly to more expensive models at under one-tenth the cost for tasks like summarization, filtering & re-ranking.

As announced last month, we're working hard to make tuning for Gemini 1.5 Flash available to all developers, enabling new use cases, greater production robustness, and higher reliability. Text tuning in 1.5 Flash is now ready for red-teaming and will be rolling out gradually to developers starting today. All developers will be able to access Gemini 1.5 Flash tuning via the Gemini API and in Google AI Studio by mid-July.

We are excited to see how you use these new features; you can join the conversation on our developer forum. If you're an enterprise developer, see how we're making Vertex AI the most enterprise-ready genAI platform.