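包阅 Reading Guide: Summary

1. Keywords: Generative AI, Runnable Markdown, VSCode, Docker, Developer Tools

2. Summary: This article explores using generative AI to create runnable Markdown. It describes the related Docker project work, including a VSCode extension, highlights how this helps developers, and touches on feedback loops and actionability.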

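3. Main points:

– Exploring the AI developer tools space
  – AI tools have the potential to assist across the entire software lifecycle
– Using generative AI to generate content
  – Generating tool-usage documentation for new projects
  – Combining expert prompts with project knowledge to improve the generated output
  – Tailoring prompts to the specifics of the project
– Runnable Markdown
  – Adding the ability to run code blocks in a VSCode extension
  – Treating Markdown files as scripts and binding them to keystrokes
  – Forming a feedback loop between the assistant, the generated content, and the developer
– Related resources
  – GitHub repository and install instructions
  – A demo and a subscription channel for more content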

Article URL: https://www.docker.com/blog/using-generative-ai-to-create-runnable-markdown/

Source: docker.com

Author: Docker Labs

Published: 2024/7/1 6:00

Language: English

Word count: 917

Estimated reading time: 4 minutes

Score: 88

Tags: AI/ML, Docker Labs GenAI Series, GenAI Stack

The original article follows.

Using Generative AI to Create Runnable Markdown

This ongoing GenAI Docker Labs series will explore the exciting space of AI developer tools. At Docker, we believe there is a vast scope to explore, openly and without the hype. We will share our explorations and collaborate with the developer community in real time. Although developers have adopted autocomplete tooling like GitHub Copilot and use chat, there is significant potential for AI tools to assist with more specific tasks and interfaces throughout the entire software lifecycle. Therefore, our exploration will be broad. We will be releasing things as open source so you can play, explore, and hack with us, too.

Generative AI (GenAI) is changing how we interact with tools. Today, we might experience this predominantly through the use of new AI-powered chat assistants, but there are other opportunities for generative AI to improve the life of a developer.

When developers start working on a new project, they need to get up to speed on the tools used in that project. A common practice is to document how those tools are used in a project README.md and to version that documentation along with the project.

Can we use generative AI to generate this content? We want this content to represent best practices for how tools should be used in general but, more importantly, how tools should be used in this particular project.

We can think of this as a kind of conversation between developers, agents representing tools used by a project, and the project itself. Let’s look at this for the Docker tool itself.

Generating Markdown in VSCode

For this project, we have written a VSCode extension that adds one new command called “Generate a runbook for this project.” Figure 1 shows it in action:

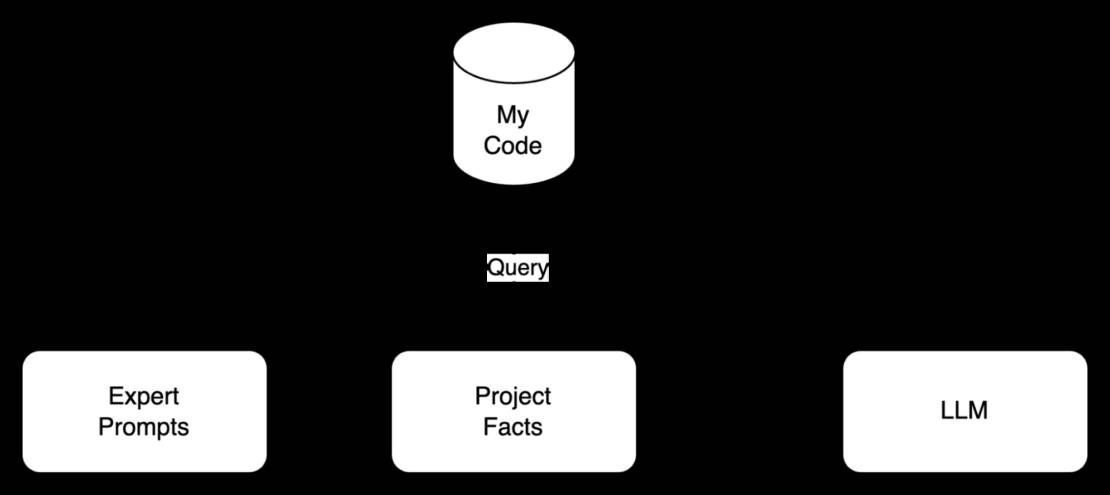

This approach combines prompts written by tool experts with knowledge about the project itself. This combined context improves the LLM’s ability to generate documentation (Figure 2).

Although we’re illustrating this idea on a tool that we know very well (Docker!), the idea of generating content in this manner is quite generic. The prompts we used for getting started with Docker build, run, and compose are available from GitHub. There is certainly an art to writing these prompts, but we think that tool experts have the right knowledge to create prompts of this kind, especially if AI assistants can then help them make their work easier to consume.

There is also an essential point here. If we think of the project as a database from which we can retrieve context, then we’re effectively giving an LLM the ability to retrieve facts about the project. This allows our prompts to depend on local context. For a Docker-specific example, we might want to prompt the AI to not talk about compose if the project has no compose.yaml files.

“I am not using Docker Compose in this project.”

That turns out to be a transformative user prompt if it’s true. This is what we’d normally learn through a conversation. However, there are certain project details that are always useful. This is why having our assistants right there in the local project can be so helpful.
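To make the idea of prompts that depend on local context concrete, here is a minimal Python sketch. It is not the extension's implementation: the function names `project_facts` and `build_prompt` are hypothetical, and for simplicity it only looks for Compose files in the project root, using the default file names Docker Compose recognizes.

```python
from pathlib import Path

# Default file names Docker Compose looks for in a project directory.
COMPOSE_FILENAMES = (
    "compose.yaml",
    "compose.yml",
    "docker-compose.yaml",
    "docker-compose.yml",
)


def project_facts(project_root: Path) -> list:
    """Return plain-language facts about the project (hypothetical helper)."""
    facts = []
    has_compose = any((project_root / name).is_file() for name in COMPOSE_FILENAMES)
    if not has_compose:
        # The user prompt quoted in the article, added only when it is true.
        facts.append("I am not using Docker Compose in this project.")
    return facts


def build_prompt(expert_prompt: str, project_root: Path) -> str:
    """Prepend facts retrieved from the project to a tool expert's prompt."""
    return "\n".join(project_facts(project_root) + [expert_prompt])
```

Calling `build_prompt("Explain how to build and run this project.", Path("."))` in a directory without a Compose file would put the "not using Docker Compose" statement ahead of the expert prompt. In a project that does have one, the expert prompt passes through unchanged.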

Runnable Markdown

Although Markdown files are mainly for reading, they often contain runnable things. LLMs converse with us in text that often contains code blocks that represent actual runnable commands. And, in VSCode, developers use the embedded terminal to run commands against the currently open project. Let’s short-circuit this interaction and make commands runnable directly from these Markdown runbooks.

In the current extension, we’ve added a code action to every code block that contains a shell command so that users can launch that command in the embedded terminal. During our exploration of this functionality, we have found that treating the Markdown file as a kind of REPL (read-eval-print loop) can help to refine the output from the LLM and improve the final content. Figure 3 shows what this looks like in action.
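As a rough illustration of the first step behind such a code action, the Python sketch below finds the fenced code blocks in a Markdown runbook that are tagged as shell. This is an assumption about how one might do it, not the extension's actual code: the `shell_blocks` name and the set of fence labels treated as shell are hypothetical, and the pattern only handles simple backtick fences.

```python
import re

# Built with string repetition so this example does not contain a literal fence.
FENCE_MARK = "`" * 3

# Fence labels treated as shell in this sketch (an assumption).
SHELL_LANGS = {"sh", "bash", "shell", "zsh", "console"}

# An opening fence with an optional label, the body, then a closing fence line.
FENCE = re.compile(
    r"^" + FENCE_MARK + r"(\w*)[ \t]*\n(.*?)^" + FENCE_MARK + r"[ \t]*$",
    re.MULTILINE | re.DOTALL,
)


def shell_blocks(markdown: str) -> list:
    """Return the body of every fenced code block labeled as shell."""
    return [
        body.strip()
        for lang, body in FENCE.findall(markdown)
        if lang.lower() in SHELL_LANGS
    ]
```

Each returned string is a candidate command that an editor integration could offer to send to the embedded terminal. Blocks with other labels, such as JSON or YAML, are ignored.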

Markdown extends your editor

In the long run, nobody is going to navigate to a Markdown file in order to run a command. However, we can treat these Markdown files as scripts that create commands for the developer’s edit session. We can even let developers bind them to keystrokes (e.g., type ,b to run the build code block from your project runbook).
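The keystroke idea can be sketched in Python as a lookup from a key sequence to a command in the runbook. Everything here is hypothetical: the article does not say how a binding finds its code block, so this sketch assumes each command sits under a heading such as "Build" and that a `KEYMAP` table maps key sequences to those headings.

```python
from typing import Optional

# Built with string repetition so this example does not contain a literal fence.
FENCE_MARK = "`" * 3

# Hypothetical bindings: key sequence -> runbook heading (lowercased).
KEYMAP = {",b": "build", ",r": "run"}


def commands_by_heading(markdown: str) -> dict:
    """Map each heading (lowercased) to the first code block beneath it."""
    commands = {}
    heading = None
    block = None  # None means we are not inside a code block
    for line in markdown.splitlines():
        if line.startswith(FENCE_MARK):
            if block is None:
                block = []  # opening fence
            else:
                # Closing fence: keep only the first block under a heading.
                if heading is not None and heading not in commands:
                    commands[heading] = "\n".join(block)
                block = None
        elif block is not None:
            block.append(line)  # inside a block, "#" lines are shell comments
        elif line.startswith("#"):
            heading = line.lstrip("#").strip().lower()
    return commands


def resolve(keys: str, markdown: str) -> Optional[str]:
    """Return the runbook command bound to a key sequence, if any."""
    heading = KEYMAP.get(keys)
    if heading is None:
        return None
    return commands_by_heading(markdown).get(heading)
```

Because the command is read from the runbook each time, editing the Markdown changes what the shortcut runs, which is the sense in which the file acts as a script for the edit session.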

In the end, this is just the AI Assistant talking to itself. The Assistant recommends a command. We find the command useful. We turn it into a shortcut. The Assistant remembers this shortcut because it’s in our runbook, and then makes it available whenever we’re developing this project.

Figure 4 shows a real feedback loop between the Assistant, the generated content, and the developer who is actually running these commands.

As developers, we tend to vote with our keyboards. If this command is useful, let’s make it really easy to run! And if it’s useful for me, it might be useful for other members of my team, too.

The GitHub repository and install instructions are ready for you to try today.

For more, see this demo: VSCode Walkthrough of Runnable Markdown from GenAI.

Subscribe to Docker Navigator to stay current on the latest Docker news.