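Baoyue Reading Summary

1. Keywords:

– Gemma 2

– Responsible AI

– Model performance

– Safety classifiers

– Model interpretability

2. Summary:

This article introduces new models in the Gemma 2 family. Gemma 2 2B performs strongly and can be deployed flexibly on many kinds of hardware. ShieldGemma is a set of safety classifiers for filtering harmful content. Gemma Scope makes the model's decision-making process more transparent. The article stresses that these releases reflect an ongoing commitment to responsible AI.

3. Main content:

– The Gemma 2 family

– Released in 27-billion and 9-billion parameter sizes; the 27B model performs strongly on the LMSYS Chatbot Arena.

– New additions

– Gemma 2 2B

– Learns from larger models through distillation, surpasses all GPT-3.5 models on the Chatbot Arena, and deploys efficiently on a wide range of hardware.

– ShieldGemma

– Detects and mitigates harmful content with state-of-the-art performance; comes in several sizes; open, to encourage collaboration.

– Gemma Scope

– Uses sparse autoencoders to make the model's decision-making process transparent; offers interactive demos.

– Looking ahead

– Emphasizes that openness, transparency, and collaboration are essential to developing safe, beneficial AI.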

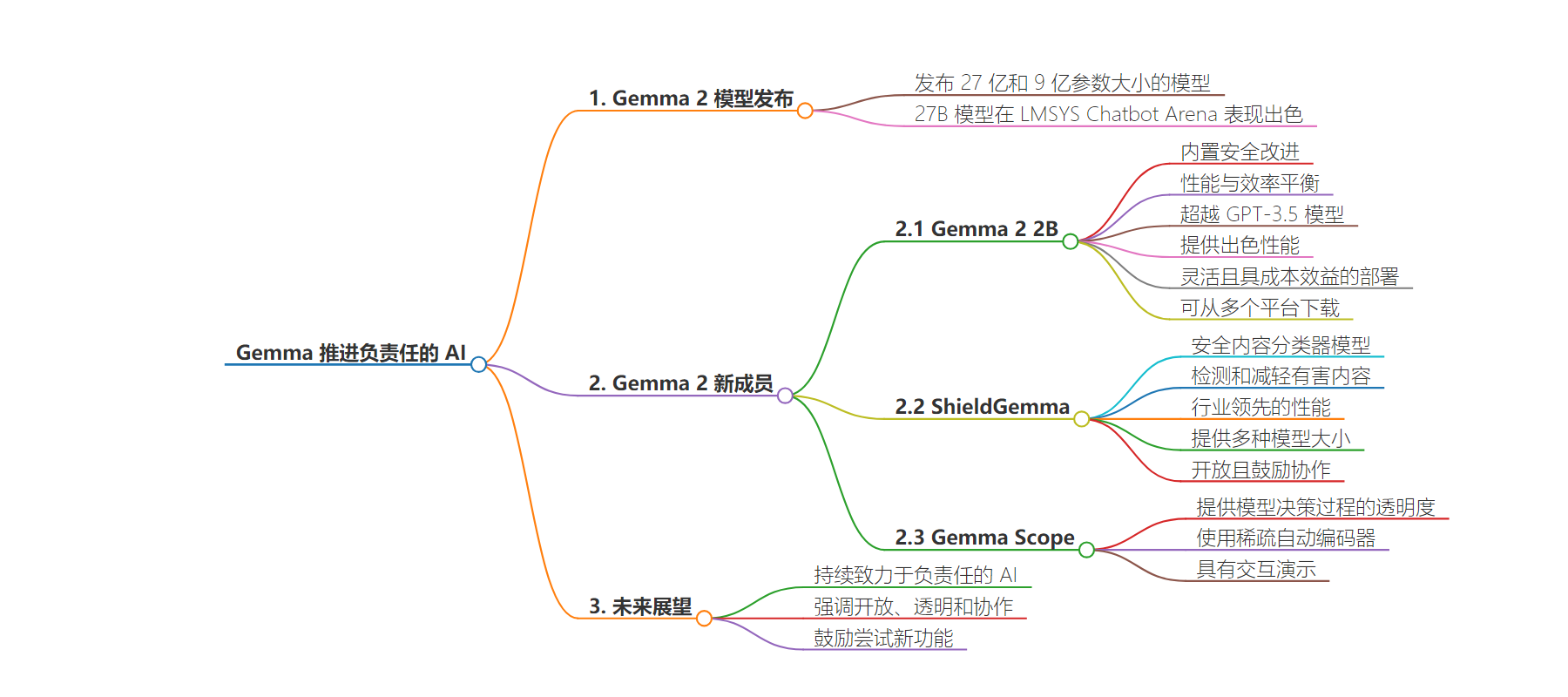


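Source: developers.googleblog.com

Authors: Neel Nanda, Tom Lieberum, Ludovic Peran, Kathleen Kenealy

Published: 2024/7/31 0:00

Language: English

Word count: 1087 words

Estimated reading time: 5 minutes

Rating: 92

Tags: AI models, safety classifiers, model interpretability, AI deployment, Google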

The original article follows.


In June, we released Gemma 2, our new best-in-class open models, in 27 billion (27B) and 9 billion (9B) parameter sizes. Since its debut, the 27B model quickly became one of the highest-ranking open models on the LMSYS Chatbot Arena leaderboard, even outperforming popular models more than twice its size in real conversations.

But Gemma is about more than just performance. It’s built on a foundation of responsible AI, prioritizing safety and accessibility. To support this commitment, we are excited to announce three new additions to the Gemma 2 family:

1. Gemma 2 2B – a brand-new version of our popular 2 billion (2B) parameter model, featuring built-in safety advancements and a powerful balance of performance and efficiency.

2. ShieldGemma – a suite of safety content classifier models, built upon Gemma 2, to filter the inputs and outputs of AI models and keep users safe.

3. Gemma Scope – a new model interpretability tool that offers unparalleled insight into our models’ inner workings.

With these additions, researchers and developers can now create safer customer experiences, gain unprecedented insights into our models, and confidently deploy powerful AI responsibly, right on device, unlocking new possibilities for innovation.

Gemma 2 2B: Experience Next-Gen Performance, Now On-Device

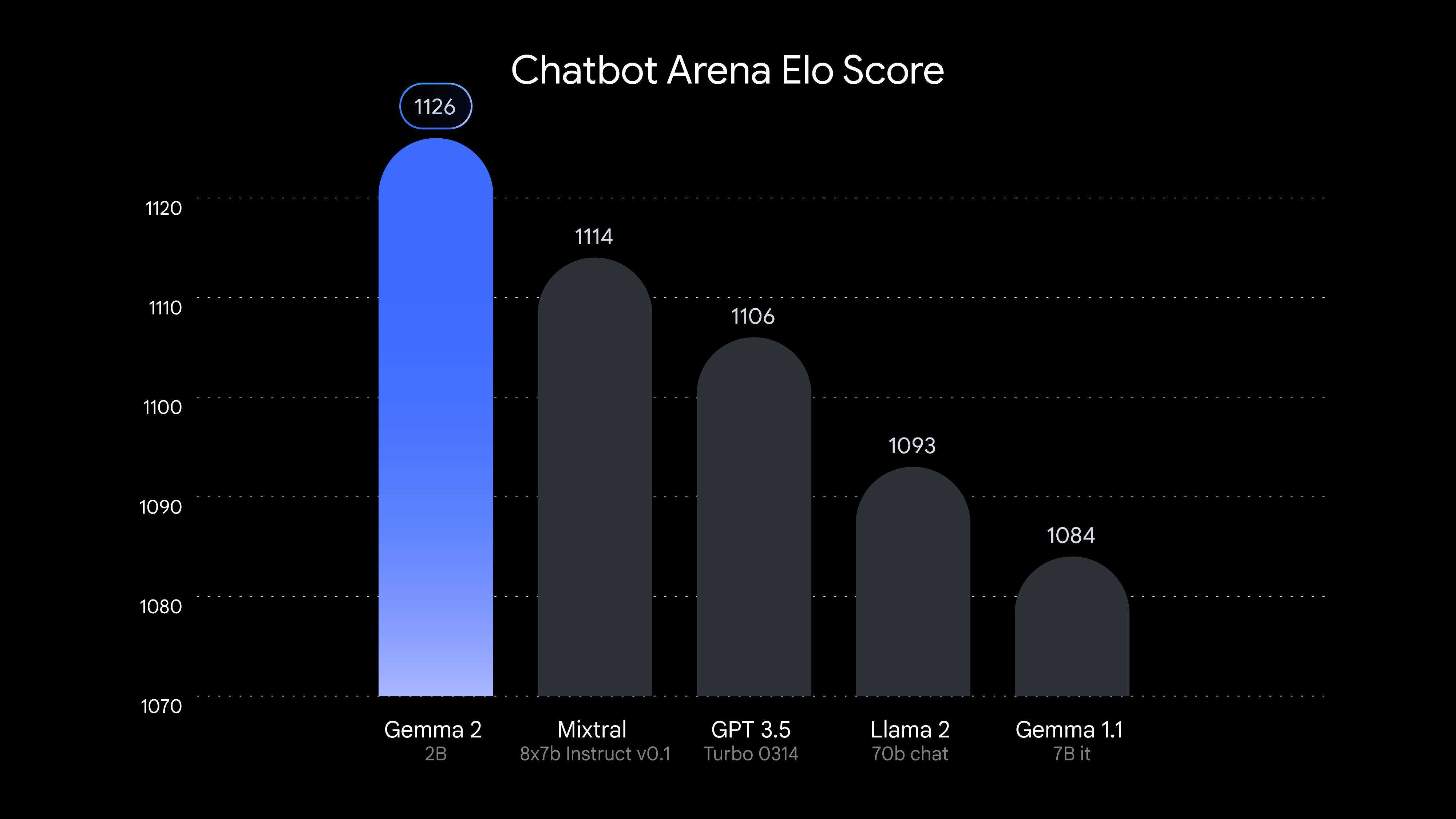

We’re excited to introduce the Gemma 2 2B model, a highly anticipated addition to the Gemma 2 family. This lightweight model produces outsized results by learning from larger models through distillation. In fact, Gemma 2 2B surpasses all GPT-3.5 models on the Chatbot Arena, demonstrating its exceptional conversational AI abilities.

LMSYS Chatbot Arena leaderboard scores captured on July 30th, 2024. Gemma 2 2B score ±10.
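To make "learning from larger models through distillation" concrete, here is a minimal sketch of a per-position distillation loss. It assumes the standard knowledge-distillation setup, where the student is trained against the teacher's full next-token probability distribution rather than only the single observed token; the vocabulary size and logits are made up, and this is not Google's training code.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits):
    """Cross-entropy of the student's next-token distribution against the
    teacher's soft targets at a single position: -sum_x P_T(x) * log P_S(x)."""
    p_teacher = softmax(teacher_logits)
    p_student = softmax(student_logits)
    return -sum(pt * math.log(ps) for pt, ps in zip(p_teacher, p_student))

def one_hot_loss(target_index, student_logits):
    """Ordinary next-token loss for comparison: only the observed token counts."""
    return -math.log(softmax(student_logits)[target_index])

# Toy 4-token vocabulary; the logits are illustrative numbers only.
teacher = [3.0, 2.5, 0.5, -1.0]
student = [1.0, 0.0, 0.5, 0.0]
print(distillation_loss(teacher, student))
print(distillation_loss(teacher, teacher))  # lower bound: the teacher's entropy
```

The distillation loss equals the teacher's entropy plus the KL divergence from teacher to student, so it bottoms out when the student copies the teacher's distribution. That gives the small model a richer signal per token (for example, that tokens 0 and 1 are both plausible) than the one-hot target does.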

Gemma 2 2B offers:

- Exceptional performance: Delivers best-in-class performance for its size, outperforming other open models in its category.

- Flexible and cost-effective deployment: Run Gemma 2 2B efficiently on a wide range of hardware—from edge devices and laptops to robust cloud deployments with Vertex AI and Google Kubernetes Engine (GKE). To further enhance its speed, it is optimized with the NVIDIA TensorRT-LLM library and is available as an NVIDIA NIM. This optimization targets various deployments, including data centers, cloud, local workstations, PCs, and edge devices — using NVIDIA RTX, NVIDIA GeForce RTX GPUs, or NVIDIA Jetson modules for edge AI. Additionally, Gemma 2 2B seamlessly integrates with Keras, JAX, Hugging Face, NVIDIA NeMo, Ollama, Gemma.cpp, and soon MediaPipe for streamlined development.
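A back-of-the-envelope calculation helps explain why a model of this size can run on laptops and edge devices. The sketch below assumes a nominal 2 billion parameters taken from the model name (the exact parameter count of the released checkpoint is not stated here) and counts weight storage only, ignoring activations, the KV cache, and runtime overhead. The precision list is illustrative and does not claim which formats any of the listed runtimes ship.

```python
def weight_memory_gib(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in GiB (2**30 bytes).
    Ignores activations, KV cache, and framework overhead."""
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 2**30

# Hypothetical parameter count: the nominal 2 billion implied by "2B".
NOMINAL_PARAMS = 2e9

for label, bits in [("float32", 32), ("bfloat16", 16), ("int8", 8), ("int4", 4)]:
    print(f"{label:>8}: {weight_memory_gib(NOMINAL_PARAMS, bits):.2f} GiB")
```

Under these assumptions the weights take roughly 7.45 GiB in float32, 3.73 GiB in bfloat16, 1.86 GiB in int8, and 0.93 GiB in int4, which is why lower-precision formats matter for on-device deployment.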

Starting today, you can download Gemma 2’s model weights from Kaggle, Hugging Face, and Vertex AI Model Garden. You can also try its capabilities in Google AI Studio.

ShieldGemma: Protecting Users with State-of-the-Art Safety Classifiers

Deploying open models responsibly to ensure engaging, safe, and inclusive AI outputs requires significant effort from developers and researchers. To help developers in this process, we’re introducing ShieldGemma, a series of state-of-the-art safety classifiers designed to detect and mitigate harmful content in AI models' inputs and outputs. ShieldGemma specifically targets four key areas of harm:

- Hate speech

- Harassment

- Sexually explicit content

- Dangerous content

These open classifiers complement our existing suite of safety classifiers in the Responsible AI Toolkit, which includes a methodology to build classifiers tailored to a specific policy with a limited number of data points, as well as existing Google Cloud off-the-shelf classifiers served via API.
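Filtering both the inputs and the outputs of a model can be sketched as a two-stage guard around generation. This is a minimal illustration, not ShieldGemma's API: it assumes a classifier that returns a violation probability for one policy at a time, and the names `guarded_generate`, `first_violation`, the 0.5 threshold, and the keyword-based stand-in classifier are all hypothetical. The policy names are ShieldGemma's four harm areas (hate speech, harassment, sexually explicit content, dangerous content).

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

# ShieldGemma's four harm areas, used here as policy names.
HARM_TYPES = ("hate speech", "harassment", "sexually explicit content", "dangerous content")

# Hypothetical interface: (text, harm_type) -> estimated probability of a violation.
Classifier = Callable[[str, str], float]

@dataclass
class GuardResult:
    allowed: bool
    stage: str                        # "input", "output", or "none" when nothing was blocked
    harm_type: Optional[str] = None
    score: float = 0.0
    response: Optional[str] = None

def first_violation(classifier: Classifier, text: str,
                    threshold: float) -> Optional[Tuple[str, float]]:
    """Score the text against each policy; return the first (harm_type, score)
    at or above the threshold, or None if every policy passes."""
    for harm in HARM_TYPES:
        score = classifier(text, harm)
        if score >= threshold:
            return harm, score
    return None

def guarded_generate(prompt: str, generate: Callable[[str], str],
                     classifier: Classifier, threshold: float = 0.5) -> GuardResult:
    """Filter the user prompt, call the model, then filter the model's response."""
    hit = first_violation(classifier, prompt, threshold)
    if hit:
        return GuardResult(False, "input", *hit)
    response = generate(prompt)
    hit = first_violation(classifier, response, threshold)
    if hit:
        return GuardResult(False, "output", *hit)
    return GuardResult(True, "none", response=response)

# Stand-ins for a real safety classifier and a real model (illustration only).
def keyword_classifier(text: str, harm_type: str) -> float:
    flagged = harm_type == "dangerous content" and "explosive" in text.lower()
    return 0.9 if flagged else 0.1

def echo_model(prompt: str) -> str:
    return f"Echo: {prompt}"

print(guarded_generate("How do I bake bread?", echo_model, keyword_classifier))
```

Checking the prompt first avoids spending generation compute on requests that will be refused anyway, while the second check catches harmful content the model produces from an innocuous prompt.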

Here’s how ShieldGemma can help you create safer, better AI applications:

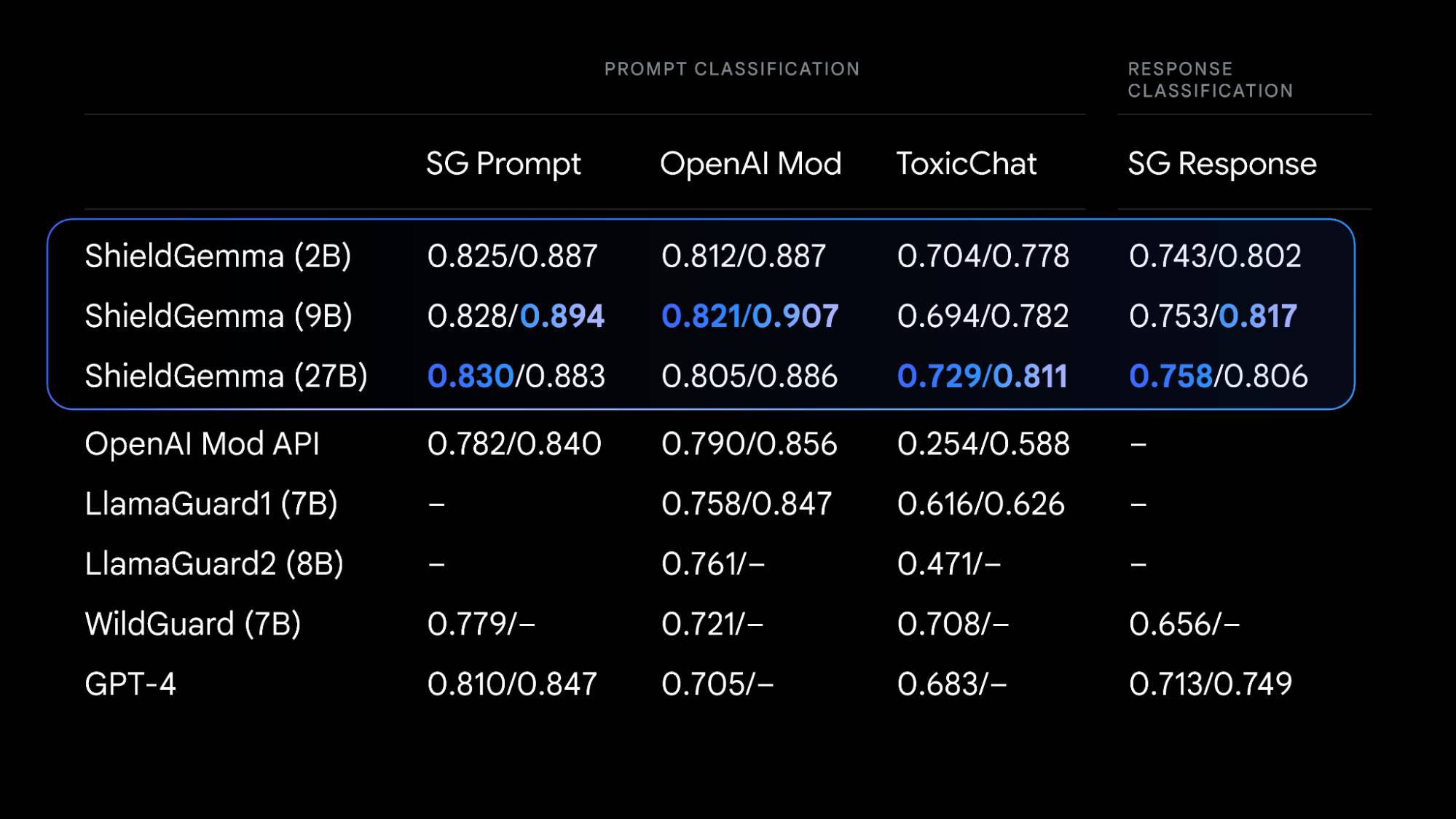

- SOTA performance: Built on top of Gemma 2, the ShieldGemma models are industry-leading safety classifiers.

- Flexible sizes: ShieldGemma offers various model sizes to meet diverse needs. The 2B model is ideal for online classification tasks, while the 9B and 27B versions provide higher performance for offline applications where latency is less of a concern. All sizes leverage NVIDIA speed optimizations for efficient performance across hardware.

- Open and collaborative: The open nature of ShieldGemma encourages transparency and collaboration within the AI community, contributing to the future of ML industry safety standards.

“As AI continues to mature, the entire industry will need to invest in developing high performance safety evaluators. We’re glad to see Google making this investment, and look forward to their continued involvement in our AI Safety Working Group.” ~ Rebecca Weiss, Executive Director, ML Commons

Evaluation results based on Optimal F1 (left) and AU-PRC (right); higher is better. We use α = 0 and T = 1 for calculating the probabilities. ShieldGemma (SG) Prompt and SG Response are our test datasets, and OpenAI Mod/ToxicChat are external benchmarks. The performance of baseline models on external datasets is sourced from Ghosh et al. (2024); Inan et al. (2023).
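The two metrics in the caption can be computed from per-example violation scores and gold labels. The sketch below is hedged in two ways. First, the caption does not define α and T; `violation_probability` assumes they enter a two-way softmax over the classifier's "Yes"/"No" scores, with T as a temperature and α as an additive offset on "Yes", so consult the technical report for the exact formula. Second, AU-PRC is estimated here as average precision, one common estimator; library implementations may handle tied scores differently. The logits and labels are made up.

```python
import math

def violation_probability(logit_yes, logit_no, temperature=1.0, alpha=0.0):
    """Assumed scoring rule: two-way softmax over 'Yes'/'No' logits with
    temperature T and an additive offset alpha on 'Yes'.
    With alpha=0 and T=1 this is a plain softmax (a sigmoid of the difference)."""
    a = logit_yes / temperature + alpha
    b = logit_no / temperature
    m = max(a, b)
    ea, eb = math.exp(a - m), math.exp(b - m)
    return ea / (ea + eb)

def optimal_f1(scores, labels):
    """Best F1 over every candidate threshold (predict positive when score >= threshold)."""
    best = 0.0
    for threshold in sorted(set(scores)):
        tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y)
        fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and not y)
        fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y)
        if tp == 0:
            continue
        precision = tp / (tp + fp)
        recall = tp / (tp + fn)
        best = max(best, 2 * precision * recall / (precision + recall))
    return best

def au_prc(scores, labels):
    """AU-PRC estimated as average precision: the mean, over positive examples,
    of the precision at that example's rank (ties keep input order)."""
    ranked = sorted(zip(scores, labels), key=lambda p: p[0], reverse=True)
    num_pos = sum(labels)
    tp, total = 0, 0.0
    for rank, (_, y) in enumerate(ranked, start=1):
        if y:
            tp += 1
            total += tp / rank
    return total / num_pos if num_pos else 0.0

# Made-up (Yes, No) logits and gold labels (1 = policy violation).
logits = [(2.0, -1.0), (0.5, 0.2), (-0.3, 0.4), (-2.0, 1.5)]
labels = [1, 0, 1, 0]
scores = [violation_probability(y, n, temperature=1.0, alpha=0.0) for y, n in logits]
print(optimal_f1(scores, labels), au_prc(scores, labels))
```

Optimal F1 reports the classifier at its single best operating point, while AU-PRC summarizes precision across all recall levels, so the two figures in the caption answer different questions about the same scores.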

Learn more about ShieldGemma, see full results in the technical report, and start building safer AI applications with our comprehensive Responsible Generative AI Toolkit.

Gemma Scope: Illuminating AI Decision-Making with Open Sparse Autoencoders

Gemma Scope offers researchers and developers unprecedented transparency into the decision-making processes of our Gemma 2 models. Acting like a powerful microscope, Gemma Scope uses sparse autoencoders (SAEs) to zoom in on specific points within the model and make its inner workings more interpretable.

These SAEs are specialized neural networks that help us unpack the dense, complex information processed by Gemma 2, expanding it into a form that’s easier to analyze and understand. By studying these expanded views, researchers can gain valuable insights into how Gemma 2 identifies patterns, processes information, and ultimately makes predictions. With Gemma Scope, we aim to help the AI research community discover how to build more understandable, accountable, and reliable AI systems.
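The "expanding" step can be illustrated with a toy sparse autoencoder forward pass. The sketch assumes a JumpReLU SAE, which is the architecture the Gemma Scope technical report describes (background knowledge, not stated in this post): an encoder maps a `d_model` activation to a wider `d_sae` feature vector, a per-feature threshold zeroes out weak features, and a decoder reconstructs the activation. The tiny hand-picked weights and the `l0_coeff` value are hypothetical, and the loss function only evaluates the objective; training through the L0 term needs straight-through gradient estimators, which are not shown.

```python
def matvec(matrix, vector):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def jumprelu(z, theta):
    """JumpReLU: keep each pre-activation only if it exceeds its feature's threshold."""
    return [zi if zi > ti else 0.0 for zi, ti in zip(z, theta)]

def sae_forward(x, W_enc, b_enc, theta, W_dec, b_dec):
    """Encode a d_model activation into a wider, sparse d_sae feature vector, then decode."""
    pre = [p + b for p, b in zip(matvec(W_enc, x), b_enc)]           # shape (d_sae,)
    features = jumprelu(pre, theta)                                   # sparse, shape (d_sae,)
    recon = [r + b for r, b in zip(matvec(W_dec, features), b_dec)]  # shape (d_model,)
    return features, recon

def sae_loss(x, recon, features, l0_coeff):
    """Squared reconstruction error plus a penalty on the number of active features."""
    mse = sum((a - b) ** 2 for a, b in zip(x, recon))
    l0 = sum(1 for f in features if f != 0.0)
    return mse + l0_coeff * l0

# Toy sizes: d_model = 2, d_sae = 4 (real SAEs are far wider than the residual stream).
W_enc = [[1, 0], [0, 1], [-1, 0], [0, -1]]   # (d_sae, d_model)
b_enc = [0.0] * 4
theta = [0.5] * 4                            # hypothetical per-feature thresholds
W_dec = [[1, 0, -1, 0], [0, 1, 0, -1]]       # (d_model, d_sae)
b_dec = [0.0, 0.0]

x = [2.0, 0.2]
features, recon = sae_forward(x, W_enc, b_enc, theta, W_dec, b_dec)
print(features, recon, sae_loss(x, recon, features, l0_coeff=0.01))
```

Here only one of four features fires, so the weak 0.2 component is dropped from the reconstruction. That is the trade-off the sparsity penalty controls: fewer active features are easier to interpret, at some cost in reconstruction accuracy.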

Here’s what makes Gemma Scope groundbreaking:

- Interactive demos: Explore SAE features and analyze model behavior on Neuronpedia without writing code.

Learn more about Gemma Scope on the Google DeepMind blog, technical report, and developer documentation.

A Future Built on Responsible AI

These releases represent our ongoing commitment to providing the AI community with the tools and resources needed to build a future where AI benefits everyone. We believe that open access, transparency, and collaboration are essential for developing safe and beneficial AI.

Get Started Today:

- Try Gemma Scope on Neuronpedia and uncover the inner workings of Gemma 2.

Join us on this exciting journey towards a more responsible and beneficial AI future!