Baoyue reading guide: summary

1. Keywords:

– RUBICON

– Human-AI conversation evaluation

– Domain-specific

– Evaluation system

– Software development

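2. Summary:

This article introduces RUBICON, presented at the AIware 2024 conference, a technique for evaluating conversations between humans and AI systems. Grounded in communication principles, it is an automated evaluation technique that generates a large set of domain-specific rubrics from a small amount of data and improves evaluation accuracy. It has been applied successfully in the Visual Studio IDE, and the authors aim to broaden its applicability.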

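3. Main points:

– Background

  – Generative AI makes it more challenging to assess the impact of AI assistants on user interactions.

– Introducing RUBICON

  – A rubric-based technique for evaluating domain-specific human-AI conversations.

  – Adapts communication principles to domain-specific settings.

– RUBICON’s method and evaluation

  – Builds on SPUR, using a language model to create summaries that assess conversation quality.

  – A new algorithm selects high-quality rubrics without human intervention.

  – Improves accuracy by 18% over SPUR and achieves near-perfect precision in 84% of cases involving unlabeled data.

– RUBICON-generated rubrics

  – Serve as a framework for understanding user needs and conversational norms; applied in the Visual Studio IDE.

  – Reveal flaws in conversations and in system design.

– Implications and outlook

  – A valuable evaluation system whose applicability will be broadened in future work.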

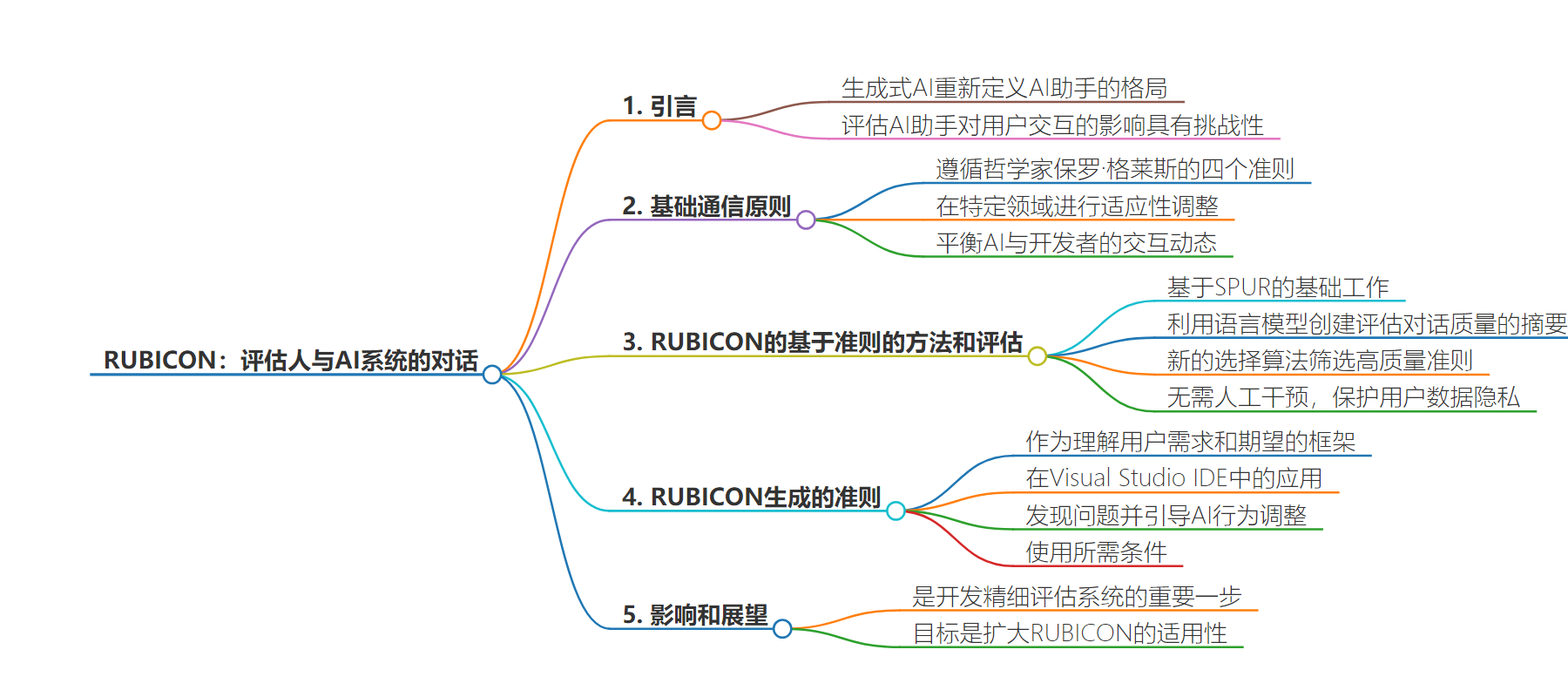

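Source: microsoft.com

Author: Brenda Potts

Published: 2024/7/15 16:00

Language: English

Word count: 1116

Estimated reading time: 5 minutes

Score: 90

Tags: AI evaluation, conversational AI, human-computer interaction, RUBICON framework, domain-specific AI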

The original article follows.

This paper has been accepted at the 1st ACM International Conference on AI-powered Software (AIware 2024), co-located with FSE 2024. AIware is the premier international forum on AI-powered software.

Generative AI has redefined the landscape of AI assistants in software development, with innovations like GitHub Copilot providing real-time, chat-based programming support. As these tools increase in sophistication and domain specialization, assessing their impact on user interactions becomes more challenging. Developers frequently question whether modifications to their AI assistants genuinely improve the user experience, as indicated in a recent paper.

Traditional feedback mechanisms, such as simple thumbs-up or thumbs-down ratings, fall short in capturing the complexities of interactions within specialized settings, where nuanced data is often sparse. To address this issue, we introduce “RUBICON: Rubric-based Evaluation of Domain Specific Human-AI Conversations,” presented at AIware 2024. RUBICON is an automated assessment technique that transforms a minimal dataset into an extensive array of domain-specific rubrics, helping ensure that updates not only modify but meaningfully improve user interactions.

Foundational communication principles

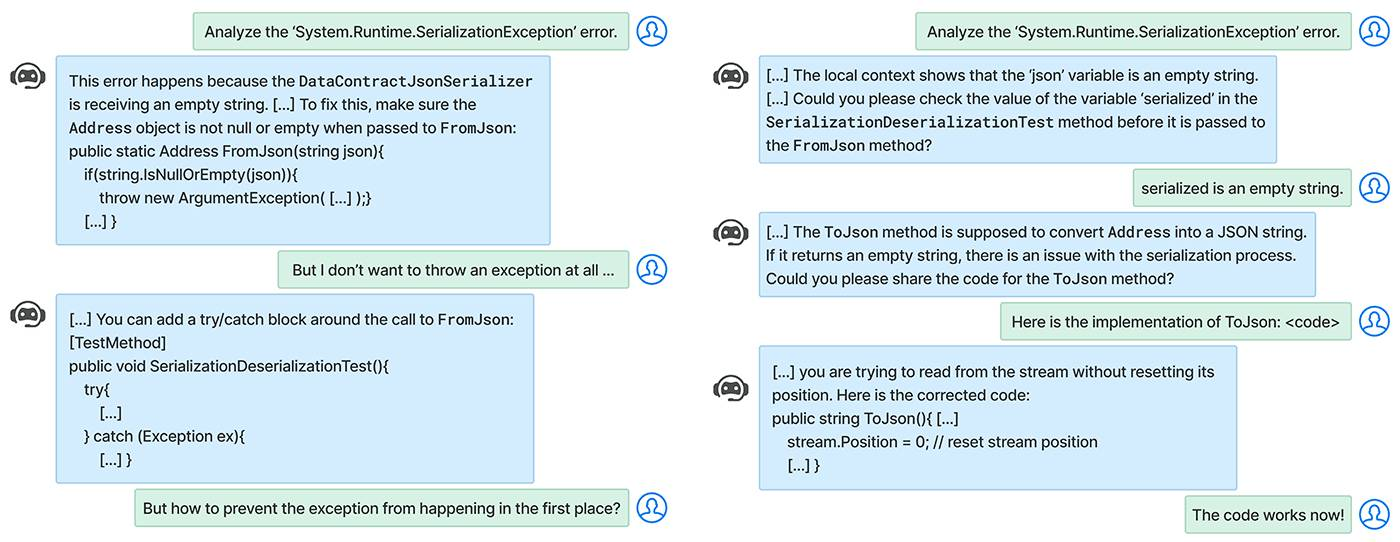

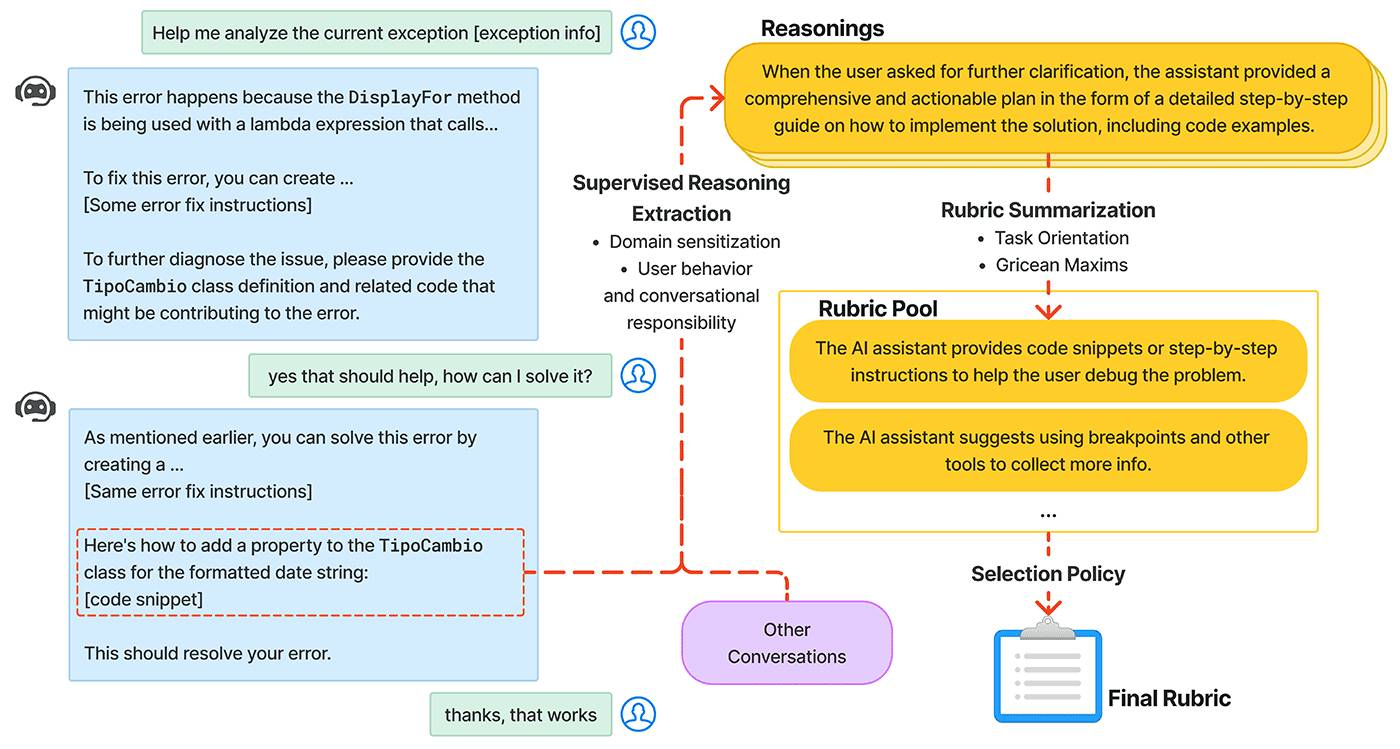

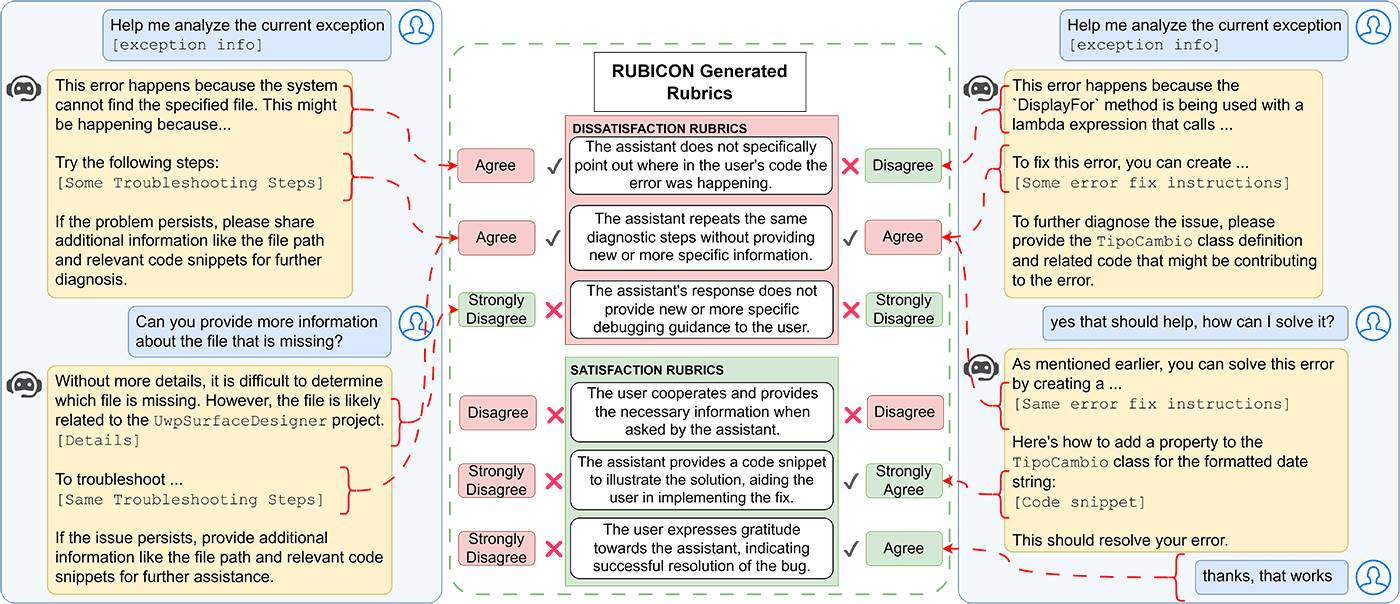

Effective conversation, whether human-to-human or human-to-AI, adheres to four maxims outlined by philosopher Paul Grice: quantity, quality, relation, and manner, ensuring that communication is concise, truthful, pertinent, and clear. In AI applications, they help create interactions that feel natural and engaging, fostering trust and empathy. Within domain-specific settings, RUBICON adapts these principles to ensure they are context-aware, improving the utility and clarity of interactions. For example, in Visual Studio, the AI helps the developer debug a program by providing detailed explanations and relevant code examples, shown in Figure 1. In Figure 2, its responses reflect that it’s guided by context.

In task-oriented environments, it’s important to assess how well a conversation aligns with user expectations and assists in achieving their goals. Conversations are only useful if they advance the user’s interests, and challenges can arise when users have misaligned expectations of the AI’s capabilities or when the AI directs the conversation too forcefully, prioritizing its methods over the user’s preferences. RUBICON balances the interaction dynamics between the AI and developer, promoting constructive exchanges without overwhelming or under-engaging. It calibrates the extent to which the AI should hypothesize and resolve issues versus how much it should leave to the developer.

RUBICON’s rubric-based method and evaluation

RUBICON builds on SPUR (Supervised Prompting for User Satisfaction Rubrics), a recently introduced framework, extending its scope and generating a broad spectrum of candidate rubrics from each batch of data. It uses a language model to create concise summaries that assess the quality of conversations, emphasizing communication principles, task orientation, and domain specificity. These summaries identify signals of user satisfaction and outline the shared responsibilities of the user and the AI in achieving task objectives. They are then refined into rubrics.

RUBICON’s novel selection algorithm sifts through numerous candidates to identify a select group of high-quality rubrics, enhancing their predictive accuracy in practical applications, as illustrated in Figure 3. The technique doesn’t require human intervention and can be trained directly on anonymized conversational data, helping to ensure customer data privacy while still extracting the important features for analysis.
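To make the idea of sifting candidates concrete, here is a minimal sketch of one simple way to rank candidate rubrics by how well they separate positive from negative conversations. It is not the selection algorithm from the paper: the scoring scheme (one score per rubric per conversation, between 0 and 1), the function names `discrimination` and `select_rubrics`, and the sample data are all assumptions made for illustration.

```python
from statistics import mean


def discrimination(scores, labels):
    """Absolute gap between a rubric's mean score on positive and negative conversations.

    Assumes both classes appear at least once in `labels`.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    return abs(mean(pos) - mean(neg))


def select_rubrics(candidates, labels, k):
    """Keep the k candidate rubrics whose scores best separate the two classes."""
    ranked = sorted(
        candidates,
        key=lambda rubric: discrimination(candidates[rubric], labels),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical data: rubric text -> one score per labeled conversation
# (higher means the rubric applies more strongly to that conversation).
labels = [1, 1, 0, 0]  # 1 = positive conversation, 0 = negative
candidates = {
    "The AI asked clarifying questions before proposing a fix": [0.9, 0.8, 0.2, 0.1],
    "The AI gave a mostly surface-level solution to the problem": [0.1, 0.2, 0.9, 0.7],
    "The conversation mentioned code": [0.9, 0.9, 0.9, 0.8],
}

selected = select_rubrics(candidates, labels, k=2)
for rubric in selected:
    print(rubric)
```

In this toy data the third rubric scores high on every conversation, so it carries almost no signal about satisfaction and is dropped, while the two rubrics that track the labels are kept.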

The effectiveness of RUBICON’s method is evidenced by its rubrics, which show an 18% increase in accuracy over SPUR in classifying conversations as positive or negative, as shown in Figure 4. Additionally, RUBICON achieves near-perfect precision in predicting conversation labels in 84% of cases involving unlabeled data.
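A figure such as "near-perfect precision in 84% of cases" combines two quantities: the share of conversations for which a confident prediction is made, and how often those predictions are correct. The sketch below shows one way such numbers could be computed, assuming each conversation has a net satisfaction score and that the classifier abstains when the score falls inside a margin around zero. The margin, the scores, and the function names are hypothetical and are not taken from the paper.

```python
def classify(net_score, margin):
    """Return 1 (positive), 0 (negative), or None (abstain) for one conversation."""
    if net_score >= margin:
        return 1
    if net_score <= -margin:
        return 0
    return None


def coverage_and_precision(net_scores, labels, margin):
    """Share of conversations that receive a prediction, and the share of those that are correct."""
    predictions = [(classify(s, margin), y) for s, y in zip(net_scores, labels)]
    decided = [(p, y) for p, y in predictions if p is not None]
    coverage = len(decided) / len(labels)
    correct = sum(1 for p, y in decided if p == y)
    precision = correct / len(decided) if decided else 0.0
    return coverage, precision


# Hypothetical net scores (satisfaction signals minus dissatisfaction signals)
# and ground-truth labels, used only to exercise the functions.
net_scores = [3, 2, -4, -1, 0.5, -3, 4, -2]
labels = [1, 1, 0, 1, 0, 0, 1, 0]

coverage, precision = coverage_and_precision(net_scores, labels, margin=2)
print(f"coverage={coverage:.2f}, precision={precision:.2f}")
```

Raising the margin generally trades coverage for precision: fewer conversations receive a label, but the labels that are assigned are more reliable.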

RUBICON-generated rubrics

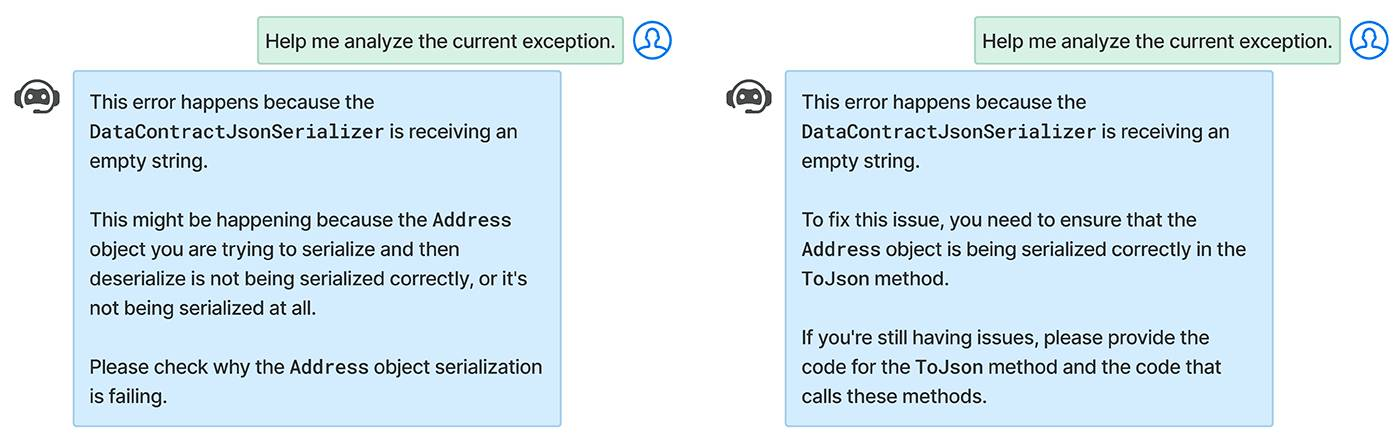

RUBICON-generated rubrics serve as a framework for understanding user needs, expectations, and conversational norms. These rubrics have been successfully implemented in the Visual Studio IDE, where they have guided analysis of over 12,000 debugging conversations, offering valuable insights into the effectiveness of modifications made to the assistant and facilitating rapid iteration and improvement. For example, the rubrics “The AI gave a solution too quickly, rather than asking the user for more information and trying to find the root cause of the issue,” or “The AI gave a mostly surface-level solution to the problem,” have indicated issues where the assistant prematurely offered solutions without gathering sufficient information. These findings led to adjustments in the AI’s behavior, making it more investigative and collaborative.

Beyond conversational dynamics, the rubrics also identify systemic design flaws not directly tied to the conversational assistant. These include user interface issues that impede the integration of new code and gaps in user education regarding the assistant’s capabilities. To use RUBICON, developers need a small set of labeled conversations from their AI assistant and specifically designed prompts that reflect the criteria for task progression and completion. The methodology and examples of these rubrics are detailed in the paper.

Implications and looking ahead

Developers of AI assistants value clear insights into the performance of their interfaces. RUBICON represents a valuable step toward developing a refined evaluation system that is sensitive to domain-specific tasks, adaptable to changing usage patterns, efficient, easy to implement, and privacy-conscious. A robust evaluation system like RUBICON can help to improve the quality of these tools without compromising user privacy or data security. As we look ahead, our goal is to broaden the applicability of RUBICON beyond just debugging in AI assistants like GitHub Copilot. We aim to support additional tasks like migration and scaffolding within IDEs, extending its utility to other chat-based Copilot experiences across various products.
