包阅 Reading Guide Summary

1. `LlamaIndex`、`LlamaExtract`、`RAG`、`Structured Extraction`、`Webinars`

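2. This is the LlamaIndex newsletter for July 30, 2024, covering product updates, office hours, new feature releases, guides, tutorials, and webinars. Highlights include the LlamaExtract beta launch and a set of new capabilities such as structured extraction.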

3.

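– LlamaIndex newsletter
  – Office hours
    – A 15-30 minute Zoom chat about your project, with free swag
  – Highlights
    – LlamaExtract beta launched for structured data extraction from unstructured documents, available via UI and API
    – Structured extraction for LLM-powered pipelines, with support for asynchronous operations and streaming
    – Integration with Ollama for tool calling
    – Support for building LLM applications with Mistral Large-2
  – Guides
    – Guide to automated structured extraction for RAG
  – Tutorials
  – Webinars
    – Webinar with the ColPali authors on efficient document retrieval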

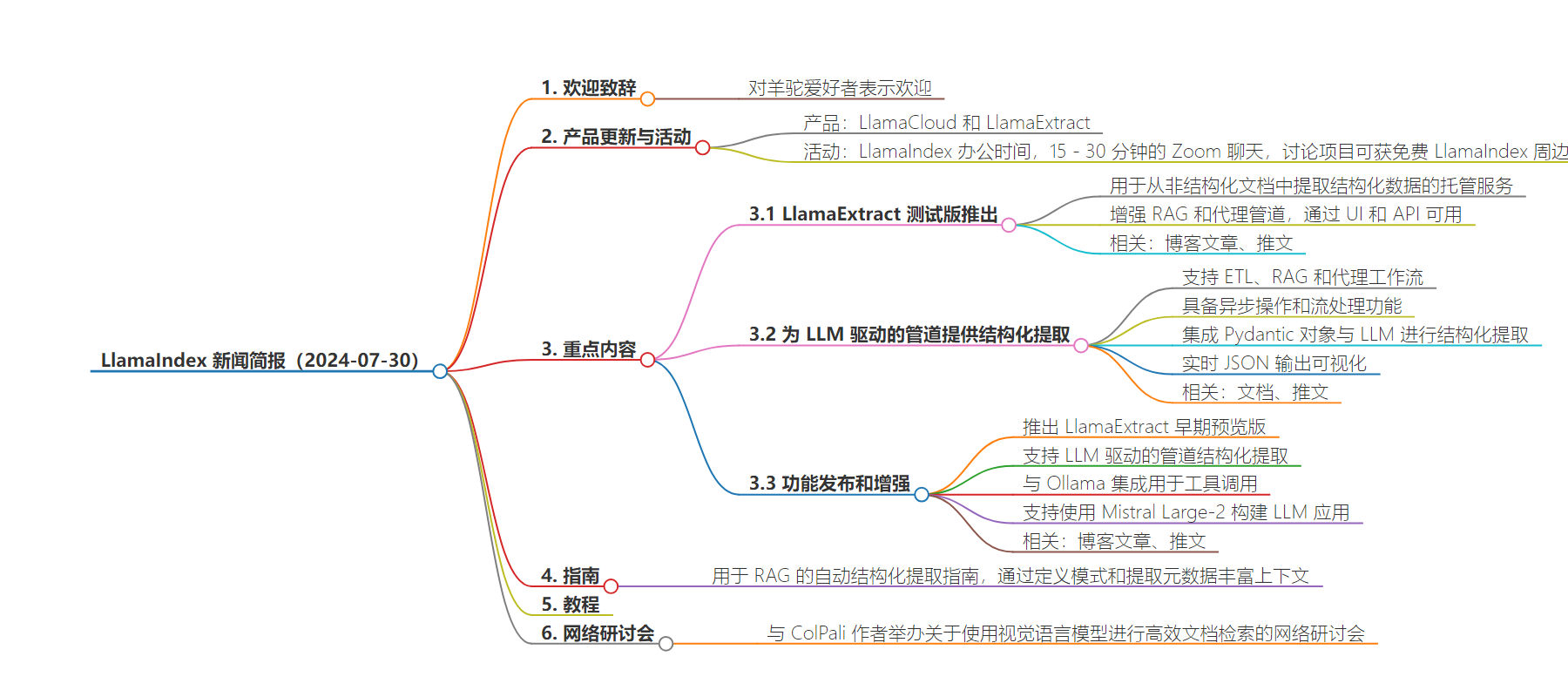

Mind map:

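Article URL: https://www.llamaindex.ai/blog/llamaindex-newsletter-2024-07-30

Source: llamaindex.ai

Author: LlamaIndex

Published: 2024/7/30 0:00

Language: English

Word count: 365

Estimated reading time: 2 minutes

Score: 93

Tags: LlamaIndex, data extraction, LLM applications, RAG, agent pipelines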

The original content follows


Hello, Llama Enthusiasts! 🦙

Welcome to this week’s edition of the LlamaIndex newsletter! We’re excited to bring you the latest updates on our products, including LlamaCloud and LlamaExtract, comprehensive guides, detailed tutorials, and upcoming webinars.

LlamaIndex Office Hours:

Building agents or RAG applications? Join us for a 15-30 minute Zoom chat about your projects, and we’ll thank you with free LlamaIndex swag.

Sign up for our office hours today

🤩 The highlights:

- LlamaExtract Beta Launched: Our managed service for structured data extraction from unstructured documents, enhancing RAG and agent pipelines via both UI and API. Blogpost, Tweet.

- Structured Extraction for LLM-powered Pipelines: Structured extraction capabilities for ETL, RAG, and agent workflows, featuring asynchronous operations and streaming. Integrate a Pydantic object with your LLM for structured extraction at the chunk or document level with real-time JSON output visualization. Docs, Tweet.

✨ Feature Releases and Enhancements:

- We have launched LlamaExtract as an early preview of our managed service for structured data extraction from unstructured documents, enhancing RAG and agent pipelines, now available in beta via UI and API. Blogpost, Tweet.

- We have launched structured extraction for LLM-powered ETL, RAG, and agent pipelines, featuring full support for asynchronous operations and streaming. Simply integrate a Pydantic object with your LLM using as_structured_llm(…), enabling chunk-level or document-level structured extraction and real-time JSON output visualization. Docs, Tweet.

- We have integrated with Ollama for tool calling. This lets you build agents with local models like llama3.1. Docs, Tweet.

- We have day-0 support for building LLM applications with Mistral Large-2. Tweet.
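To make the structured extraction idea above more concrete, here is a minimal, library-free sketch of the pattern: declare a schema, ask the model for JSON that matches it, then validate the result into a typed object. It does not call LlamaIndex or Pydantic. The `Invoice` dataclass stands in for a Pydantic object, and `fake_llm` and `extract` are hypothetical helpers invented for this illustration, not part of any LlamaIndex API.

```python
import json
from dataclasses import dataclass, fields


@dataclass
class Invoice:
    """Hypothetical target schema; stands in for a Pydantic object."""
    vendor: str
    total: float
    currency: str


def fake_llm(prompt: str) -> str:
    """Stub standing in for a real LLM call; always returns the same JSON."""
    return '{"vendor": "Acme Corp", "total": 1250.5, "currency": "USD"}'


def extract(text: str, schema: type, llm=fake_llm):
    """Ask the LLM for JSON matching `schema`, then validate and build the object."""
    names = [f.name for f in fields(schema)]
    prompt = f"Return JSON with keys {names} for this text:\n{text}"
    data = json.loads(llm(prompt))

    kwargs = {}
    for f in fields(schema):
        if f.name not in data:
            raise ValueError(f"missing field: {f.name}")
        value = data[f.name]
        # f.type is the annotated class (str, float) for this simple schema
        if not isinstance(value, f.type):
            raise TypeError(f"{f.name} should be {f.type.__name__}")
        kwargs[f.name] = value
    return schema(**kwargs)


invoice = extract("Invoice from Acme Corp, total USD 1,250.50", Invoice)
print(invoice)
```

In a real pipeline, the stub would be replaced by an actual model call, and a validation library such as Pydantic would handle type coercion and nested fields instead of the hand-written checks here.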

🗺️ Guides:

- Guide to Automated Structured Extraction for RAG: improve retrieval and synthesis in RAG pipelines with LlamaExtract by defining schemas and extracting metadata for richer context.
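One way extracted metadata can help retrieval is by narrowing the candidate chunks before ranking. The sketch below is only an illustration of that idea under stated assumptions, not the guide's method: `Chunk` and `retrieve` are hypothetical names, the metadata values are made up, and a simple word-overlap score stands in for embedding similarity.

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    """A text chunk plus metadata assumed to come from a prior extraction step."""
    text: str
    metadata: dict = field(default_factory=dict)


def retrieve(chunks, query, filters=None, top_k=2):
    """Keep chunks whose metadata matches every filter, then rank by word overlap."""
    filters = filters or {}
    candidates = [
        c for c in chunks
        if all(c.metadata.get(key) == value for key, value in filters.items())
    ]
    terms = set(query.lower().split())

    def score(chunk):
        # Toy relevance score: number of query words that appear in the chunk
        return len(terms & set(chunk.text.lower().split()))

    return sorted(candidates, key=score, reverse=True)[:top_k]


chunks = [
    Chunk("Revenue grew 12% year over year.", {"company": "Acme", "year": 2023}),
    Chunk("Revenue fell 3% year over year.", {"company": "Globex", "year": 2023}),
    Chunk("Revenue grew 8% year over year.", {"company": "Acme", "year": 2022}),
]

hits = retrieve(chunks, "revenue growth", filters={"company": "Acme", "year": 2023})
for hit in hits:
    print(hit.text, hit.metadata)
```

Without the filter, all three chunks look almost identical to a text-only ranker; the metadata is what separates them.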

✍️ Tutorials:

🎤 Webinars:

- Webinar with ColPali authors on Efficient Document Retrieval with Vision Language Models.