Baoyue Reading Guide Summary

1.

– `Prompty`, `LLM prompts`, `asynchronous operation`, `application`, `orchestrator`

2.

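Prompty is used to build and manage LLM prompts. The functions it generates use the prompt description to interact with the LLM and can be wrapped in asynchronous operations. Including Prompty assets in an application is simple. Only a limited number of orchestrators are supported at present, but additional code generators can be submitted. Prompty currently focuses on building prompts for cloud-hosted LLMs, while the industry is shifting toward smaller, more focused tools.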

3.

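– Prompty can be used to build and manage LLM prompts

– The generated functions use the prompt description to interact with the LLM and can be wrapped in an asynchronous operation

– This keeps AI application code small, and separating the prompt definition makes LLM interactions easier to update

– Including Prompty assets in an application

– Choose an orchestrator and automatically generate the code snippets

– Only a limited number of orchestrators are supported at present, but the project is open source, so additional code generators can be submitted

– Prompty's current state and direction

– Currently focused on building prompts for cloud-hosted LLMs

– The industry is shifting toward smaller, more focused tools, such as Microsoft's Phi Silica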

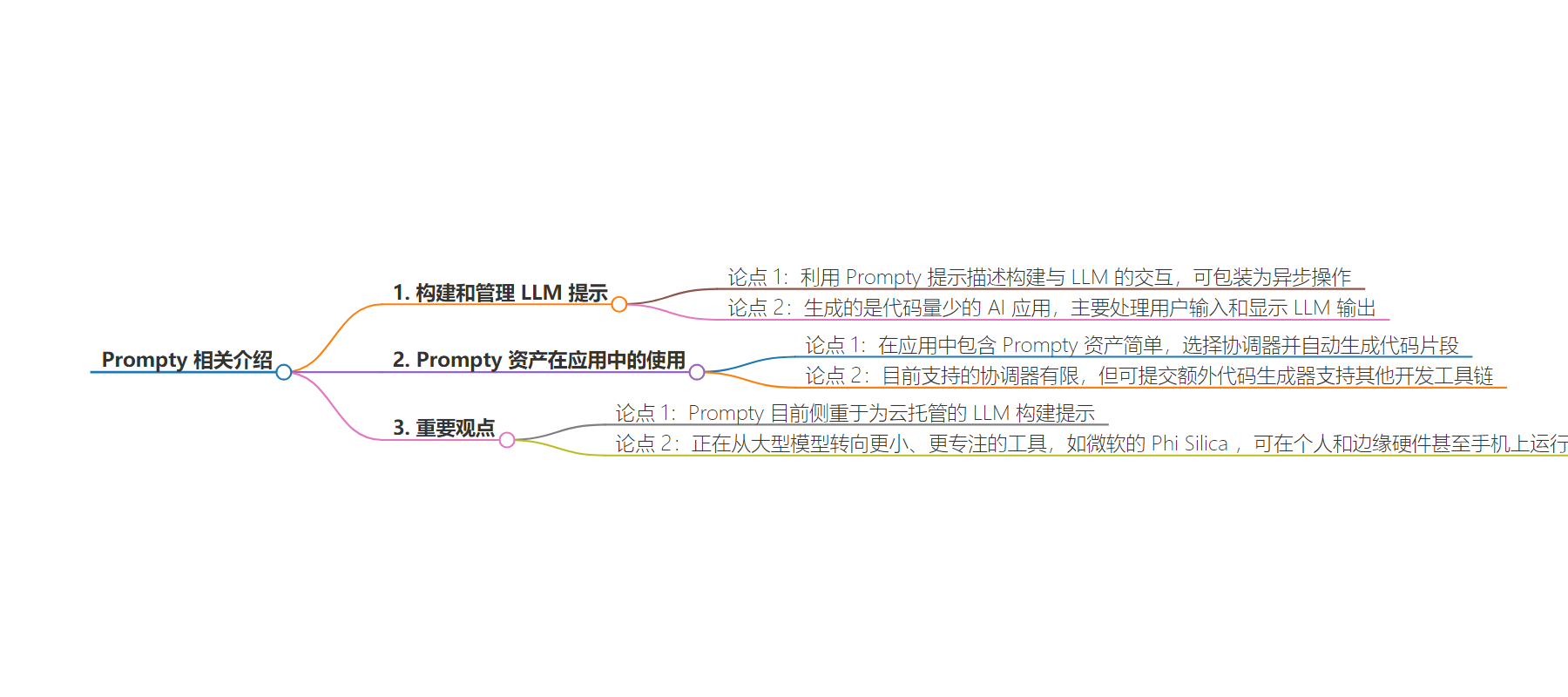

Mind map:

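Article URL: https://www.infoworld.com/article/3477435/build-and-manage-llm-prompts-with-prompty.html

Source: infoworld.com

Author: InfoWorld

Published: 2024/7/25 9:00

Language: English

Word count: 1312

Estimated reading time: 6 minutes

Score: 92

Tags: AI development, prompt engineering, large language models, Microsoft, Visual Studio Code

The original text follows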


The resulting functions use the Prompty prompt description to build the interaction with the LLM, which you can wrap in an asynchronous operation. The result is an AI application with very little code beyond assembling user inputs and displaying LLM outputs. Much of the heavy lifting is handled by tools like Semantic Kernel, and by separating the prompt definition from your application, it’s possible to update LLM interactions outside of an application, using the .prompty asset file.
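To make the separation between prompt asset and application code concrete, here is a minimal Python sketch. It does not use the Prompty runtime or Semantic Kernel. The asset text is a simplified stand-in for a .prompty file, and `load_asset`, `render`, `fake_llm`, and `answer` are hypothetical names invented for this illustration. The model call is a stub, so the example only shows the shape of the flow: load the prompt description, fill in user input, and await the result.

```python
import asyncio
import re

# Hypothetical, simplified stand-in for a .prompty asset: front matter
# between '---' lines, followed by a template with {{placeholders}}.
ASSET_TEXT = """---
name: support_answer
description: Answer a customer question
---
system:
You are a concise support assistant.

user:
{{question}}
"""


def load_asset(text):
    """Split the asset into flat 'key: value' metadata and the template body."""
    _, front_matter, body = text.split("---\n", 2)
    metadata = {}
    for line in front_matter.splitlines():
        key, _, value = line.partition(":")
        metadata[key.strip()] = value.strip()
    return metadata, body.strip()


def render(template, inputs):
    """Fill each {{name}} placeholder from the inputs dictionary."""
    return re.sub(
        r"\{\{\s*(\w+)\s*\}\}",
        lambda match: str(inputs[match.group(1)]),
        template,
    )


async def fake_llm(prompt):
    """Stub for the model call; a real application would call its orchestrator here."""
    await asyncio.sleep(0)  # yield control, as a real network call would
    return f"[stub reply to {len(prompt)} prompt characters]"


async def answer(question, llm=fake_llm):
    """Assemble user input, render the prompt, and await the model."""
    _, template = load_asset(ASSET_TEXT)
    return await llm(render(template, {"question": question}))


if __name__ == "__main__":
    print(asyncio.run(answer("How do I reset my password?")))
```

Because the prompt lives in `ASSET_TEXT` rather than in `answer`, the wording of the system message can change without touching the application logic, which is the property the paragraph above attributes to the .prompty asset file.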

Including Prompty assets in your application is as simple as choosing the orchestrator and automatically generating the code snippets to include the prompt in your application. Only a limited number of orchestrators are supported at present, but this is an open source project, so you can submit additional code generators to support alternative application development toolchains.
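One way to picture pluggable code generators is a small registry keyed by orchestrator name. The sketch below is an assumption about how such a mechanism could look, not a description of how the Prompty tooling is implemented. The registry, the orchestrator name `example-orchestrator`, and the emitted calls `load_prompt` and `run_prompt` are all placeholders made up for illustration.

```python
from typing import Callable, Dict

# Hypothetical registry: orchestrator name -> function that emits a snippet.
GENERATORS: Dict[str, Callable[[str], str]] = {}


def register(orchestrator: str):
    """Decorator that adds a snippet generator for one orchestrator."""
    def wrap(func):
        GENERATORS[orchestrator] = func
        return func
    return wrap


@register("example-orchestrator")
def example_snippet(asset_path: str) -> str:
    # The emitted call names are placeholders, not a real library API.
    return (
        f'prompt = load_prompt("{asset_path}")\n'
        "result = run_prompt(prompt, inputs)"
    )


def generate(orchestrator: str, asset_path: str) -> str:
    """Emit a snippet, or fail clearly when no generator is registered."""
    try:
        return GENERATORS[orchestrator](asset_path)
    except KeyError:
        supported = ", ".join(sorted(GENERATORS))
        raise ValueError(
            f"no generator for {orchestrator!r}; supported: {supported}"
        ) from None


if __name__ == "__main__":
    print(generate("example-orchestrator", "chat.prompty"))
```

Under this design, supporting another toolchain means registering one more function, which mirrors the idea of contributing an additional code generator to an open source project.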

That last point is particularly important: Prompty is currently focused on building prompts for cloud-hosted LLMs, but we’re in a shift from large models to smaller, more focused tools, such as Microsoft’s Phi Silica, which are designed to run on neural processing units on personal and edge hardware, and even on phones.