包阅导读总结

1.

关键词:NVIDIA NIM、Hugging Face、Inference-as-a-Service、AI 模型、DGX Cloud

2.

总结:Hugging Face 推出由 NVIDIA NIM 支持的推理即服务功能,方便开发者使用 NVIDIA 加速推理流行的 AI 模型,目前仅支持部分 API,按计算时间计费,还在拓展功能,且与 NVIDIA 有更多合作。

3.

主要内容:

– NVIDIA NIM 推理即服务在 Hugging Face 上线

– 支持快速部署如 Llama 3 家族等领先大语言模型

– 需企业 Hub 组织访问权限和细粒度令牌认证

– 服务优势

– 优化计算资源,基于 NVIDIA DGX Cloud

– 帮助开发者快速原型和部署开源 AI 模型

– 服务现状和未来

– 目前支持有限 API,按计算时间计费

– 正扩展功能,增加模型

– 与 NVIDIA 合作集成 TensorRT-LLM 库

– 除新服务,还提供 Train on DGX Cloud 训练服务

– 各方反应

– Hugging Face CEO 表示兴奋

– Kaggle Master 称代码与 OpenAI API 兼容

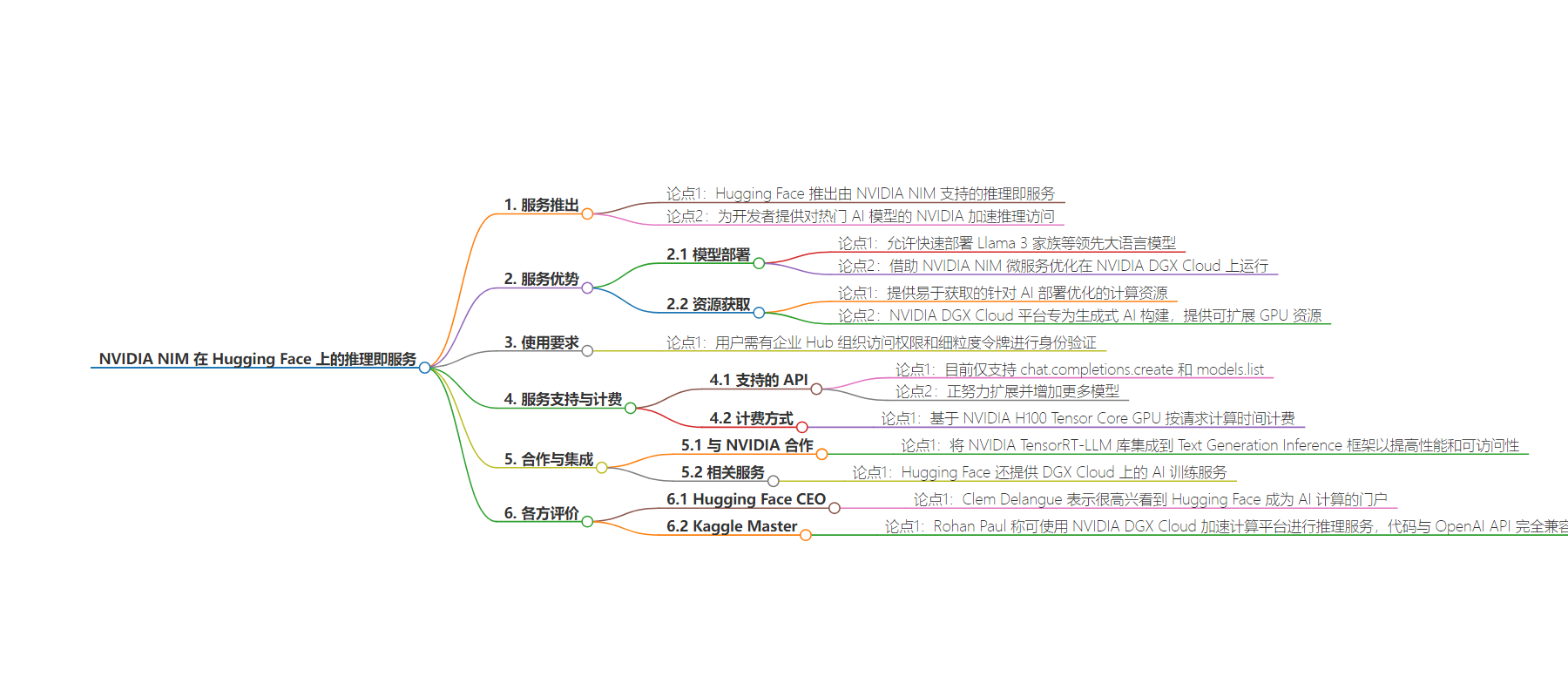

思维导图:

文章来源:infoq.com

作者:Daniel Dominguez

发布时间:2024/8/11 0:00

语言:英文

总字数:366字

预计阅读时间:2分钟

评分:84分

标签:AI 推理,NVIDIA NIM,Hugging Face,DGX Cloud,生成式 AI

以下为原文内容

本内容来源于用户推荐转载,旨在分享知识与观点,如有侵权请联系删除 联系邮箱 media@ilingban.com

Hugging Face has announced the launch of an inference-as-a-service capability powered by NVIDIA NIM. This new service will provide developers easy access to NVIDIA-accelerated inference for popular AI models.

The new service allows developers to rapidly deploy leading large language models such as the Llama 3 family and Mistral AI models with optimization from NVIDIA NIM microservices running on NVIDIA DGX Cloud. This will help developers quickly prototype with open-source AI models hosted on the Hugging Face Hub and deploy them in production.

The Hugging Face inference-as-a-service on NVIDIA DGX Cloud powered by NIM microservices offers easy access to compute resources that are optimized for AI deployment. The NVIDIA DGX Cloud platform is purpose-built for generative AI and provides scalable GPU resources that support every step of AI development, from prototype to production.

To use the service, users must have access to an Enterprise Hub organization and a fine-grained token for authentication. The NVIDIA NIM Endpoints for supported Generative AI models can be found on the model page of the Hugging Face Hub.

Currently, the service only supports the chat.completions.create and models.list APIs, but Hugging Face is working on extending this while adding more models. Usage of Hugging Face Inference-as-a-Service on DGX Cloud is billed based on the compute time spent per request, using NVIDIA H100 Tensor Core GPUs.

Hugging Face is also working with NVIDIA to integrate the NVIDIA TensorRT-LLM library into Hugging Face’s Text Generation Inference (TGI) framework to improve AI inference performance and accessibility. In addition to the new Inference-as-a-Service, Hugging Face also offers Train on DGX Cloud, an AI training service.

Clem Delangue, CEO at Hugging Face, posted on his X account:

Very excited to see that Hugging Face is becoming the gateway for AI compute!

And Kaggle Master Rohan Paulshared a post on X saying:

So, we can use open models with the accelerated compute platform of NVIDIA DGX Cloud for inference serving. Code is fully compatible with OpenAI API, allowing you to use the openai’s sdk for inference.

At SIGGRAPH, NVIDIA also introduced generative AI models and NIM microservices for the OpenUSD framework to accelerate developers’ abilities to build highly accurate virtual worlds for the next evolution of AI.