包阅导读总结

1. 关键词:

– 营销分析

– 大型语言模型

– 语义搜索

– SQL 生成

– 表格数据分析

2. 总结:

本文介绍如何利用大型语言模型增强营销分析,包括对语言模型和营销分析的基本理解,阐述了语义搜索、SQL 生成、表格数据分析的实现方法及在营销分析管道中的集成,还提供了代码示例和实用见解,以帮助初学者提升营销分析能力。

3. 主要内容:

– 引言

– 指出人工智能对营销的影响,强调大型语言模型在营销分析中的潜力及面临的挑战。

– 理解基础

– 介绍大型语言模型及其用途。

– 阐述营销分析的概念和任务。

– 实施语义搜索

– 说明语义搜索的作用和设置方法,提供示例代码。

– SQL 生成与大型语言模型

– 准备数据集。

– 介绍模型微调的过程。

– 表格数据分析

– 准备数据集。

– 进行模型微调。

– 实际管道中的实施

– 给出营销分析管道的示例。

– 结论

– 总结利用大型语言模型提升营销分析能力的要点。

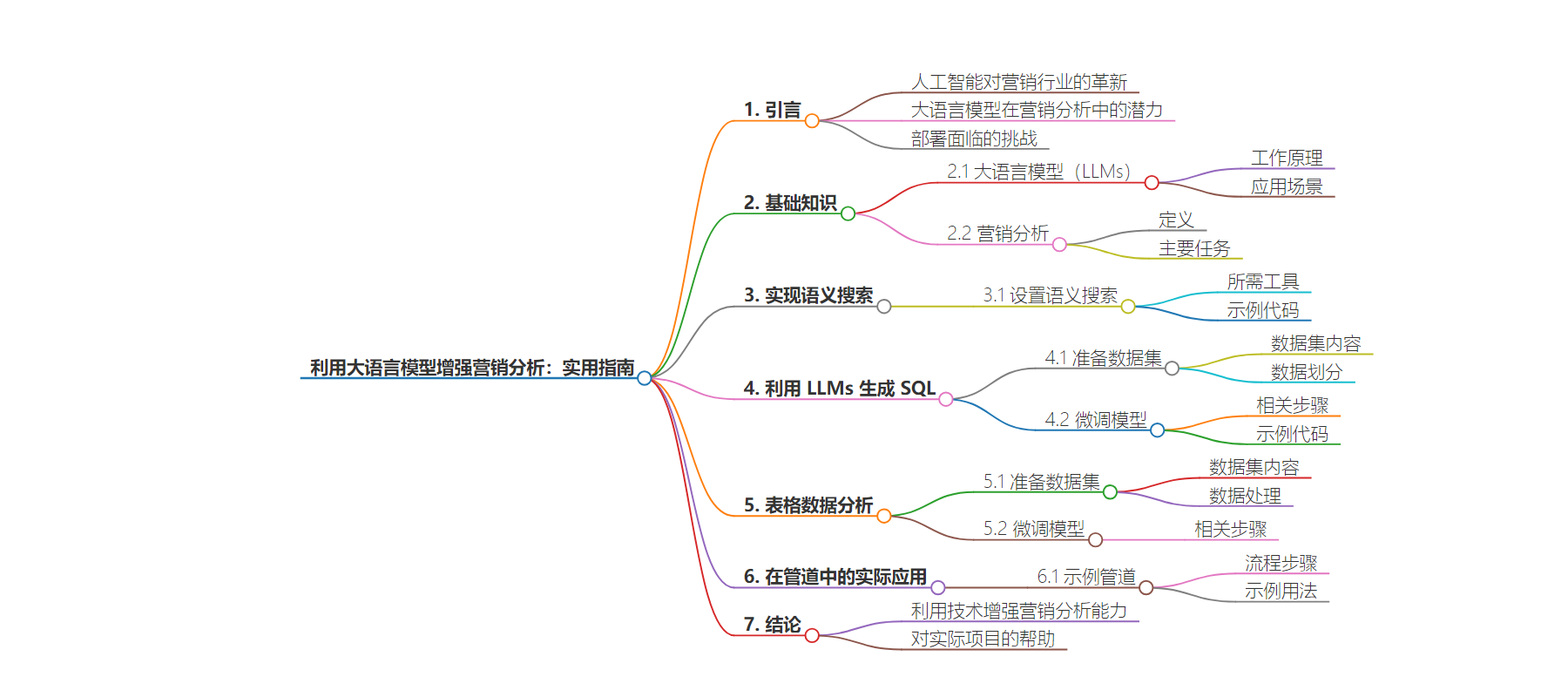

思维导图:

文章来源:javacodegeeks.com

作者:Sai Kumar Arava

发布时间:2024/7/25 11:31

语言:英文

总字数:964字

预计阅读时间:4分钟

评分:81分

标签:人工智能,营销分析,大型语言模型,语义搜索,SQL 生成

以下为原文内容

本内容来源于用户推荐转载,旨在分享知识与观点,如有侵权请联系删除 联系邮箱 media@ilingban.com

Introduction

Artificial Intelligence (AI) has revolutionized various industries, and marketing is no exception. The ability to leverage AI for marketing attribution and budget optimization has become a critical asset for businesses. Recently, large language models (LLMs) such as GPT-4 have demonstrated significant potential in providing valuable marketing insights with reduced time and effort. However, deploying these models effectively requires overcoming several challenges, particularly in domain-specific tasks such as SQL generation and tabular analysis.

In this article, we will explore how beginners can leverage LLMs in marketing analytics pipelines by employing techniques such as semantic search, prompt engineering, and fine-tuning. We will provide example codes and practical insights to help you implement these techniques in your projects.

This is based on my experience with real world data at Adobe having worked with enterprise customers as well as trying to solve their use cases with new progresses in Generative AI.

1. Understanding the Basics

1.1 Large Language Models (LLMs)

LLMs, such as GPT-4 and Llama-2, are advanced machine learning models that understand and generate human-like text based on vast datasets. These models can be used to answer questions, generate code, and analyze data, making them ideal for marketing analytics.

1.2 Marketing Analytics

Marketing analytics involves analyzing data to evaluate the effectiveness of marketing campaigns and strategies. This includes tasks like marketing mix modeling and attribution, which help businesses understand the impact of different marketing channels on sales and conversions.

2. Implementing Semantic Search

Semantic search enhances information retrieval by understanding the intent and context of a query rather than just matching keywords. This is particularly useful in marketing analytics for retrieving relevant documents and data insights.

2.1 Setting Up Semantic Search

To implement semantic search, you need a knowledge base and a text embedding model. We will use OpenAI’s text-embedding-ada-002 and the FAISS library for this purpose.

import openaiimport faissimport numpy as np# Initialize OpenAI APIopenai.api_key = 'your-api-key'# Function to embed textdef embed_text(text): response = openai.Embedding.create( model="text-embedding-ada-002", input=text ) return np.array(response['data'][0]['embedding'])# Creating a knowledge basedocuments = ["Document 1 text", "Document 2 text", "Document 3 text"]embeddings = [embed_text(doc) for doc in documents]index = faiss.IndexFlatL2(512)index.add(np.array(embeddings))# Function to perform semantic searchdef semantic_search(query, k=3): query_embedding = embed_text(query) distances, indices = index.search(np.array([query_embedding]), k) return [documents[i] for i in indices[0]]# Example usagequery = "Explain marketing mix modeling"results = semantic_search(query)print(results)

3. SQL Generation with LLMs

Generating SQL queries from natural language questions is a common task in marketing analytics. Fine-tuning LLMs for this purpose can significantly improve accuracy.

3.1 Preparing the Dataset

First, prepare a dataset with natural language questions and corresponding SQL queries.

# Example datasetdata = [ {"question": "How many customers are from New York?", "sql": "SELECT COUNT(*) FROM customers WHERE city = 'New York';"}, {"question": "What is the average age of customers?", "sql": "SELECT AVG(age) FROM customers;"}]# Split data into training and evaluation setstrain_data = data[:-1]eval_data = data[-1:]

3.2 Fine-Tuning the Model

Fine-tuning involves training the model on a specific dataset to improve its performance on particular tasks.

from transformers import GPT2Tokenizer, GPT2LMHeadModel, Trainer, TrainingArguments# Load pre-trained model and tokenizertokenizer = GPT2Tokenizer.from_pretrained("gpt2")model = GPT2LMHeadModel.from_pretrained("gpt2")# Tokenize datadef tokenize_function(examples): return tokenizer(examples["text"], padding="max_length", truncation=True)# Create a dataset classclass SQLDataset(torch.utils.data.Dataset): def __init__(self, data): self.data = data def __len__(self): return len(self.data) def __getitem__(self, idx): item = self.data[idx] return {"text": item["question"] + " " + item["sql"]}train_dataset = SQLDataset(train_data)eval_dataset = SQLDataset(eval_data)# Fine-tune the modeltraining_args = TrainingArguments( output_dir="./results", evaluation_strategy="epoch", learning_rate=2e-5, per_device_train_batch_size=8, per_device_eval_batch_size=8, num_train_epochs=3, weight_decay=0.01,)trainer = Trainer( model=model, args=training_args, train_dataset=train_dataset, eval_dataset=eval_dataset, tokenizer=tokenizer,)trainer.train()

4. Tabular Data Analysis

Analyzing tabular data is crucial for tasks like attribution modeling in marketing. Fine-tuning LLMs to interpret and analyze tables can enhance their effectiveness.

4.1 Preparing the Dataset

Create a dataset with examples of tables and their corresponding analyses.

# Example tabular datadata = [ {"table": "Model: Lead, Channel: Display, Change: -82, Quality: 63, Frequency: -4, Cannibalization: -33", "analysis": "The absolute change of Display is -82%, targeting quality is a contributor with a score of 63%, contact frequency is not a factor with -4%, and ad cannibalization is a mitigating factor with -33%."},]# Tokenize datadef tokenize_function(examples): return tokenizer(examples["table"] + " " + examples["analysis"], padding="max_length", truncation=True)# Create a dataset classclass TabularDataset(torch.utils.data.Dataset): def __init__(self, data): self.data = data def __len__(self): return len(self.data) def __getitem__(self, idx): item = self.data[idx] return {"text": item["table"] + " " + item["analysis"]}}train_dataset = TabularDataset(data)

4.2 Fine-Tuning the Model

Fine-tuning the model on tabular data analysis tasks can significantly improve performance.

trainer = Trainer( model=model, args=training_args, train_dataset=train_dataset, tokenizer=tokenizer,)trainer.train()

5. Practical Implementation in Pipelines

Integrating these techniques into your marketing analytics pipelines involves setting up a robust architecture that combines semantic search, SQL generation, and tabular data analysis.

5.1 Example Pipeline

Here’s an example of how you might set up a pipeline to handle these tasks.

def marketing_analytics_pipeline(query): # Step 1: Semantic Search relevant_docs = semantic_search(query) # Step 2: SQL Generation sql_query = generate_sql(query) # Step 3: Execute SQL Query (Assuming a function execute_sql exists) results = execute_sql(sql_query) # Step 4: Tabular Data Analysis analysis = analyze_table(results) return analysis# Example usagequery = "What is the impact of display ads on sales?"result = marketing_analytics_pipeline(query)print(result)

Conclusion

By leveraging LLMs with techniques like semantic search, prompt engineering, and fine-tuning, beginners can significantly enhance their marketing analytics capabilities. The provided examples and practical insights should help you implement these techniques in your own projects, enabling more efficient and accurate marketing decisions.

References

Here are some references to the elaborate detailed work at Adobe published at IJCI Online. Presentation available at Video link