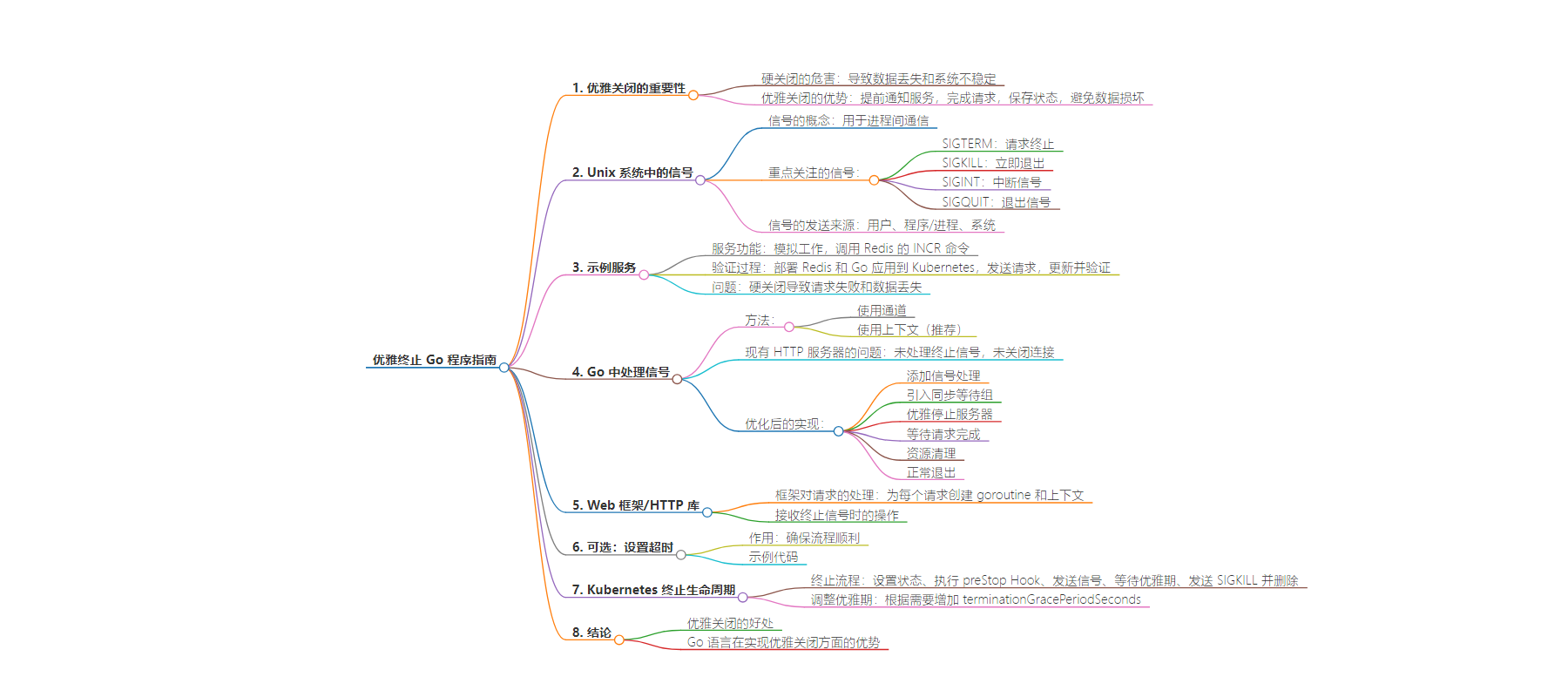

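Reading guide summary

Keywords:

`Go programs`, `graceful shutdown`, `signal handling`, `Kubernetes`, `data integrity`

Summary:

This article explains how to implement graceful shutdown in Go programs, particularly on Kubernetes. It stresses why graceful shutdown matters for avoiding data loss and system instability, and uses example code and explanations of the underlying concepts to show how to handle signals and make sure in-flight requests are fully processed. It concludes that graceful shutdown protects data integrity.

Main points:

- Why graceful shutdown matters
  - Just as a hard power-off can damage a computer, a hard shutdown in software has harmful consequences that a graceful shutdown avoids.
- Foundations of graceful shutdown
  - Introduces Unix signals such as SIGTERM, SIGKILL, SIGINT, and SIGQUIT.
  - Builds a simple example service and shows that it loses data without graceful shutdown.
- Handling signals in Go
  - Presents two ways to handle signals: channels and contexts.
  - Points out the problems in the initial HTTP server and gives improved code.
- Frameworks and timeouts
  - Describes how web frameworks handle requests.
  - Explains how a timeout keeps process termination on track.
- Kubernetes termination lifecycle
  - Describes how Kubernetes terminates a Pod and the related settings.
- Conclusion
  - Graceful shutdown protects data integrity, and Go is well suited to implementing it.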


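Article URL: https://www.freecodecamp.org/news/graceful-shutdowns-k8s-go/

Source: freecodecamp.org

Author: Alex Pliutau

Published: 2024/8/13 20:30

Language: English

Word count: 1334

Estimated reading time: 6 minutes

Rating: 89

Tags: Go, graceful shutdown, Kubernetes, data integrity, system stability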


Have you ever pulled the power cord out of your computer in frustration? While this might seem like a quick solution to certain problems, it can lead to data loss and system instability.

In the world of software, a similar concept exists: the hard shutdown. This abrupt termination can cause problems just like its physical counterpart. Thankfully, there’s a better way: the graceful shutdown.

For applications deployed in orchestrated environments (like Kubernetes), graceful handling of termination signals is crucial.

By integrating graceful shutdown, you provide advance notification to the service. This enables it to complete ongoing requests, potentially save state information to disk, and ultimately avoid data corruption during shutdown.

In this guide, we’ll dive into the world of graceful shutdowns, specifically focusing on their implementation in Go applications running on Kubernetes.

Signals in Unix Systems

One of the key tools for achieving graceful shutdowns in Unix-based systems is the concept of signals. In basic terms, a signal is a simple way for one process (or the kernel) to communicate one specific thing to another process.

By understanding how signals work, you can leverage them to implement controlled termination procedures within your applications, ensuring a smooth and data-safe shutdown process.

There are many signals, and you can find them here. But our concern in this article is only shutdown signals:

- SIGTERM: sent to a process to request its termination. It is the most commonly used, and the one we’ll focus on later.
- SIGKILL: “quit immediately”. It cannot be caught, blocked, or ignored.
- SIGINT: interrupt signal (such as Ctrl+C).
- SIGQUIT: quit signal (such as Ctrl+\).

These signals can be sent by the user (Ctrl+C / Ctrl+\), by another program or process, or by the system itself (kernel / OS). For example, SIGSEGV, also known as a segmentation fault, is sent by the OS.
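In Go, these signals are available as constants in the `syscall` package. The following is a minimal sketch, assuming a Unix-like system, that lists the shutdown signals discussed above together with their numbers. The `shutdownSignal` type and the `shutdownSignals` function are hypothetical names used only for illustration.

```go
package main

import (
	"fmt"
	"syscall"
)

// shutdownSignal pairs a signal with whether a process can handle it.
// This type is illustrative and not part of any library.
type shutdownSignal struct {
	Sig       syscall.Signal
	Name      string
	Catchable bool
}

// shutdownSignals lists the shutdown-related signals from the text.
func shutdownSignals() []shutdownSignal {
	return []shutdownSignal{
		{syscall.SIGTERM, "SIGTERM", true},
		{syscall.SIGINT, "SIGINT", true},
		{syscall.SIGQUIT, "SIGQUIT", true},
		{syscall.SIGKILL, "SIGKILL", false}, // cannot be caught or ignored
	}
}

func main() {
	for _, s := range shutdownSignals() {
		fmt.Printf("%-8s number=%d catchable=%t\n", s.Name, int(s.Sig), s.Catchable)
	}
}
```

Only the catchable signals can trigger a graceful shutdown, which is why the rest of the article concentrates on SIGTERM.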

Our Guinea Pig Service

To explore the world of graceful shutdowns in a practical setting, let’s create a simple service we can experiment with. This “guinea pig” service will have a single endpoint that simulates some real-world work (we’ll add a slight delay) by calling Redis’s INCR command. We’ll also provide a basic Kubernetes configuration to test how the platform handles termination signals.

The ultimate goal: ensure our service gracefully handles shutdowns without losing any requests/data. By comparing the number of requests sent in parallel with the final counter value in Redis, we’ll be able to verify if our graceful shutdown implementation is successful.

We won’t go into the details of setting up the Kubernetes cluster and Redis, but you can find the full setup in this GitHub repository.

The verification process is the following:

- Deploy Redis and the Go application to Kubernetes.
- Use vegeta to send 1000 requests (25/s over 40 seconds).
- While vegeta is running, trigger a Kubernetes Rolling Update by updating the image tag.
- Connect to Redis to verify the “counter”; it should be 1000.

Let’s start with our base Go HTTP Server.

hard-shutdown/main.go:

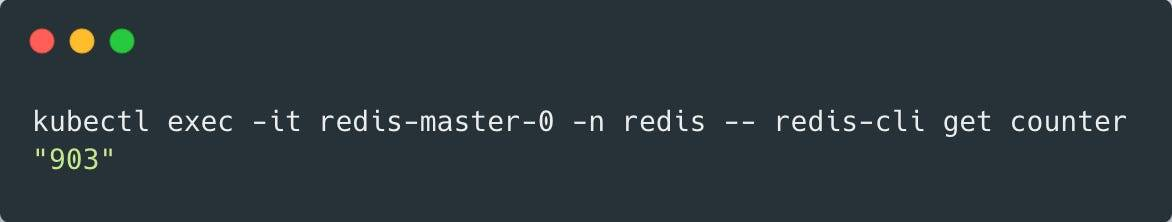

```go
package main

import (
	"net/http"
	"os"
	"time"

	"github.com/go-redis/redis"
)

func main() {
	redisdb := redis.NewClient(&redis.Options{
		Addr: os.Getenv("REDIS_ADDR"),
	})

	server := http.Server{
		Addr: ":8080",
	}

	http.HandleFunc("/incr", func(w http.ResponseWriter, r *http.Request) {
		// The work continues in the background after the response is sent.
		go processRequest(redisdb)
		w.WriteHeader(http.StatusOK)
	})

	// No signal handling: SIGTERM ends the process and every running goroutine.
	server.ListenAndServe()
}

func processRequest(redisdb *redis.Client) {
	// Simulate slow work before incrementing the counter.
	time.Sleep(time.Second * 5)
	redisdb.Incr("counter")
}
```

When we run our verification procedure using this code, we’ll see that some requests fail and the counter is less than 1000 (the number may vary from run to run).

Which clearly means that we lost some data during the rolling update. 😢

How to Handle Signals in Go

Go provides the os/signal package, which allows you to handle Unix signals. It’s important to note that by default, the SIGINT and SIGTERM signals cause the Go program to exit. To keep our Go application from exiting so abruptly, we need to handle incoming signals ourselves.

There are two options to do so.

The first is using a channel:

```go
c := make(chan os.Signal, 1)
signal.Notify(c, syscall.SIGTERM)
```

The second is using a context (the preferred approach nowadays):

```go
ctx, stop := signal.NotifyContext(context.Background(), syscall.SIGTERM)
defer stop()
```

NotifyContext returns a copy of the parent context that is marked done (its Done channel is closed) when one of the listed signals arrives, when the returned stop() function is called, or when the parent context’s Done channel is closed, whichever happens first.

There are a few problems with our current implementation of the HTTP server:

- We have a slow processRequest goroutine, and since we don’t handle the termination signal, the program exits automatically. This means that all running goroutines are terminated as well.
- The program doesn’t close any connections.

Let’s rewrite it.

graceful-shutdown/main.go:

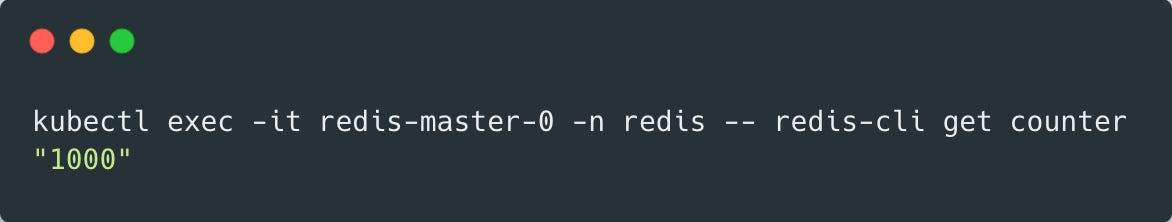

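```go
package main

import (
	"context"
	"log"
	"net/http"
	"os"
	"os/signal"
	"sync"
	"syscall"
	"time"

	"github.com/go-redis/redis"
)

// wg tracks in-flight processRequest goroutines.
var wg sync.WaitGroup

func main() {
	// ctx is cancelled when SIGTERM arrives.
	ctx, stop := signal.NotifyContext(context.Background(), syscall.SIGTERM)
	defer stop()

	// Same client and server setup as in the hard-shutdown version.
	redisdb := redis.NewClient(&redis.Options{Addr: os.Getenv("REDIS_ADDR")})
	server := http.Server{Addr: ":8080"}

	http.HandleFunc("/incr", func(w http.ResponseWriter, r *http.Request) {
		wg.Add(1)
		go processRequest(redisdb)
		w.WriteHeader(http.StatusOK)
	})

	// Serve in the background so main can wait for the signal.
	go server.ListenAndServe()

	<-ctx.Done() // block until SIGTERM

	// Stop accepting new connections and wait for active handlers to return.
	if err := server.Shutdown(context.Background()); err != nil {
		log.Fatalf("could not shutdown: %v\n", err)
	}

	wg.Wait() // wait for background processRequest goroutines
	redisdb.Close()
	os.Exit(0)
}

func processRequest(redisdb *redis.Client) {
	defer wg.Done()
	time.Sleep(time.Second * 5)
	redisdb.Incr("counter")
}
```

Here’s the summary of updates: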

- Added signal.NotifyContext to listen for the SIGTERM termination signal.
- Introduced a sync.WaitGroup to track in-flight requests (processRequest goroutines).
- Wrapped the server in a goroutine and used server.Shutdown with a context to gracefully stop accepting new connections.
- Used wg.Wait() to ensure all in-flight requests (processRequest goroutines) finish before proceeding.
- Resource cleanup: Added redisdb.Close() to properly close the Redis connection before exiting.
- Clean exit: Used os.Exit(0) to indicate a successful termination.

Now, if we repeat our verification process, we will see that all 1000 requests are processed correctly. 🎉
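The effect of `wg.Wait()` can also be reproduced without Kubernetes or Redis. The sketch below is a simplified stand-in, not the article's setup: an in-memory counter replaces the Redis key, a local listener replaces the cluster, and the hypothetical `runAndShutdown` function compares shutting down with and without waiting for the background goroutines.

```go
package main

import (
	"context"
	"fmt"
	"net"
	"net/http"
	"sync"
	"sync/atomic"
	"time"
)

// runAndShutdown sends n requests whose work continues in background
// goroutines, shuts the server down, and reports how many increments
// were recorded. With wait=false it skips wg.Wait(), as a hard exit would.
func runAndShutdown(n int, wait bool) (int64, error) {
	var (
		wg      sync.WaitGroup
		counter int64 // stand-in for the Redis "counter" key
	)
	handler := http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		wg.Add(1)
		go func() {
			defer wg.Done()
			time.Sleep(200 * time.Millisecond) // simulated slow work
			atomic.AddInt64(&counter, 1)
		}()
		w.WriteHeader(http.StatusOK)
	})

	ln, err := net.Listen("tcp", "127.0.0.1:0") // any free local port
	if err != nil {
		return 0, err
	}
	srv := &http.Server{Handler: handler}
	go srv.Serve(ln)

	url := "http://" + ln.Addr().String() + "/incr"
	for i := 0; i < n; i++ {
		resp, err := http.Get(url)
		if err != nil {
			return 0, err
		}
		resp.Body.Close()
	}

	// Shutdown waits for handlers, but not for goroutines they started.
	if err := srv.Shutdown(context.Background()); err != nil {
		return 0, err
	}
	if wait {
		wg.Wait()
	}
	return atomic.LoadInt64(&counter), nil
}

func main() {
	lost, _ := runAndShutdown(20, false)
	kept, _ := runAndShutdown(20, true)
	fmt.Printf("without wg.Wait: %d/20, with wg.Wait: %d/20\n", lost, kept)
}
```

The point of the comparison is that `server.Shutdown` alone is not enough here: the handlers return immediately, so only the WaitGroup knows about the work that is still running.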

Web Frameworks / HTTP Library

Frameworks like Echo, Gin, Fiber and others handle each incoming request in its own goroutine. The framework gives the request a context and then calls your function / handler according to the routing you set up. In our case, it would be the anonymous function given to HandleFunc for the “/incr” path.

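When you intercept a SIGTERM signal and ask your framework to shut down gracefully, two important things happen (to oversimplify):

- The framework stops accepting new incoming requests.
- It waits for requests that are already in flight to finish, which implicitly means waiting for their goroutines to end.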

Note: Kubernetes also stops directing incoming traffic from the loadbalancer to your pod once it has labelled it as Terminating.
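For the standard library's `http.Server`, this behaviour can be observed with a small experiment. The sketch below is illustrative only: the `shutdownDuringRequest` function is a hypothetical helper, and the 300 ms delay is an arbitrary stand-in for a slow handler. It calls Shutdown while a request is still being handled, then tries a new request afterwards.

```go
package main

import (
	"context"
	"fmt"
	"net"
	"net/http"
	"sync"
	"time"
)

// shutdownDuringRequest calls Shutdown while a slow request is in flight.
// It returns the status code of that request and whether a request sent
// after Shutdown returned was refused.
func shutdownDuringRequest() (status int, refusedAfter bool, err error) {
	ln, err := net.Listen("tcp", "127.0.0.1:0") // any free local port
	if err != nil {
		return 0, false, err
	}

	started := make(chan struct{})
	var once sync.Once
	srv := &http.Server{Handler: http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		once.Do(func() { close(started) }) // tell the caller the handler is running
		time.Sleep(300 * time.Millisecond) // simulated slow handler
		w.WriteHeader(http.StatusOK)
	})}
	go srv.Serve(ln)

	url := "http://" + ln.Addr().String() + "/"
	result := make(chan int, 1)
	go func() {
		resp, err := http.Get(url)
		if err != nil {
			result <- 0
			return
		}
		resp.Body.Close()
		result <- resp.StatusCode
	}()

	<-started // the request is now in flight
	if err := srv.Shutdown(context.Background()); err != nil {
		return 0, false, err
	}
	status = <-result

	// The listener is closed, so a new request should fail to connect.
	resp, getErr := http.Get(url)
	if getErr == nil {
		resp.Body.Close()
	}
	return status, getErr != nil, nil
}

func main() {
	status, refused, err := shutdownDuringRequest()
	fmt.Println(status, refused, err)
}
```

Frameworks built on net/http typically expose the same behaviour through their own shutdown method, but the exact method names differ, so check the documentation of the framework you use.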

Optional: Shutdown Timeout

Terminating a process can be complex, especially if there are many steps involved, like closing connections. To ensure things run smoothly, you can set a timeout. This timeout acts as a safety net: if the shutdown takes longer than expected, the process is forced to exit instead of hanging.

```go
shutdownCtx, cancel := context.WithTimeout(context.Background(), 10*time.Second)
defer cancel()

// Shutdown returns the context's error if the deadline passes
// before all connections have finished.
if err := server.Shutdown(shutdownCtx); err != nil {
	if errors.Is(err, context.DeadlineExceeded) {
		log.Fatalln("timeout exceeded, forcing shutdown")
	}
	log.Fatalf("could not shutdown: %v\n", err)
}

os.Exit(0)
```

Kubernetes Termination Lifecycle

Since we used Kubernetes to deploy our service, let’s dive deeper into how it terminates the pods. Once Kubernetes decides to terminate the pod, the following events will take place:

- The pod is set to the “Terminating” state and removed from the endpoints list of all Services.
- The preStop hook is executed, if defined.
- The SIGTERM signal is sent to the containers in the pod. But hey, now our application knows what to do!
- Kubernetes waits for the grace period (terminationGracePeriodSeconds), which is 30s by default. The countdown starts when termination begins, so it includes the time spent in the preStop hook.
- The SIGKILL signal is sent to any containers that are still running, and the pod is removed.

As you can see, if you have a long-running termination process, it may be necessary to increase the terminationGracePeriodSeconds setting. This allows your application enough time to shut down gracefully.

Conclusion

Graceful shutdowns safeguard data integrity, maintain a seamless user experience, and optimize resource management. With its rich standard library and emphasis on concurrency, Go empowers developers to effortlessly integrate graceful shutdown practices – a necessity for applications deployed in containerized or orchestrated environments like Kubernetes.

You can find the Go code and Kubernetes manifests in this GitHub repository.