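Baoyue (包阅) Reading Guide Summary

1. Keywords:

– User authentication

– Generative AI

– Data privacy

– RAG

– Security design pattern

2. Summary:

The article discusses how to integrate user authentication into generative AI applications that access databases. It notes that although RAG is widely used, it carries data privacy risks. Using Cymbal Air as an example, it proposes a design pattern that protects data by restricting access through user-authenticated tool functions.

3. Main points:

– Generative AI offers transformative potential for enterprises, but integrating it raises user data protection concerns

– RAG can improve the accuracy of generative AI models, but data access carries risk

– The Cymbal Air case

– The AI assistant under development needs access to several databases, which creates a risk of information leaks

– The solution

– Limit what the agent can access

– Define specific tool functions and attach a user authentication header

– Have the agent retrieve information through user-authenticated tool functions to keep data secure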

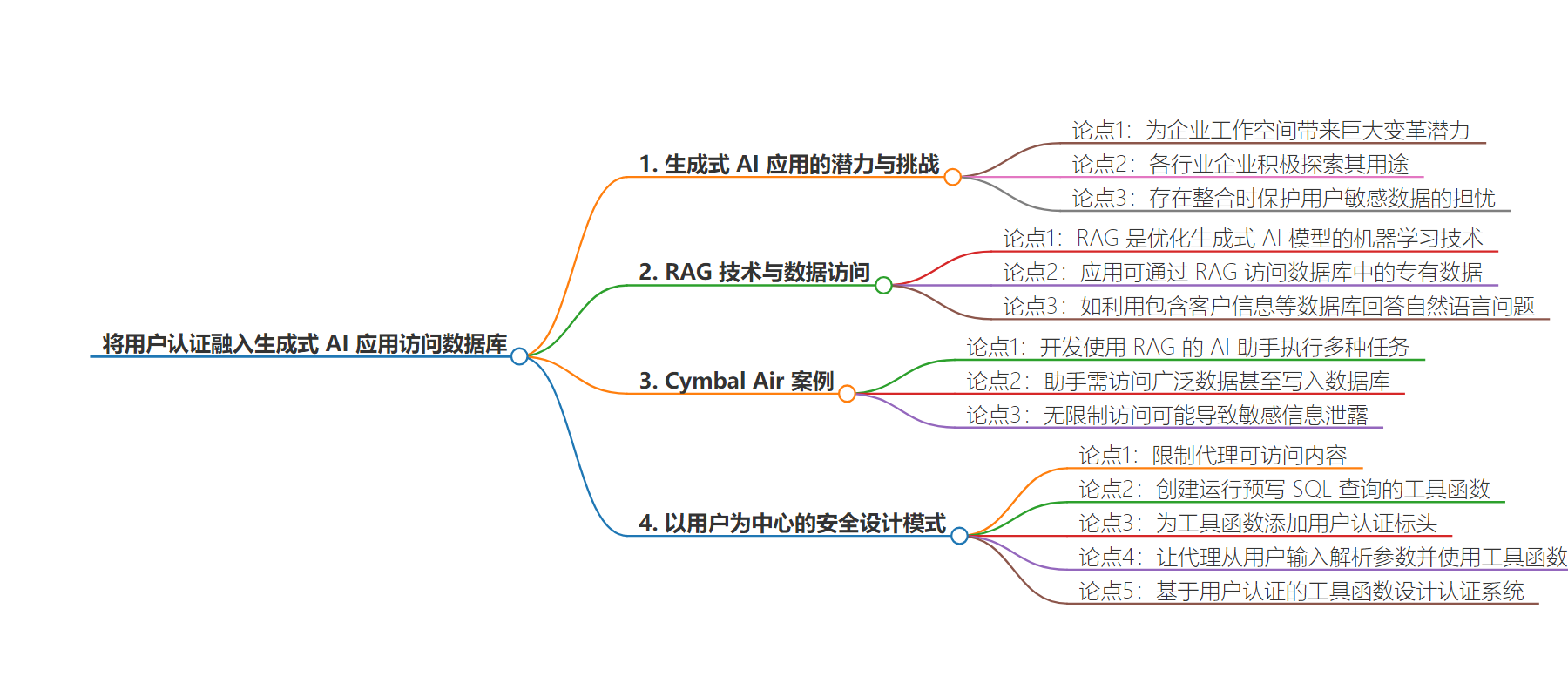

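Mind map:

Source: cloud.google.com

Authors: Wenxin Du, Jessica Chan

Published: 2024/7/19 0:00

Language: English

Word count: 1386

Estimated reading time: 6 minutes

Score: 81

Tags: Generative AI, RAG, user authentication, data privacy, database security

The original article follows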


Generative AI agents introduce immense potential to transform enterprise workspaces. Enterprises from almost every industry are exploring the possibilities of generative AI, adopting AI agents for purposes ranging from internal productivity to customer-facing support. However, while these AI agents can efficiently interact with data already in your databases to provide summaries, answer complex questions, and generate insightful content, concerns persist around safeguarding sensitive user data when integrating this technology.

The data privacy dilemma

Before discussing data privacy, we should define an important concept: Retrieval-Augmented Generation (RAG), a machine-learning technique that improves the accuracy and reliability of generative AI models. With RAG, an application retrieves information from a source (such as a database), adds it to the prompt sent to the large language model (LLM), and uses it to generate more grounded and accurate responses.
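To make the retrieve, augment, generate flow concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `FLIGHTS` rows, the flight numbers, the keyword-match retrieval, and the `fake_llm` stub that stands in for a real model call. It is not the implementation used by any particular product.

```python
from __future__ import annotations

# Hypothetical rows standing in for a real flights database.
FLIGHTS = [
    {"flight": "CY 123", "origin": "SFO", "destination": "SEA", "departs": "09:15"},
    {"flight": "CY 456", "origin": "SFO", "destination": "JFK", "departs": "13:40"},
]


def retrieve(question: str) -> list[dict]:
    """Retrieve: pick rows whose flight number appears in the question (toy match)."""
    q = question.lower()
    return [row for row in FLIGHTS if row["flight"].lower() in q]


def augment(question: str, rows: list[dict]) -> str:
    """Augment: place the retrieved rows into the prompt as context."""
    context = "\n".join(
        f"- {r['flight']}: {r['origin']} -> {r['destination']}, departs {r['departs']}"
        for r in rows
    )
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


def generate(prompt: str, llm) -> str:
    """Generate: hand the augmented prompt to the model."""
    return llm(prompt)


def fake_llm(prompt: str) -> str:
    """Stub model (assumption): reports how many context rows it received."""
    n = sum(1 for line in prompt.splitlines() if line.startswith("- "))
    return f"(answer grounded in {n} retrieved row(s))"


question = "When does CY 123 depart?"
prompt = augment(question, retrieve(question))
answer = generate(prompt, fake_llm)
print(prompt)
print(answer)
```

Note that the model only ever sees what `retrieve` returns, which is why the scope of retrieval matters so much for privacy.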

Many developers have adopted RAG to enable their applications to use the often-proprietary data in their databases. For example, an application may use a RAG agent to access databases containing customer information, proprietary research, or confidential legal documents to correctly answer natural language questions with company context.

A RAG use case: Cymbal Air

As with any data access paradigm, there is risk without a careful approach. A hypothetical airline we’ll call Cymbal Air is developing an AI assistant that uses RAG to handle these tasks:

- Book a flight ticket for the user (write to the database)

- List the user’s booked tickets (read from a user-privacy database)

- Get flight information for the user, including schedule, location, and seat information (read from a proprietary database)

This assistant needs access to a wide range of operational and user data, and even potentially to write information to the database. However, giving the AI unrestricted access to the database could lead to accidental leaks of sensitive information about a different user. How do we ensure data safety while letting the AI assistant retrieve information from the database?

A user-centric security design pattern

One way of tackling this problem is by putting limits on what the agent can access. Rather than give the foundation model unbounded access, we can define specific tool functions that the agent uses to access database information securely and predictably. The key steps are:

- Create a tool function that runs a pre-written SQL query

- Attach a user authentication header to the tool function

- When a user asks for database information, the agent parses parameters from the user input and feeds them to the tool function

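In essence, the authentication system is built on user-authenticated tool functions, and the authentication itself is opaque to the foundation model agent: the model chooses which tool to call and with which parameters, but it never sees or sets the user's credentials.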
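The three steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the article's actual implementation: the `tickets` schema, the `TOKENS` lookup table, and the names `verify_token` and `make_list_tickets_tool` are all hypothetical, and an in-memory `sqlite3` database stands in for the real one. A real system would verify a signed token rather than look it up in a dictionary.

```python
from __future__ import annotations

import sqlite3
from typing import Optional

# Hypothetical token table (assumption); real systems verify signed tokens.
TOKENS = {"token-alice": "alice", "token-bob": "bob"}

# Step 1: a pre-written, parameterized query. The model never writes SQL.
LIST_TICKETS_SQL = (
    "SELECT flight, seat FROM tickets "
    "WHERE user_id = ? AND (? IS NULL OR flight = ?)"
)


def make_db() -> sqlite3.Connection:
    """Build a toy in-memory database with a hypothetical schema."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE tickets (user_id TEXT, flight TEXT, seat TEXT)")
    conn.executemany(
        "INSERT INTO tickets VALUES (?, ?, ?)",
        [("alice", "CY 123", "12A"), ("bob", "CY 456", "3C")],
    )
    return conn


def verify_token(headers: dict) -> str:
    """Map an 'Authorization: Bearer <token>' header to a user id, or refuse."""
    scheme, _, token = headers.get("Authorization", "").partition(" ")
    if scheme != "Bearer" or token not in TOKENS:
        raise PermissionError("missing or invalid user token")
    return TOKENS[token]


def make_list_tickets_tool(conn: sqlite3.Connection, headers: dict):
    """Step 2: bind the user's auth header to the tool, outside the model's view."""

    def list_tickets(flight: Optional[str] = None) -> list:
        # The user id comes from the verified header, never from model output.
        user_id = verify_token(headers)
        return conn.execute(LIST_TICKETS_SQL, (user_id, flight, flight)).fetchall()

    return list_tickets


# Step 3: the agent parses parameters (here, an optional flight number)
# from the user's request and passes only those to the tool.
conn = make_db()
alice_tool = make_list_tickets_tool(conn, {"Authorization": "Bearer token-alice"})
print(alice_tool())                 # Alice's tickets only
print(alice_tool(flight="CY 456"))  # Bob's flight: nothing is returned to Alice
```

The design choice worth noting is that `user_id` is not a parameter of `list_tickets`. Even if the model is prompted into asking for another user's data, the only values it controls are the query filters, and the `WHERE user_id = ?` clause is always filled from the authenticated header.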