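Baoyue reading guide summary

1. Keywords: LangChain, tool interfaces, documentation improvements, inputs and outputs, error handling

2. Summary: Over the past few weeks the LangChain team has focused on improving its core tool interfaces and documentation. The work covers turning code into tools, handling different types of inputs and outputs, making tools more reliable, and handling errors. The team plans to keep refining this material.

3. Main points:

- The role of tools in LLMs
  - Utilities that can be called by a model
  - Have well-defined schemas
- Improvements to core tool interfaces and documentation
  - Easier to turn any code into a tool
  - Handling different types of inputs and complex outputs
  - Creating more reliable tools using architectures
- Simplified tool integration
  - Any LangChain runnable can be turned into a tool
- Handling the sources of tool inputs
- Enriching tool outputs
- Handling tool errors
- Future plans
  - More guides and best practices for defining tools and designing architectures
  - Refreshed documentation for tool and toolkit integrations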

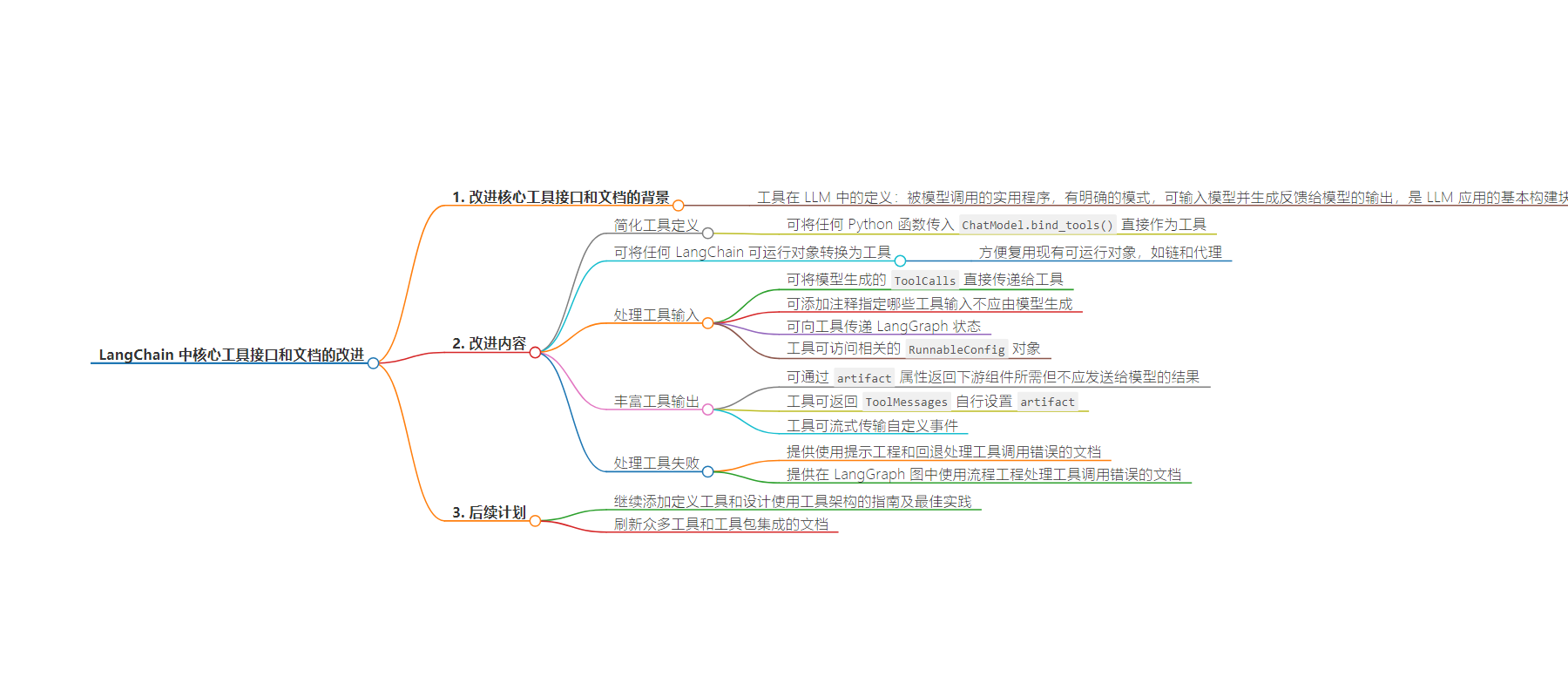

思维导图:

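Article URL: https://blog.langchain.dev/improving-core-tool-interfaces-and-docs-in-langchain/

Source: blog.langchain.dev

Author: LangChain

Published: 2024/7/19 7:10

Language: English

Word count: 910

Estimated reading time: 4 minutes

Score: 91

Tags: LangChain, AI development, tool integration, documentation improvements, LLM applications

The original article follows.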


“Tools” in the context of LLMs are utilities designed to be called by a model. They have well-defined schemas that can be input to a model and generate outputs that can be fed back to the model. Tools are needed whenever you want a model to control parts of your code or call out to external APIs, making them an essential building block of LLM applications.

Over the past few weeks, we’ve focused on improving our core tool interfaces and documentation. These updates make it easier to:

- Turn any code into a tool

- Pass different types of inputs to tools

- Return complex outputs from tools

- Create more reliable tools using architectures

Let’s dive into these improvements for integrating, using, and managing tools in LangChain below.

Tool integration can be complex, often requiring manual effort like writing custom wrappers or interfaces. At LangChain, we’ve reduced complexity starting from tool definition.

- You can now pass any Python function into `ChatModel.bind_tools()`, which allows normal Python functions to be used directly as tools. This simplifies how you define tools, as LangChain will just parse type annotations and docstrings to infer required schemas. Below is an example where a model must pull a list of addresses from an input and pass it along into a tool:

```python
from typing import List
from typing_extensions import TypedDict

from langchain_anthropic import ChatAnthropic


class Address(TypedDict):
    street: str
    city: str
    state: str


def validate_user(user_id: int, addresses: List[Address]) -> bool:
    """Validate user using historical addresses.

    Args:
        user_id: (int) the user ID.
        addresses: Previous addresses.
    """
    return True


llm = ChatAnthropic(
    model="claude-3-sonnet-20240229"
).bind_tools([validate_user])

result = llm.invoke(
    "Could you validate user 123? They previously lived at "
    "123 Fake St in Boston MA and 234 Pretend Boulevard in "
    "Houston TX."
)
result.tool_calls
```

```
[{'name': 'validate_user',
  'args': {'user_id': 123,
   'addresses': [{'street': '123 Fake St', 'city': 'Boston', 'state': 'MA'},
    {'street': '234 Pretend Boulevard', 'city': 'Houston', 'state': 'TX'}]},
  'id': 'toolu_011KnPwWqKuyQ3kMy6McdcYJ',
  'type': 'tool_call'}]
```

The associated LangSmith trace shows how the tool schema was populated behind the scenes, including the parsing of the function docstring into top-level and parameter-level descriptions.

Learn more about creating tools from functions in our how-to guides for Python and JavaScript.
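To make the idea of inferring a schema from type annotations and docstrings more concrete, here is a minimal standard-library sketch. It is not LangChain's implementation: `infer_tool_schema` and the shape of the dictionary it returns are hypothetical, and the docstring parsing only handles a simple Google-style `Args:` section.

```python
import inspect
from typing import Any, Dict, List, get_type_hints


def infer_tool_schema(func) -> Dict[str, Any]:
    """Build a simple tool schema from a function's signature and docstring.

    Hypothetical helper for illustration only; not a LangChain API.
    """
    hints = get_type_hints(func)
    hints.pop("return", None)  # the return type is not a tool input

    doc = inspect.getdoc(func) or ""
    summary, _, args_section = doc.partition("Args:")

    # Collect "name: description" lines from the Args section.
    arg_docs: Dict[str, str] = {}
    for line in args_section.splitlines():
        name, sep, text = line.strip().partition(":")
        if sep and name in hints:
            arg_docs[name] = text.strip()

    return {
        "name": func.__name__,
        "description": summary.strip(),
        "parameters": {
            name: {
                "type": tp.__name__ if isinstance(tp, type) else str(tp),
                "description": arg_docs.get(name, ""),
            }
            for name, tp in hints.items()
        },
    }


def validate_user(user_id: int, addresses: List[dict]) -> bool:
    """Validate user using historical addresses.

    Args:
        user_id: The user ID.
        addresses: Previous addresses.
    """
    return True


schema = infer_tool_schema(validate_user)
print(schema)
```

The function name becomes the tool name, the first part of the docstring becomes the top-level description, and each annotated parameter gets a type and a parameter-level description.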

- Additionally, any LangChain runnable can now be cast into a tool, making it easier to re-use existing LangChain runnables, including chains and agents. Reusing existing runnables reduces redundancy and allows you to deploy new functionality faster. For example, below we equip a LangGraph agent with another “user info agent” as a tool, allowing it to delegate relevant questions to the secondary agent.

```python
from typing import List, Literal
from typing_extensions import TypedDict

from langchain_openai import ChatOpenAI
from langgraph.prebuilt import create_react_agent

llm = ChatOpenAI(temperature=0)
user_info_agent = create_react_agent(llm, [validate_user])


class Message(TypedDict):
    role: Literal["human"]
    content: str


agent_tool = user_info_agent.as_tool(
    arg_types={"messages": List[Message]},
    name="user_info_agent",
    description="Ask questions about users.",
)

agent = create_react_agent(llm, [agent_tool])
```

See how to use runnables as tools in our Python and JavaScript docs.

Tools must handle diverse inputs coming from varying data sources and user interactions. Validating these inputs can be cumbersome, especially determining which inputs should be generated by the model versus provided by other sources.

- In LangChain, you can now pass model-generated ToolCalls directly to tools (see Python, JS docs). While this streamlines executing tools called by a model, there are also cases where we don’t want all inputs to the tool to be generated by the model. For example, if our tool requires some type of user ID, this input will likely come from elsewhere in our code and not from a model. For these cases, we’ve added annotations that specify which tool inputs shouldn’t be generated by the model. See docs here (Python, JS).

- We’ve also added documentation on how to pass LangGraph state to tools in Python and JavaScript, and made it possible for tools to access the `RunnableConfig` object associated with a run. This is useful for parametrizing tool behavior, passing global params through a chain, and accessing metadata like Run IDs, all of which provide more control over tool management. Read the docs (Python, JS).
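The split between model-generated inputs and inputs supplied by application code can be sketched in plain Python. This illustrates the idea only and is not LangChain's implementation: the `Injected` marker, `split_params`, and `run_tool` are hypothetical names (in LangChain's Python package, the corresponding annotation is, to our knowledge, `InjectedToolArg` from `langchain_core.tools`).

```python
from typing import Annotated, Any, Dict, List, get_type_hints


class Injected:
    """Hypothetical marker: this argument is supplied by application code."""


def split_params(func):
    """Return (model_visible, injected) parameter names for a function."""
    hints = get_type_hints(func, include_extras=True)
    hints.pop("return", None)
    visible, injected = [], []
    for name, tp in hints.items():
        # Annotated[...] types carry their extra markers in __metadata__.
        metadata = getattr(tp, "__metadata__", ())
        (injected if Injected in metadata else visible).append(name)
    return visible, injected


def run_tool(func, model_args: Dict[str, Any], runtime_args: Dict[str, Any]):
    """Call func, taking injected arguments only from runtime_args."""
    visible, injected = split_params(func)
    # Drop anything the model sent for a parameter it should not control.
    kwargs = {k: v for k, v in model_args.items() if k in visible}
    kwargs.update({k: runtime_args[k] for k in injected})
    return func(**kwargs)


def update_favorite_pets(
    pets: List[str], user_id: Annotated[str, Injected]
) -> str:
    """Record a user's favorite pets."""
    return f"{user_id}: {', '.join(pets)}"


visible, injected = split_params(update_favorite_pets)
result = run_tool(
    update_favorite_pets,
    model_args={"pets": ["cat", "dog"], "user_id": "spoofed"},
    runtime_args={"user_id": "user-123"},
)
print(visible, injected, result)
```

Only the `visible` parameters would be advertised to the model in the tool schema. The `user_id` value always comes from the runtime side, even if the model tries to supply one.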

Enriching your tool outputs with additional data can help you use these outputs in subsequent actions or processes, increasing developer efficiency.

- Tools in LangChain can now return results that downstream components need but that should not be part of the content sent to the model, via an `artifact` attribute in ToolMessages. Tools can also return ToolMessages to set the `artifact` themselves, giving developers more control over output management. See docs here (Python, JS).

- We’ve also enabled tools to stream custom events, providing real-time feedback that improves your tools’ usability. See docs here (Python, JS).
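As a rough illustration of the content/artifact split described above, the sketch below uses plain Python stand-ins. `ToolResult` and `as_tool` are hypothetical names invented for this example and are not LangChain classes. The point is only that a tool can produce a short text for the model and a richer object for downstream code.

```python
import random
from dataclasses import dataclass
from typing import Any, Callable, List, Tuple


@dataclass
class ToolResult:
    """Hypothetical stand-in for a tool message with an artifact slot."""

    content: str          # short text that is sent back to the model
    artifact: Any = None  # raw data kept for downstream code only


def as_tool(func: Callable[..., Tuple[str, Any]]) -> Callable[..., ToolResult]:
    """Wrap a function returning (content, artifact) so it returns ToolResult."""

    def wrapper(*args, **kwargs) -> ToolResult:
        content, artifact = func(*args, **kwargs)
        return ToolResult(content=content, artifact=artifact)

    return wrapper


@as_tool
def generate_numbers(count: int, seed: int) -> Tuple[str, List[int]]:
    """Generate random digits; the model only sees a one-line summary."""
    rng = random.Random(seed)
    numbers = [rng.randint(0, 9) for _ in range(count)]
    return f"Generated {count} random integers.", numbers


message = generate_numbers(count=5, seed=0)
model_input = message.content       # what goes back into the prompt
downstream_data = message.artifact  # what later steps can use directly
print(model_input, len(downstream_data))
```

Keeping the full list out of `content` avoids spending model context on data the model does not need, while later steps can still read it from `artifact`.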

Tools can fail for various reasons, so implementing fallback mechanisms and handling these failures gracefully is important for maintaining app stability. To support this, we’ve added:

- Docs for how to use prompt engineering and fallbacks to handle tool calling errors (Python, JS).

- Docs for how to use flow engineering in your LangGraph graph to handle tool calling errors (Python, JS).
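A fallback strategy of the kind these docs cover can be sketched without any framework. The code below is an assumption-laden illustration, not LangChain's or LangGraph's API: `call_with_fallbacks`, `strict_divide`, and `safe_divide` are hypothetical. Tools are tried in order, and failures are collected as text that could be sent back to the model or to a retry step.

```python
from typing import Any, Callable, Dict, List


def call_with_fallbacks(
    tools: List[Callable[..., Any]], args: Dict[str, Any]
) -> Dict[str, Any]:
    """Try each tool in order; report errors as data instead of raising."""
    errors: List[str] = []
    for tool in tools:
        try:
            return {"ok": True, "result": tool(**args), "errors": errors}
        except Exception as exc:  # sketch only; real code should catch narrower errors
            errors.append(f"{tool.__name__}: {exc}")
    # Every tool failed: return the error text so the caller can pass it
    # back to the model or a retry step instead of crashing the application.
    return {"ok": False, "result": None, "errors": errors}


def strict_divide(a: float, b: float) -> float:
    """Primary tool: raises on division by zero."""
    return a / b


def safe_divide(a: float, b: float) -> float:
    """Fallback tool: returns infinity instead of raising."""
    return a / b if b else float("inf")


outcome = call_with_fallbacks([strict_divide, safe_divide], {"a": 1.0, "b": 0.0})
print(outcome)
```

Here the primary tool fails, the fallback succeeds, and the recorded error remains available for logging or for prompting the model to correct its arguments.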

What’s next

In the coming weeks we’ll continue adding how-to guides and best practices for defining tools and designing tool-using architectures. We’ll also refresh the documentation for our many tool and toolkit integrations. These efforts aim to empower users to maximize the potential of LangChain tools as they build context-aware reasoning applications.

If you haven’t already, check out our docs to learn more about LangChain for Python and JavaScript.
