Baoyue (包阅) Reading Guide Summary

1. Keywords: AIOps, LLMs, root cause analysis, efficiency gains, use cases

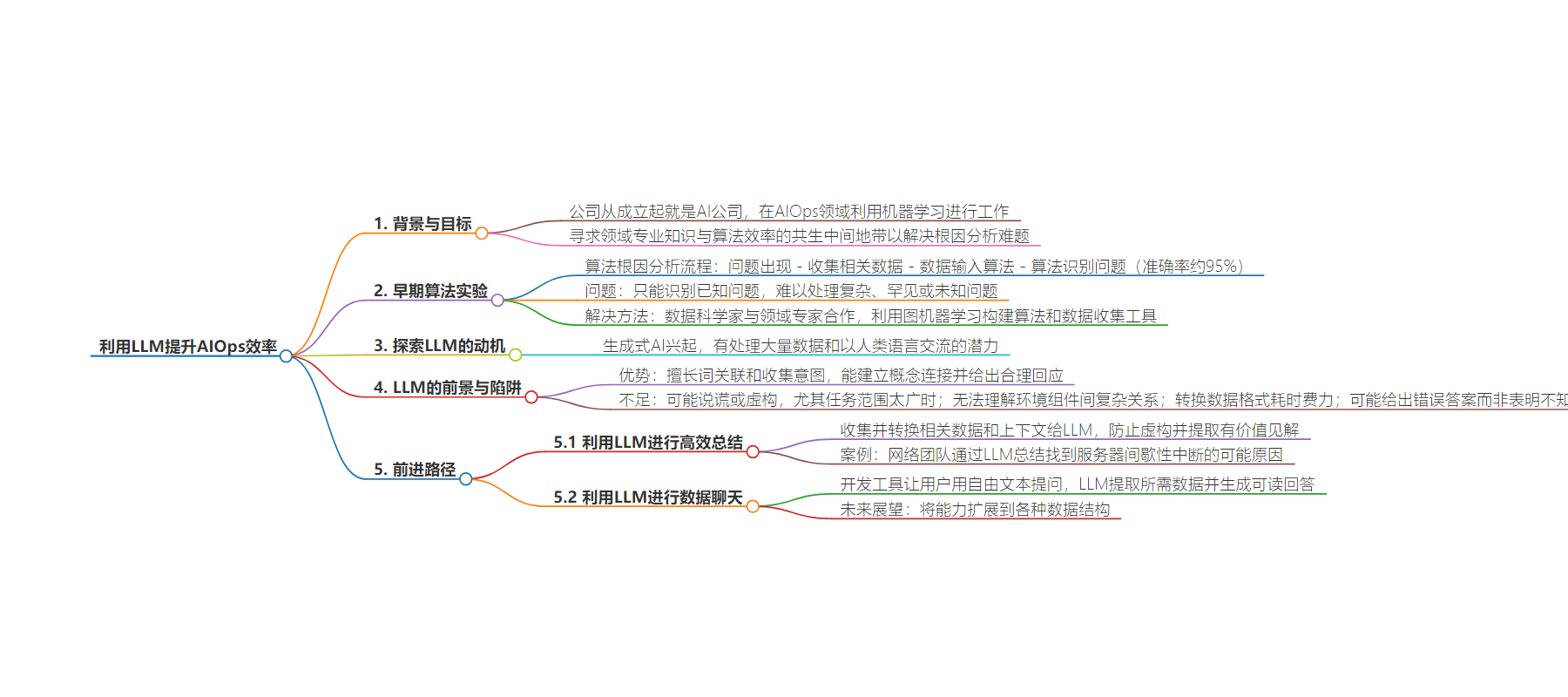

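2. Summary: This article describes how Senser has explored applying LLMs in AIOps. It covers the company's early algorithmic experiments, the strengths and limitations of LLMs, and the two early use cases it found to be a good fit, summarization and chat, with the aim of improving AIOps efficiency.

3. Main points:

- Supercharge AIOps Efficiency With LLMs

  - Early algorithmic root cause analysis and its problems

    - The early algorithms identified known issues with roughly 95% accuracy but offered little help with complex, previously unknown problems.

    - High-quality input data is important to an algorithm's success, and root cause analysis requires domain experts and data scientists to work together.

  - Motivation and considerations for exploring LLMs

    - With the rise of generative AI, Senser considered how it could be applied in AIOps.

    - LLMs are good at word association and gathering intent, but they can lie or hallucinate.

  - The two LLM use cases identified

    - Saving time with efficient summarization: provide the model with information and guidance, then extract the main points and insights.

    - Using chat to interact with data: recognize the intent of a question, extract the data needed, and respond.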

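Article URL: https://thenewstack.io/supercharge-aiops-efficiency-with-llms/

Source: thenewstack.io

Author: Avitan Gefen

Published: 2024/8/2 16:27

Language: English

Total word count: 1803

Estimated reading time: 8 minutes

Score: 86

Tags: AIOps, LLM, observability, root cause analysis, generative AI

The original article follows.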

Senser has been an AI company from day one. We operate within the AIOps category, using machine learning to graph production cloud and IT environments and give our customers deep insights into root causes and the impact of changes. While the buzz around implementing generative AI capabilities in the observability market is recent and loud, we’ve been quietly evaluating the specific roles LLMs can play in bolstering our offering over the past year. This blog documents our process and what we have learned along the way.

Root cause analysis is one of the most challenging issues in observability. Because it requires an abundance of context and domain-specific knowledge, automation is extremely difficult to achieve. Application, network, and infrastructure-level data streams must be reliable and constantly monitored in relation to one another. Typically, the best root cause analysis requires a team of senior site reliability leaders and developers who know the system’s ins and outs and probably helped build it.

To scale those senior leaders (and hopefully make their lives easier), we sought to find the symbiotic middle ground where domain expertise meets algorithmic efficiency.

Pioneering With AI

Early Experiments With Algorithms

Our early attempts at algorithmic root cause analysis (in the pre-LLM days) went something like this:

- Issue occurs

- Data is gathered around the issue (time and proximity)

- Data is fed into an algorithm

- The algorithm identifies the issue with ~95% accuracy
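As an illustration only, the data-gathering step in the list above (time and proximity) could be sketched as follows. This is not Senser's implementation: the `Event` type, the `gather_context` function, the five-minute window, and the `neighbors` set are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Event:
    service: str
    timestamp: datetime
    message: str


def gather_context(events, issue_service, issue_time, neighbors,
                   window=timedelta(minutes=5)):
    """Keep events that are close to the issue in both time and topology.

    `neighbors` is a hypothetical set of services adjacent to the affected one.
    """
    nearby = {issue_service} | set(neighbors)
    return [
        e for e in events
        if e.service in nearby and abs(e.timestamp - issue_time) <= window
    ]


t0 = datetime(2024, 8, 2, 16, 0)
events = [
    Event("checkout", t0 - timedelta(minutes=2), "latency spike"),
    Event("payments", t0 + timedelta(minutes=1), "connection resets"),
    Event("search", t0, "cache miss rate up"),                # not a neighbor
    Event("payments", t0 - timedelta(hours=1), "deploy finished"),  # too old
]
context = gather_context(events, "checkout", t0, neighbors={"payments"})
```

Only the first two events survive the filter. Whatever algorithm runs next sees nothing outside this window, which is why the choice of window and neighbors matters so much.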

Initially, this felt like a success. But then, we realized something important. The algorithm was only successful in identifying issues that were already known. How could it help determine the causes of more complex, rare, or previously unknown problems where domain expertise is critical?

Algorithms follow a simple principle: quality in, quality out. You are more likely to achieve high-quality results when you input high-quality data into a system. Developing a root cause algorithm that can handle “new” situations is difficult; the missing context is crucial.

To ensure an algorithm’s success, it’s essential to have a human domain expert feed it the appropriate data in the correct context. Finding the root cause is different from a typical data science problem. In classical supervised learning, having more high-quality data generally yields better results. However, the root cause problem space is vast and contains many unknowns. It also doesn’t fit neatly into unsupervised anomaly detection because there’s too much noise: many anomalies are insignificant, and crucial patterns might not look strange enough to be flagged. Domain expertise is vital to narrow the problem space, sift through noise, and piece together significant patterns.

So, our data scientists teamed up with our domain experts to build a series of algorithms and data collection tools that could more accurately conduct root cause analysis. By leveraging Graph Machine Learning, Senser can map a network of components (nodes) and their interactions (edges) to gain insight into the dynamics of complex systems. While full automation is the goal, we have already made strides in developing a system that significantly reduces workloads for SREs while improving diagnostic outcomes.
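To make the node-and-edge framing concrete, here is a minimal sketch of a dependency graph and a breadth-first walk over it. It is plain graph traversal rather than graph machine learning, and the service names, `build_graph`, and `dependencies_within` are assumptions for illustration; the article does not describe Senser's actual models.

```python
from collections import deque


def build_graph(edges):
    """Build an adjacency map from (caller, callee) pairs."""
    graph = {}
    for caller, callee in edges:
        graph.setdefault(caller, set()).add(callee)
        graph.setdefault(callee, set())
    return graph


def dependencies_within(graph, start, max_hops):
    """Breadth-first search: map each reachable component to its hop distance."""
    distance = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if distance[node] == max_hops:
            continue  # do not expand beyond the hop limit
        for nxt in sorted(graph.get(node, ())):
            if nxt not in distance:
                distance[nxt] = distance[node] + 1
                queue.append(nxt)
    del distance[start]
    return distance


edges = [
    ("frontend", "checkout"),
    ("checkout", "payments"),
    ("payments", "postgres"),
    ("frontend", "search"),
]
graph = build_graph(edges)
candidates = dependencies_within(graph, "frontend", max_hops=2)
```

With a two-hop limit, `candidates` contains `checkout`, `search`, and `payments` but not `postgres`, which sits three hops away. A hop limit like this is one simple way to narrow the problem space before any heavier analysis.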

Motivation for Exploring LLMs

Along comes generative AI, which has taken the world by storm and is redefining the way many of us work. People are finding new ways to increase their productivity and performance daily with LLMs. An immense amount of data must be constantly processed for observability and site reliability. On the surface, LLMs show promise in making sense of large swaths of information and communicating in human-understandable language.

Like many in our industry, we recognized immediately that LLMs would play a role in its future. The only question was how.

The Promise and Pitfalls of LLMs

With LLMs having arrived on the scene, we immediately started building a framework around incorporating them. First, we had to consider how exactly generative AI algorithms “learn” and generate their responses. LLMs are experts in word association and gathering intent. They are excellent at developing connections between concepts and delivering reasonable-sounding responses to the prompter. However, they can also lie or hallucinate, especially when the task they are given is too broad in scope.

We considered what it would look like to deploy LLMs to root cause analysis. Retrieval-augmented generation (RAG), a technique for enhancing the accuracy and reliability of generative AI models with facts fetched from various sources, offered some promise here. Using RAG, the LLM could pull information from logs, metrics, and past incident reports and synthesize that information into a cohesive story.
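The retrieval half of RAG can be sketched in a few lines. The example below is a toy under stated assumptions: it ranks documents by simple token overlap instead of the embedding search a real system would likely use, the corpus entries are invented, and no model is called. The functions `tokenize`, `score`, `retrieve`, and `build_rag_prompt` are hypothetical names.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query, document):
    """Count how many query tokens also occur in the document."""
    q, d = Counter(tokenize(query)), Counter(tokenize(document))
    return sum(min(count, d[token]) for token, count in q.items())


def retrieve(query, documents, k=2):
    """Return up to k documents with a non-zero overlap score, best first."""
    ranked = sorted(documents, key=lambda doc: score(query, doc), reverse=True)
    return [doc for doc in ranked[:k] if score(query, doc) > 0]


def build_rag_prompt(question, documents, k=2):
    """Assemble a prompt that contains only the retrieved context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say you do not know.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


corpus = [
    "2024-05-01 incident: checkout latency caused by exhausted database connection pool",
    "metric: search cache hit rate dropped to 40 percent",
    "log: payments service restarted after out-of-memory kill",
]
prompt = build_rag_prompt("Why is checkout latency high?", corpus)
```

Only the past incident report about checkout latency reaches the prompt; the unrelated metric and log entries are left out.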

But even with RAG, only a fraction of the network, application, and infrastructure data could be fed to the model continuously and affordably. Even if feeding it everything were feasible, LLMs cannot understand the highly structured yet complex relationships between the various components of an environment. To start, it would take an impractical amount of time and effort to translate the data into a language-based prompt format. Beyond that, an LLM could tell whether a particular metric is outside of the acceptable range, but it cannot infer which second- or third-order cause might be responsible for the change. Worse, if the model lacks critical information, it is likelier to guess a wrong answer than to state that it does not know. That’s the intent part: LLMs have a compulsive need to answer the question asked, even if that means answering it incorrectly. In mission-critical situations, we can’t afford hallucinations.

With all these considerations in mind, we knew that severe constraints would be needed if LLMs were to play a role in our AIOps platform.

The Path Forward – 2 AIOps Use Cases for LLMs

Having deeply studied LLMs’ strengths and limitations through the lens of AIOps, we identified two early use cases that LLMs are ready to tackle today.

Saving Time With Summarization

One of the superpowers LLMs bring to the table is ultra-efficient summarization. Given a dense block of information, generative AI models can extract the main points and actionable insights. As with our earlier trials in algorithmic root cause analysis, we gathered all the data we could surrounding an observed issue, converted it into text-based prompts, and fed it to an LLM along with guidance on how it should summarize and prioritize the data. The LLM was then able to leverage its broad training and newfound context to summarize the issues and hypothesize about root causes. By constraining the scope of the prompt, providing the LLM with the information and context it needs and nothing more, we were able to prevent hallucinations and extract valuable insights from the model.
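The conversion of incident data into a bounded, text-based prompt might look roughly like the sketch below. Everything in it is an assumption for illustration: the `build_summary_prompt` function, the metric names and limits, the log lines, and the wording of the guidance. The call to an actual model is deliberately left out.

```python
def build_summary_prompt(issue, metrics, log_lines, max_log_lines=3):
    """Render structured incident data as a bounded, text-only prompt.

    `metrics` maps a metric name to a (value, upper_limit) pair.
    """
    metric_text = "\n".join(
        f"- {name}: {value} (expected <= {limit})"
        for name, (value, limit) in sorted(metrics.items())
        if value > limit  # include only metrics outside their expected range
    )
    # Keep only the most recent log lines so the prompt stays small.
    log_text = "\n".join(f"- {line}" for line in log_lines[-max_log_lines:])
    return (
        "You are assisting an SRE. Summarize the incident below in three "
        "bullet points, most severe first, then list hypotheses about the "
        "root cause. Use only the data provided and label every hypothesis "
        "as unverified.\n"
        f"Issue: {issue}\n"
        f"Metrics out of range:\n{metric_text}\n"
        f"Recent log lines:\n{log_text}"
    )


metrics = {
    "cpu_percent": (97, 80),
    "error_ratio": (0.2, 1.0),       # within range, so it is omitted
    "p99_latency_ms": (2300, 500),
}
log_lines = [
    "15:40 health check ok",
    "15:58 deploy of build 1234 finished",
    "16:01 worker pool exhausted",
    "16:02 request timeout on /pay",
]
prompt = build_summary_prompt("Intermittent outages on checkout", metrics, log_lines)
```

The filtering is the point of the exercise: in-range metrics and older log lines never reach the model, so it has less unrelated material to speculate about.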

It still takes a domain expert to gather and transform the relevant data and context for the LLM and to hunt down and validate the potential root causes identified by the model. But there are massive efficiency gains in the middle.

Consider this hypothetical example: A network operations team notices intermittent outages affecting a segment of their servers. They collect logs, performance metrics, and historical incident reports and feed this data into an LLM with a prompt to identify patterns and potential causes of the outages. The LLM’s summarization highlights a possible correlation between high CPU usage and specific network traffic spikes. It also infers that a newly deployed software update could be causing the issue, as similar patterns are observed in post-deployment logs. The domain experts then investigate this hypothesis, confirming that the software update contains a bug that leads to resource exhaustion under specific conditions.

Combining powerful ML models with LLMs could reveal a winning combination for AI-based explainability. Extracting the information surrounding an issue from a graph-based model and then summarizing the feedback in text format will make AIOps more approachable, and it will also make insights easier to communicate horizontally and vertically within an organization.

Chatting With Data

Summarizing and hypothesizing about root causes is already proving to be a promising application of LLMs in AIOps. But LLMs possess another superpower that could be useful, too: chat.

Their friendly, intuitive interface is a huge reason for the widespread adoption of ChatGPT, Claude, Gemini, Llama, and other generalized models. We have all grown accustomed to search engines like Google, where we type questions and thousands of related links instantly populate the page. LLMs take it a step further, turning your questions into conversations, allowing for iteration, clarification, and memory.

We recognized an opportunity to leverage these chat capabilities in our product. While it is impractical and expensive to constantly provide LLMs with entire databases of observability data, it is not unreasonable to use them to “chat” with the data.

Earlier, we talked about how LLMs are great at recognizing intent. Leaning on that ability, we have developed a tool (in beta) that allows end users to ask specific questions in free text about their system data. The LLM, recognizing the intent of the question being asked, extracts the proper data needed to answer the question without drowning itself in unnecessary context and uses its generative capabilities to respond to the user in human-readable language. While this is a very reductive, simple way of using the technology, it is precisely the type of nuanced use case we, as an industry, are tuned to identify and leverage.
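The flow described above (recognize intent, fetch only the matching data, respond in plain language) can be outlined as follows. This is a sketch, not the beta tool itself: a keyword lookup stands in for the LLM's intent recognition, and the `METRICS` store, `Intent` type, and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intent:
    metric: str
    service: str


# Hypothetical in-memory store standing in for real observability data.
METRICS = {
    ("error_rate", "checkout"): 0.07,
    ("error_rate", "payments"): 0.01,
    ("latency_ms", "checkout"): 840,
}

METRIC_KEYWORDS = {"error": "error_rate", "latency": "latency_ms", "slow": "latency_ms"}


def recognize_intent(question):
    """Stand-in for the LLM step: map free text to a structured intent, or None."""
    words = question.lower().replace("?", "").split()
    metric = next((METRIC_KEYWORDS[w] for w in words if w in METRIC_KEYWORDS), None)
    services = {service for _, service in METRICS}
    service = next((w for w in words if w in services), None)
    if metric is None or service is None:
        return None
    return Intent(metric, service)


def answer(question):
    """Fetch only the data the intent calls for and phrase a reply."""
    intent = recognize_intent(question)
    if intent is None:
        return "I could not tell which metric and service you mean."
    value = METRICS.get((intent.metric, intent.service))
    if value is None:
        return f"No {intent.metric} data is available for {intent.service}."
    return f"{intent.metric} for {intent.service} is {value}."


reply = answer("What is the error rate for checkout?")
```

Because the lookup is driven by a structured intent, the reply is built from a single stored value rather than from the whole dataset, and a question that cannot be mapped to an intent gets an explicit "could not tell" response instead of a guess.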

As LLMs progress even further, we look to extend this capability beyond text-based query databases to all types of data structures, including lists, trees, graphs, or any combination found in relational or non-relational databases. Leaping with LLMs from text-based data to these other formats will open exciting doors for the observability space.

The Next Frontier for LLMs in AIOps

Improving Model Performance

We continue to refine our models to improve their performance and accuracy in real-world scenarios. This involves ongoing research and experimentation and continually testing new models as they become available.

Extending the Models to New Use Cases (SLO Generation)

We are exploring how LLMs can be extended to other use cases, such as generating Service Level Objectives (SLOs) and other performance metrics.

Post-Mortem Report Generation

Another potential application of LLMs is automatically generating post-mortem reports after incidents. Documenting issues and resolutions is not only a best practice but also sometimes a compliance requirement. Rather than scheduling multiple meetings with different SREs, developers, and DevOps engineers to collect information, could LLMs extract the necessary information from the Senser platform and generate reports automatically?

Conclusions

When we say that LLMs are bad for root cause analysis, we mean it. But that doesn’t mean they won’t play a critical role. Our viewpoint is that they’ll serve a more nuanced function as an enabling technology rather than a holistic solution.

As innovators in the observability space, we constantly update our views and beliefs about emerging technologies. Our passion and curiosity lead us to experiment with the latest and greatest, and we love sharing what we have learned with like-minded technologists.
