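Baoyue Reading Guide Summary

1. Keywords: Google Search, Fake Content, Generative Imagery, Removal Processes, Ranking Systems

2. Summary: Google Search is working to combat non-consensual explicit fake content. Drawing on feedback from experts and victim-survivors, it is sharing significant updates, including simplified removal processes and improved ranking systems, to better protect people.

3. Main points:

– As generative imagery technology improves, explicit fake content is increasing, and Google needs to respond.

– This content is published without consent and is deeply distressing for the people affected.

– Google is taking action to protect people:

– Streamlined removal processes: easier handling at scale; after a successful removal, explicit results on similar searches are filtered, and duplicates of the removed image are scanned for and removed.

– Improved ranking systems: lower the ranking of explicit fake content, surface high-quality non-explicit content for specific queries, distinguish real explicit content from fake, and demote sites that have received a high volume of removals for fake explicit imagery.

– Google will keep working on this problem and continue investing in industry partnerships and expert engagement.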

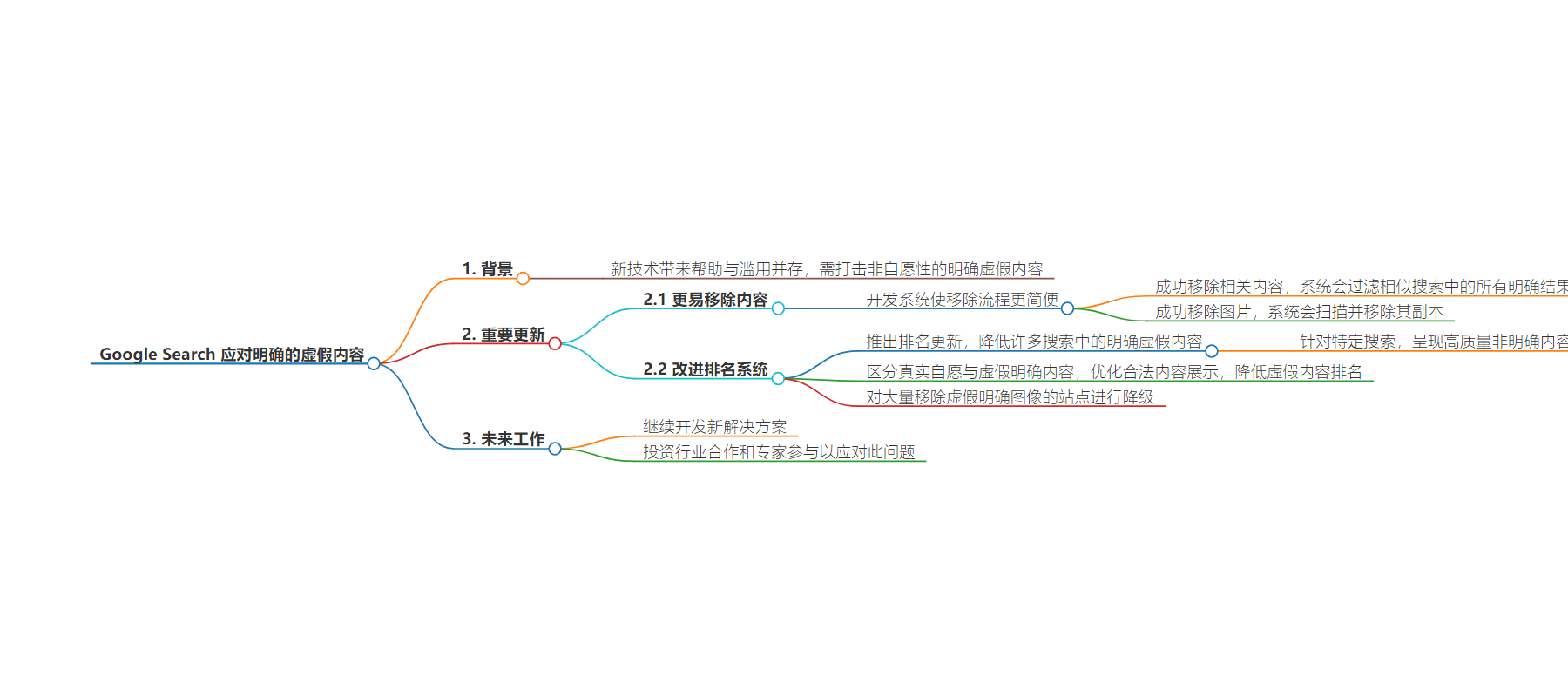

Mind map:

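Article URL: https://blog.google/products/search/google-search-explicit-deep-fake-content-update/

Source: blog.google

Author: Emma Higham

Published: 2024/7/31 13:00

Language: English

Word count: 659

Estimated reading time: 3 minutes

Score: 82

Tags: deepfakes, content removal, search engine policy, privacy protection, user privacy

The original article follows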


With every new technology advancement, there are new opportunities to help people — but also new forms of abuse that we need to combat. As generative imagery technology has continued to improve in recent years, there has been a concerning increase in generated images and videos that portray people in sexually explicit contexts, distributed on the web without their consent.

These are sometimes referred to as explicit “deepfakes,” and this content can be deeply distressing for people affected by it. That’s why we’ve invested in long-standing policies and systems to help people gain more control over this content.

Today, we’re sharing a few significant updates, which were developed based on feedback from experts and victim-survivors, to further protect people. These include: updates to our removal processes to make it easier for people to remove this content from Search and updates to our ranking systems to keep this type of content from appearing high up in Search results.

Easier ways to remove content

For many years, people have been able to request the removal of non-consensual fake explicit imagery from Search under our policies. We’ve now developed systems to make the process easier, helping people address this issue at scale.

When someone successfully requests the removal of explicit non-consensual fake content featuring them from Search, Google’s systems will also aim to filter all explicit results on similar searches about them. In addition, when someone successfully removes an image from Search under our policies, our systems will scan for – and remove – any duplicates of that image that we find.

These protections have already proven to be successful in addressing other types of non-consensual imagery, and we’ve now built the same capabilities for fake explicit images as well. These efforts are designed to give people added peace of mind, especially if they’re concerned about similar content about them popping up in the future.
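The duplicate scan described above can be illustrated with a minimal sketch. The post does not say how Google identifies duplicates, so everything here is an assumption: the `RemovalIndex` class and its methods are hypothetical names, and exact SHA-256 matching of image bytes stands in for whatever matching a production system uses (which would likely also need to catch resized or re-encoded copies).

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class RemovalIndex:
    """Hypothetical store of fingerprints for images removed on request."""

    removed_hashes: set[str] = field(default_factory=set)

    @staticmethod
    def fingerprint(image_bytes: bytes) -> str:
        # Assumption: exact byte-level hashing. This only catches
        # bit-identical copies, not resized or re-encoded ones.
        return hashlib.sha256(image_bytes).hexdigest()

    def record_removal(self, image_bytes: bytes) -> None:
        """Remember an image that was successfully removed."""
        self.removed_hashes.add(self.fingerprint(image_bytes))

    def filter_results(self, results: list[tuple[str, bytes]]) -> list[str]:
        """Return the URLs whose image does not match a removed image."""
        return [
            url
            for url, data in results
            if self.fingerprint(data) not in self.removed_hashes
        ]


if __name__ == "__main__":
    index = RemovalIndex()
    index.record_removal(b"removed-image-bytes")
    candidates = [
        ("https://a.example/copy.png", b"removed-image-bytes"),
        ("https://b.example/other.png", b"unrelated-image-bytes"),
    ]
    print(index.filter_results(candidates))  # ['https://b.example/other.png']
```

Keeping only fingerprints means the removed image itself does not have to be stored in order to recognise later copies.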

Improved ranking systems

With so much content created online every day, the best protection against harmful content is to build systems that rank high-quality information at the top of Search. So in addition to improving our processes for reporting and removing this content, we are updating our ranking systems for queries where there’s a higher risk of explicit fake content appearing in Search.

First, we’re rolling out ranking updates that will lower explicit fake content for many searches. For queries that are specifically seeking this content and include people’s names, we’ll aim to surface high-quality, non-explicit content — like relevant news articles — when it’s available. The updates we’ve made this year have reduced exposure to explicit image results on these types of queries by over 70%. With these changes, people can read about the impact deepfakes are having on society, rather than see pages with actual non-consensual fake images.

There’s also a need to distinguish explicit content that’s real and consensual (like an actor’s nude scenes) from explicit fake content (like deepfakes featuring said actor). While differentiating between these two kinds of content is a technical challenge for search engines, we’re making ongoing improvements to better surface legitimate content and downrank explicit fake content.

Generally, if a site has a lot of pages that we’ve removed from Search under our policies, that’s a pretty strong signal that it’s not a high-quality site, and we should factor that into how we rank other pages from that site. So we’re demoting sites that have received a high volume of removals for fake explicit imagery. This approach has worked well for other types of harmful content, and our testing shows that it will be a valuable way to reduce fake explicit content in search results.
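The site-level signal described above can be sketched in a few lines. This is an illustration only: the post gives no thresholds or formulas, so `REMOVAL_THRESHOLD`, `DEMOTION_FACTOR`, and the function `demote_by_site_removals` are hypothetical, and a real ranking system would combine many more signals.

```python
from urllib.parse import urlparse

# Hypothetical values; the post does not state any threshold or penalty.
REMOVAL_THRESHOLD = 100
DEMOTION_FACTOR = 0.5


def demote_by_site_removals(results, removals_per_site):
    """Re-rank (url, base_score) pairs, penalising high-removal sites.

    removals_per_site maps a hostname to the number of its pages that
    were removed under policy.
    """
    adjusted = []
    for url, score in results:
        host = urlparse(url).hostname or ""
        # A high removal count is treated as a site-wide quality signal,
        # so every page from that host is scored lower.
        if removals_per_site.get(host, 0) >= REMOVAL_THRESHOLD:
            score *= DEMOTION_FACTOR
        adjusted.append((url, score))
    # Highest adjusted score first.
    return sorted(adjusted, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    results = [
        ("https://flagged.example/page", 0.9),
        ("https://news.example/story", 0.6),
    ]
    removals = {"flagged.example": 250}
    for url, score in demote_by_site_removals(results, removals):
        print(url, score)
```

In this example the page from the flagged site drops below the news article even though its base score was higher, which matches the intent of applying removal volume to other pages from the same site.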

These changes are major updates to our protections on Search, but there’s more work to do to address this issue, and we’ll keep developing new solutions to help people affected by this content. And given that this challenge goes beyond search engines, we’ll continue investing in industry-wide partnerships and expert engagement to tackle it as a society.