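Reading guide summary (包阅)

1. Keywords: DCPerf, benchmark suite, hyperscale computing, data center, open source

2. Summary: Meta has open-sourced DCPerf, a benchmark suite for hyperscale compute applications that represents the diverse categories of workloads running in data center cloud deployments. DCPerf has been continuously enhanced, is used to improve server designs and evaluate products, has been validated and optimized in collaboration with leading CPU vendors, and is now available on GitHub.

3. Main points:

- DCPerf is open-sourced
  - Represents the diverse categories of workloads in data center cloud deployments
  - Intended for broad use by academia, the hardware industry, and internet companies
- Introducing DCPerf
  - Developed by Meta
  - Each benchmark is designed by referencing a large application in Meta's production server fleet
  - Uses new techniques to ensure benchmark representativeness
  - Continuously enhanced for compatibility with different instruction set architectures and for multi-tenancy support
- Using DCPerf
  - Used internally at Meta for product evaluation
  - Assists decisions on which platforms to deploy in data centers
  - Offers richer application diversity and better performance coverage than benchmarks such as SPEC CPU
- Collaboration with the hardware industry
  - Validation and optimization with leading CPU vendors
  - Identification of areas for performance optimization
- Open collaboration and the future
  - The goal of open-sourcing is a collaborative, open source reference benchmark
  - Has the potential to become an industry-standard method; available on GitHub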

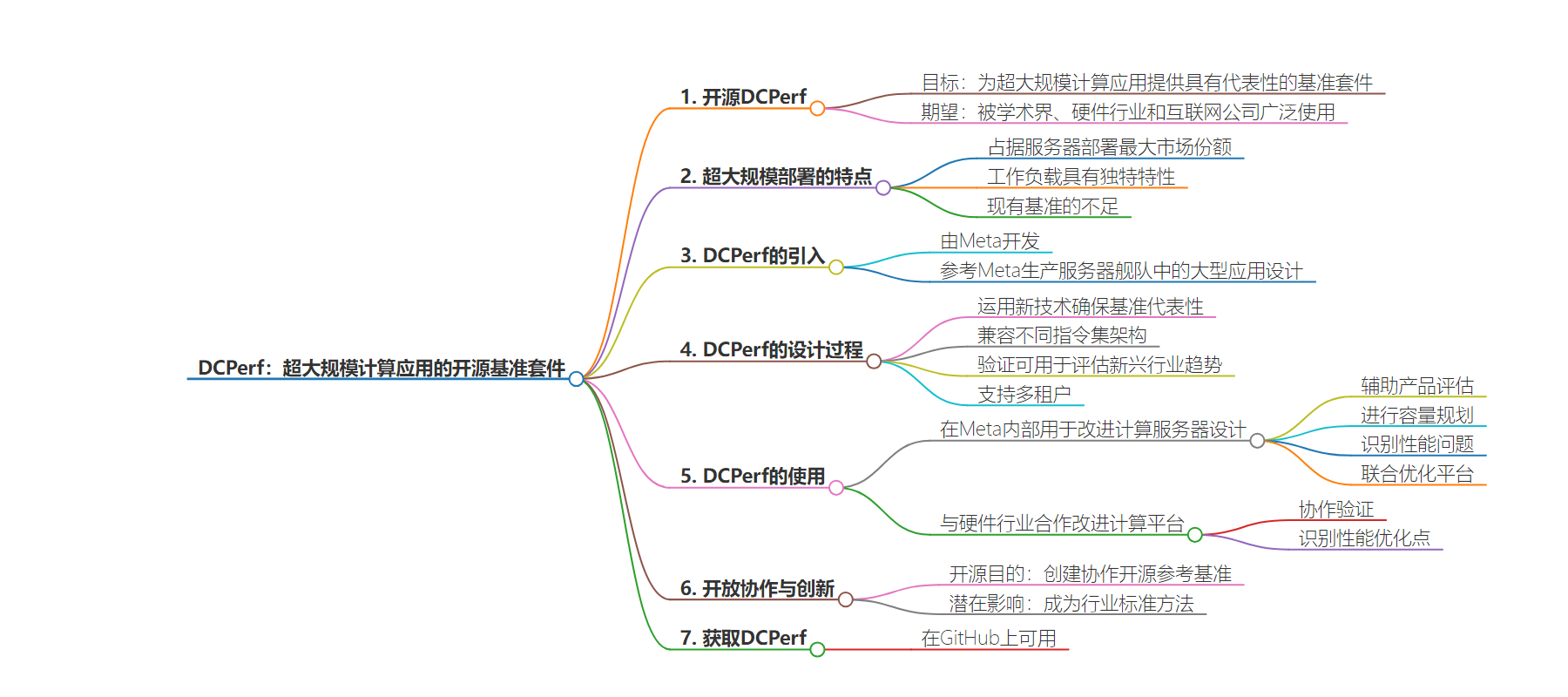

思维导图:

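Source: engineering.fb.com

Author: Engineering at Meta

Published: 2024/8/3 0:57

Language: English

Word count: 738

Estimated reading time: 3 minutes

Score: 86

Tags: hyperscale computing, data center optimization, benchmarking, open source, Meta

Original article follows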


- We are open-sourcing DCPerf, a collection of benchmarks that represents the diverse categories of workloads that run in data center cloud deployments.

- We hope that DCPerf can be used more broadly by academia, the hardware industry, and internet companies to design and evaluate future products.

- DCPerf is available now on GitHub.

Hyperscale and cloud datacenter deployments constitute the largest market share of server deployments in the world today. Workloads developed by large-scale internet companies and run in their datacenters have very different characteristics from those in high performance computing (HPC) or traditional enterprise market segments. Therefore, server design considerations, trade-offs, and objectives for datacenter use cases also differ significantly from those of other market segments and require a different set of benchmarks and evaluation methodology. Existing benchmarks fall short of capturing these characteristics and hence do not provide a reliable basis for designing and optimizing modern servers and datacenters.

Introducing DCPerf

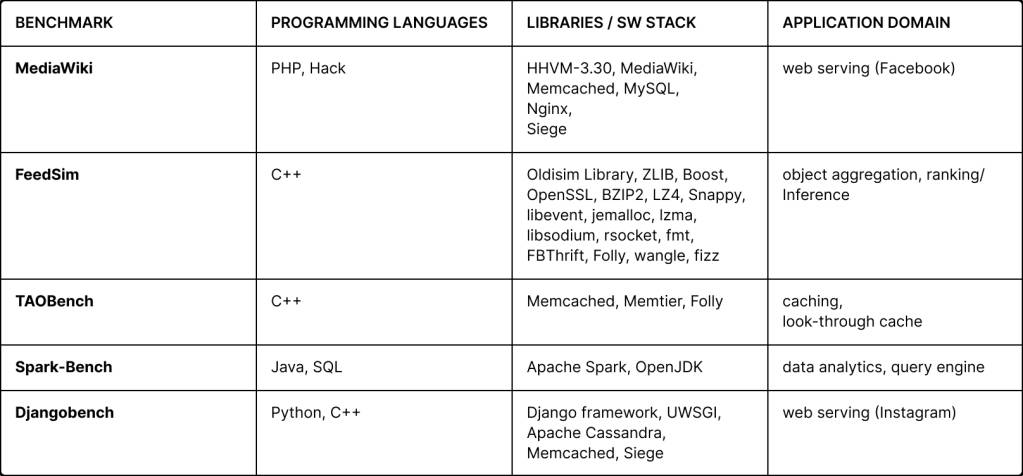

Meta developed DCPerf, a collection of benchmarks to represent the diverse categories of workloads that run in cloud deployments. Each benchmark within DCPerf is designed by referencing a large application within Meta’s production server fleet.

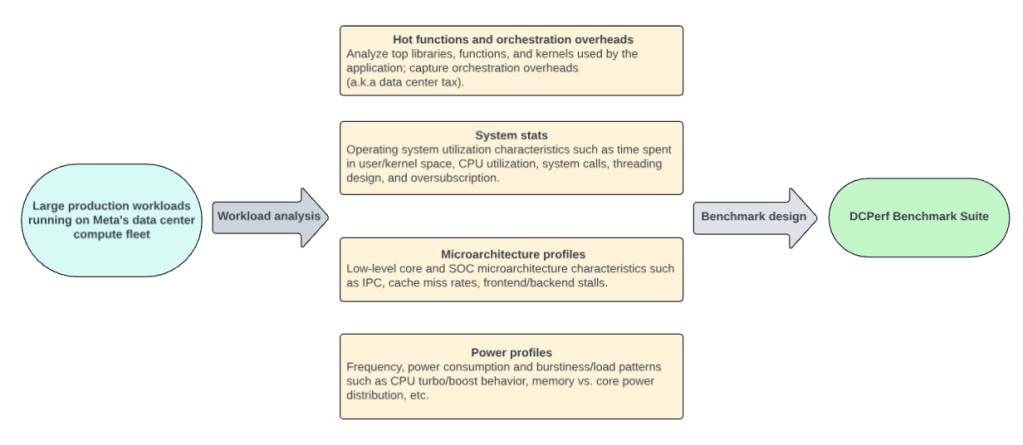

To ensure the benchmarks are representative, we used several new techniques to analyze production workloads, ranging from low-level hardware microarchitecture features to application and library usage profiles, and captured the important characteristics of these workloads in DCPerf. Designing and optimizing hardware and software for future server platforms using these benchmarks will more closely translate into improved efficiency of hyperscaler production deployments.

Over the past few years, we have continuously enhanced these benchmarks to make them compatible with different instruction set architectures, including x86 and ARM. We also validated that the benchmarks can be used to evaluate emerging industry trends (e.g., chiplet-based architectures) and added support for multi-tenancy so that benchmarks can scale and make use of rapidly increasing core counts on modern server platforms.
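To make the multi-tenancy idea concrete, here is a minimal sketch of one way a harness could split a machine's cores across several benchmark instances so that a high-core-count server stays fully loaded. It is an illustration only and is not taken from DCPerf: the function name `partition_cores` and the contiguous, near-equal split are assumptions, and the article does not describe how DCPerf actually assigns cores to tenants.

```python
from typing import List


def partition_cores(total_cores: int, tenants: int) -> List[List[int]]:
    """Split core IDs 0..total_cores-1 into `tenants` contiguous, near-equal groups.

    Hypothetical helper for illustration; not part of DCPerf.
    """
    if tenants <= 0 or total_cores < tenants:
        raise ValueError("need at least one tenant and at least one core per tenant")
    base, extra = divmod(total_cores, tenants)
    groups: List[List[int]] = []
    start = 0
    for i in range(tenants):
        # The first `extra` tenants each receive one additional core.
        size = base + (1 if i < extra else 0)
        groups.append(list(range(start, start + size)))
        start += size
    return groups


if __name__ == "__main__":
    for tenant_id, cores in enumerate(partition_cores(total_cores=10, tenants=4)):
        print(f"tenant {tenant_id}: cores {cores}")
```

Each group could then be handed to one benchmark instance (for example, as a CPU affinity set), so that adding cores means adding or widening tenants rather than relying on a single instance to scale.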

Using DCPerf to improve Meta’s compute server designs

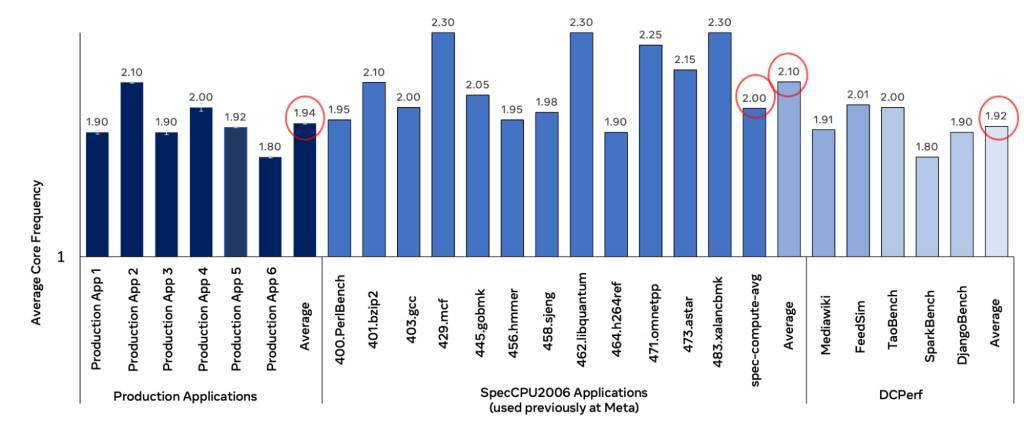

We have been using DCPerf internally, in addition to the SPEC CPU benchmark suite, for product evaluation at Meta to make the right configuration choices for our data center deployments. DCPerf also helps us make early performance projections that are used for capacity planning, identify performance bugs in hardware and system software, and jointly optimize the platform with our hardware industry collaborators.

DCPerf provides a much richer set of application software diversity and helps get better coverage signals on platform performance than existing benchmarks such as SPEC CPU. Due to these benefits, we have also started using DCPerf to assist with our decision-making process on which platforms to deploy in our data centers.
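As a rough illustration of how results from a diverse suite can be condensed into a single platform-level signal, the sketch below compares a candidate platform against a baseline using the geometric mean of per-benchmark score ratios, the same kind of aggregation SPEC CPU uses for its overall metrics. The article does not say how DCPerf summarizes its results, so this aggregation, the function name `geometric_mean_ratio`, and the workload names and scores are all hypothetical.

```python
import math
from typing import Dict


def geometric_mean_ratio(candidate: Dict[str, float], baseline: Dict[str, float]) -> float:
    """Geometric mean of candidate/baseline score ratios (higher score = better).

    Hypothetical helper for illustration; not DCPerf's documented scoring.
    """
    if not candidate or candidate.keys() != baseline.keys():
        raise ValueError("both platforms must report the same non-empty set of benchmarks")
    # Average the logs of the ratios, then exponentiate, to avoid overflow on long products.
    log_sum = sum(math.log(candidate[name] / baseline[name]) for name in candidate)
    return math.exp(log_sum / len(candidate))


if __name__ == "__main__":
    # Made-up workload names and scores, for demonstration only.
    baseline = {"web": 100.0, "cache": 200.0, "ranking": 50.0}
    candidate = {"web": 120.0, "cache": 210.0, "ranking": 65.0}
    print(f"relative performance: {geometric_mean_ratio(candidate, baseline):.3f}")
```

A geometric mean keeps one outlier workload from dominating the summary, which matters more as the suite covers more diverse applications.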

Improving state-of-the-art computing platforms with our hardware industry collaborators using DCPerf

Over the last two years, we have collaborated with leading CPU vendors to further validate DCPerf on pre-silicon and/or early-silicon setups, to debug performance issues, and to identify hardware and system software optimizations on their roadmap products. In multiple instances, we have been able to identify performance optimizations in areas such as CPU core microarchitecture settings and SoC power management.

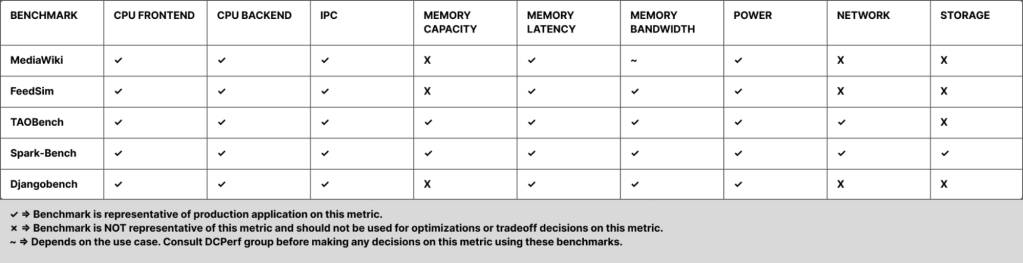

The graphic below shows areas of HW/SW design where we have seen DCPerf being representative of production usage and being beneficial for delivering relevant performance signals and help with optimizations as well as areas of future work.

We are thankful for our collaborators’ support and contributions using DCPerf to drive innovation in such an important and complex area and expect to continue improving the benchmarks with new version releases over time to adapt to emerging technologies.

Enabling innovations through open collaboration

Today, we are open-sourcing DCPerf with the goal of creating a collaborative and open source reference benchmark that can be used to design, develop, debug, optimize, and improve the state of the art in compute platform designs for hyperscale.

As an open source benchmark suite, DCPerf has the potential to become an industry-standard method for capturing important characteristics of the compute workloads that run in hyperscale datacenter deployments.

Get DCPerf on GitHub

DCPerf is available now on GitHub.