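Baoyue (包阅) Reading Guide Summary

1. Keywords: Least Privilege, LLMs, Access Control, Security, Probabilistic

2. Summary: In traditional application security and access control, least privilege was hard to put into practice and caused many problems. AI and large language models (LLMs) now enable a dynamic, probabilistic approach to least privilege that promises better efficiency and security and less friction for everyone involved, although humans still need to handle edge cases.

3. Main points:

– The predicament of traditional least privilege

  – A fine ideal but hard to practice: companies adopted role-based access control, and locking systems down improved security but also introduced friction and rigidity.

  – The core requirement scaled poorly and became a problem in its own right; attackers learned to exploit the systems that manage privilege.

– Why least privilege is painful

  – Permission scope: too strict and it hampers work; too broad and it creates security risk.

  – Permission bottlenecks: without automation, people wait for access, which hurts productivity and morale.

  – Permission complexity: modern environments are complex, and dynamic roles and permissions are hard to manage.

– Dynamic least privilege with LLMs

  – Some companies are experimenting with new approaches in which LLMs use large volumes of decision logs and data to improve accuracy.

  – In the new model, privilege can be as fine-grained as the individual request and can be adjusted dynamically.

– LLMs make least privilege better

  – Better efficiency and security, thanks to strong pattern matching.

  – Humans still handle edge cases; an improved decision architecture reduces friction.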

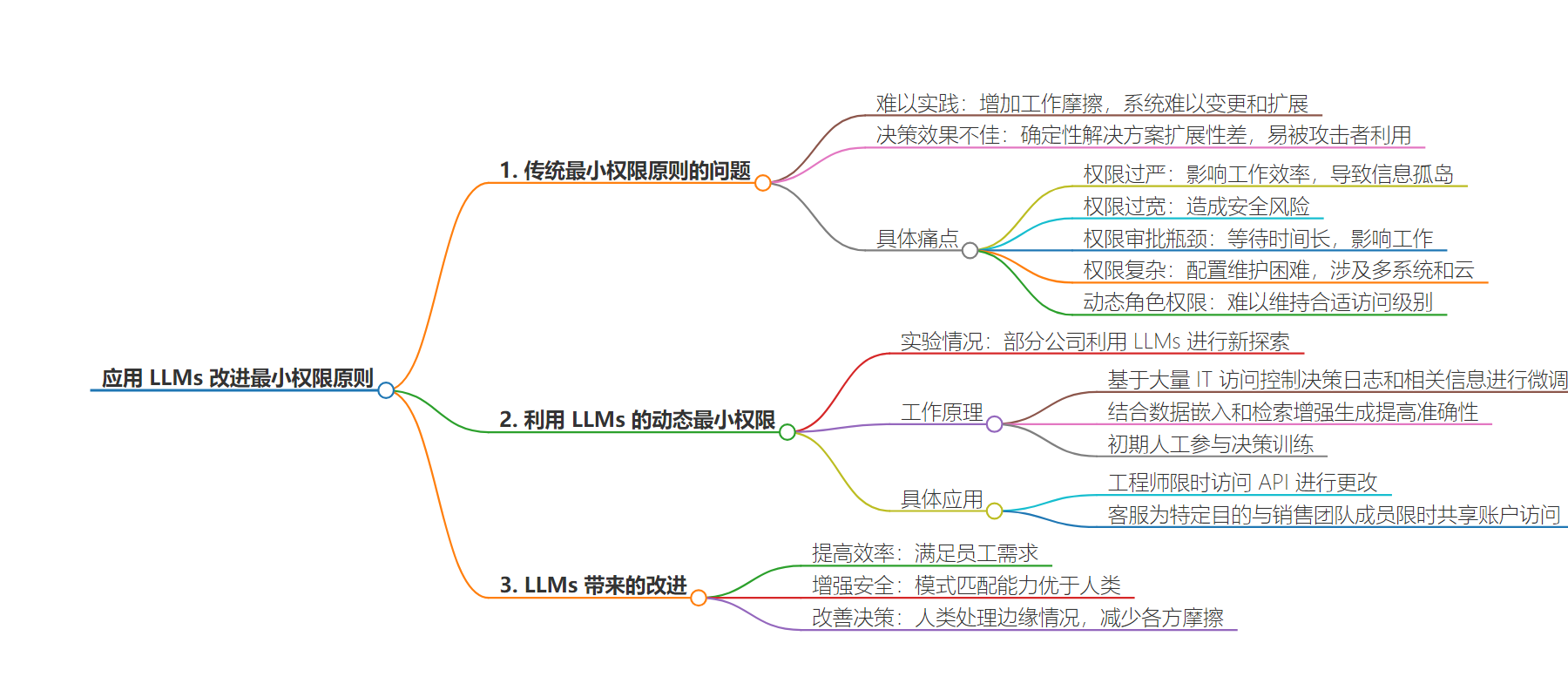

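Mind map:

Article URL: https://thenewstack.io/rethinking-least-privilege-in-the-era-of-ai/

Source: thenewstack.io

Author: Liam Crilly

Published: 2024/8/1 13:30

Language: English

Word count: 1,532

Estimated reading time: 7 minutes

Score: 86

Tags: least privilege, artificial intelligence, large language models, access control, security

The original article follows.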


In the old world of application security and access control, least privilege was a lovely ideal but nearly unworkable in practice. Every company of a certain size instituted role-based access control (RBAC). As soon as a firm grew large enough to pursue any major form of compliance (like SOC 2) or to build out considerable technology infrastructure, a lockdown of systems would ensue. This lockdown may have boosted security, but it also injected friction and created brittle systems that were hard to change and scale.

Even as access control tooling improved, its core task of deciding who gets to use what remained a deterministic process that scaled poorly. Somewhere along the way, least privilege became as much a problem as a solution. Systems tasked with policing and managing least privilege not only became sources of friction that slowed down work, but also became so predictable that attackers learned to exploit them.

For example, attackers specifically targeted Okta’s customer support system, which inherently had elevated access to customer data. They obtained the credentials for that system from an employee’s personal Google account, which was in use on a work laptop and in which the employee had saved the account credentials. The incident shows how a predictable, highly privileged system becomes a target in its own right.

Today we are in the early days of a radical rethink of least privilege. AI and large language models (LLMs) are amazing prediction machines. Functioning at near-wire speed, they can make highly accurate guesses about whether a requester (machine or human) should be granted access to a system. This is leading to new applications of least privilege that are dynamic and probabilistic and decide not on a case-by-case but on an actual request-by-request basis.

Naturally, the very companies building the AI are leading the way. CISOs from OpenAI, Anthropic and DeepMind discussed this in a deep-dive podcast. Should it prove effective, this new approach to least privilege could dramatically change the way that access control works and accelerate workflows across all verticals and technology silos.

The Many Reasons Why Least Privilege Is Painful

The principle of least privilege is a foundational concept in information security, originating from the early days of computing and access control mechanisms. It was formally articulated by Jerome Saltzer and Michael D. Schroeder in their seminal 1975 paper, “The Protection of Information in Computer Systems.” This principle was designed to minimize the attack surface within systems by ensuring that users and programs operate with the minimum level of access necessary to perform their tasks.

The legacy approach to least privilege often revolves around tightly restricting user access to only the resources and information necessary for their specific role. While this is fundamentally sound, it can lead to inefficiencies and frustration when users need to navigate complex systems to find the exact permissions they require.

- Overly restrictive permissions: Minimal permissions can sometimes be too restrictive, preventing employees from performing their jobs effectively. This can lead to frustration and decreased productivity as employees may need to frequently request additional access. If a company has a more conservative IT and security culture, restrictive permissions can encourage information silos and discourage collaboration and knowledge sharing.

- Excessive permissions: On the other hand, granting overly broad permissions can create significant security risks with users having access to systems outside their area of responsibility and even having default access to critical systems that they never touch. This can result in broader attack surfaces and higher chances of data breaches.

- Permission bottlenecks: If permissioning is not at least somewhat automated or streamlined, employees may need to wait hours, days or even weeks to get access to the tools or systems they need to do their jobs. Disruptions in IT workflows or staffing problems can stretch queues out further. In many IT service systems, employees need to fill out specific requests or tickets to gain access, which must undergo human review. This always slows down operations, reduces productivity and hurts employee morale.

- Permission complexity: Configuring and maintaining permissions and controls is far more complicated in modern cloud computing environments that have many more operational systems. The microservices movement has splintered applications into numerous services, each with an API and each requiring privilege. To deal with this complexity, many organizations rely on service meshes, which add another management layer and additional complexity. Multicloud and hybrid architectures require permissions not only across systems, but also across clouds. The fastest-growing area of permissioning is machine-to-machine communications, which introduces additional protocols. All of these lead to permissioning drift and least privilege problems.

- Dynamic roles and permissions: In environments where roles and responsibilities frequently change, maintaining appropriate levels of access can be difficult. Temporary permissions may be necessary, but these can also introduce risks if not managed properly. Traditional RBAC systems enable privileges at the group level, based on directory services. But in modern cross-functional teams and matrix-style organizations, employees defy neat categorization and may cross groups in performing integrated work. Also, work today often involves people across multiple enterprises, which may warrant a federated, tiered access structure limited to a project and capabilities within a project.
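The tension between group-level grants and actual usage can be made concrete with a small sketch. It assumes a hypothetical group-to-permission mapping (`GROUP_PERMISSIONS`) and a hypothetical set of permissions observed in audit logs; none of these names come from a real directory service. It shows how a person who spans two groups inherits the union of both permission sets, and how comparing that union with observed usage exposes excess privilege.

```python
from dataclasses import dataclass, field


@dataclass
class Principal:
    name: str
    groups: set[str] = field(default_factory=set)


# Hypothetical mapping, standing in for what a directory-backed RBAC system holds.
GROUP_PERMISSIONS: dict[str, set[str]] = {
    "billing-eng": {"billing-api:read", "billing-api:deploy", "billing-db:read"},
    "support": {"crm:read", "crm:write"},
}


def granted_permissions(principal: Principal) -> set[str]:
    """Union of the permissions of every group the principal belongs to."""
    granted: set[str] = set()
    for group in principal.groups:
        granted |= GROUP_PERMISSIONS.get(group, set())
    return granted


def unused_permissions(principal: Principal, used: set[str]) -> set[str]:
    """Permissions that are granted but were never exercised in the observed window."""
    return granted_permissions(principal) - used


# A cross-functional employee who sits in two groups.
alice = Principal("alice", {"billing-eng", "support"})
# Hypothetical usage extracted from audit logs.
observed = {"billing-api:read", "billing-api:deploy"}

excess = sorted(unused_permissions(alice, observed))
print(excess)  # ['billing-db:read', 'crm:read', 'crm:write']
```

Everything in `excess` is standing privilege that the group model grants by default, which is the kind of drift the list above describes.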

Dynamic Least Privilege with LLMs

A handful of companies are experimenting with deploying LLMs to deliver an entirely new approach to least privilege that is more probabilistic and contextual. The logic makes sense. LLMs are probabilistic decision machines. They function best when there are relatively limited choices and decisions that are relatively easy to reinforce (either autonomously or with human input). Compared to, say, predicting how a protein will fold, deciding whether an access request is legitimate is relatively straightforward.

How might this work? An LLM would be fine-tuned on a large volume of IT access control decision logs that are correlated with additional information about requesters. The system could also use retrieval-augmented generation (RAG) based on data embeddings of the most recent system and access inventory to increase accuracy. Like code, access control involves decisions with limited surface area: yes, no, level, duration, permitted connection type. When LLMs are used for access control, operators could keep a human in the decision loop for a training period until they are confident that the system handles straightforward cases well. All edge cases could still be routed to humans for privilege decisions.
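One way to picture the limited decision surface and the human-in-the-loop period is the sketch below. The `AccessDecision` fields mirror the list in the paragraph above; the `confidence` score, the `threshold` value and the `shadow_mode` flag are assumptions made for illustration and do not describe any vendor's system. No model is called here; the sketch only covers what happens to a decision once a model has produced it.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"
    DENY = "deny"


@dataclass(frozen=True)
class AccessDecision:
    verdict: Verdict
    level: str             # e.g. "read" or "write"
    duration_minutes: int
    connection_type: str   # e.g. "vpn" or "bastion"
    confidence: float      # hypothetical model-reported score in [0, 1]


def route(decision: AccessDecision, threshold: float = 0.9, shadow_mode: bool = True) -> str:
    """Return who acts on the decision: 'human' or 'auto'."""
    if shadow_mode:
        # Training period: a human reviews every decision, including clear ones.
        return "human"
    if decision.confidence < threshold:
        # Edge case: the model is unsure, so a human decides.
        return "human"
    return "auto"


clear = AccessDecision(Verdict.ALLOW, "read", 30, "vpn", 0.97)
edge = AccessDecision(Verdict.ALLOW, "write", 240, "bastion", 0.62)

print(route(clear))                     # human (still in the training period)
print(route(clear, shadow_mode=False))  # auto
print(route(edge, shadow_mode=False))   # human
```

Whether a model's self-reported confidence is reliable enough to gate automation is an open question; in practice the threshold would need calibrating against reviewed decisions.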

In this new model, least privilege can function at the level of the request rather than the system. A new generation of generative AI systems trained on IT processes can explore old least privilege approaches in intriguing ways. Privilege can be dynamic down to the second and be corroborated by examinations of system records. This new approach to least privilege will finally make it possible to complete the zero trust circle by not only verifying identity but also providing least privilege access and authorization that is applied on a per-request or even a per-transaction basis.

Under this scenario, an engineer seeking to make a change to a specific API might only gain access to the API’s governance and code for a limited window until the change is pushed into the CI/CD system and then lose access after tests are completed. If the system later starts throwing errors, the engineer might regain access until a fix is pushed. Or a customer service rep might request to share access to an account with a member of the sales team for a specific purpose and be limited to viewing records for a specific period, and only on specific product licenses or training materials.
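The engineer scenario amounts to a grant that carries its own expiry and can be withdrawn early. The following is a minimal in-memory sketch under that assumption; `Grant`, `GrantStore` and the resource name `orders-api` are hypothetical, and a production system would persist grants and hook revocation to CI/CD events.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Grant:
    principal: str
    resource: str
    action: str
    expires_at: datetime


class GrantStore:
    """In-memory store of time-boxed grants (illustrative only)."""

    def __init__(self) -> None:
        self._grants: list[Grant] = []

    def issue(self, principal: str, resource: str, action: str,
              ttl: timedelta, now: datetime) -> Grant:
        grant = Grant(principal, resource, action, now + ttl)
        self._grants.append(grant)
        return grant

    def revoke(self, principal: str, resource: str) -> None:
        # Called, for example, once the change has passed its tests.
        self._grants = [
            g for g in self._grants
            if not (g.principal == principal and g.resource == resource)
        ]

    def is_allowed(self, principal: str, resource: str, action: str,
                   now: datetime) -> bool:
        # Evaluated on every request, so expiry takes effect immediately.
        return any(
            g.principal == principal and g.resource == resource
            and g.action == action and now < g.expires_at
            for g in self._grants
        )


start = datetime(2024, 8, 1, 13, 30, tzinfo=timezone.utc)
store = GrantStore()
store.issue("engineer", "orders-api", "write", ttl=timedelta(hours=2), now=start)

print(store.is_allowed("engineer", "orders-api", "write", start + timedelta(hours=1)))  # True
print(store.is_allowed("engineer", "orders-api", "write", start + timedelta(hours=3)))  # False

store.revoke("engineer", "orders-api")  # tests completed, access withdrawn early
print(store.is_allowed("engineer", "orders-api", "write", start + timedelta(hours=1)))  # False
```

Passing `now` explicitly keeps the check deterministic and easy to test; a real service would read the clock at request time.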

Sound like science fiction? OpenAI is already putting some of these practices in place, as Matt Knight, head of security, described on a recent podcast.

“Imagine if you’re a developer and you need some narrowly scoped role to make a change to a service. But rather than going and trying to find the right role, you’re just going to ask for broad administrative access to the entire subscription or tenant,” said Knight, who describes this as the “easy button.”

According to Knight, LLMs efficiently match users and the actions they want to take to the correct internal resources and permissioning levels they need. “We’ve done this in a way that constrains them that if the model gets it wrong, there’s no impact. There’s still a human review,” Knight explained.
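The matching step Knight describes can be illustrated with a deliberately simple, deterministic stand-in: given the actions a developer needs, pick the role with the fewest permissions that still covers them, and always flag the proposal for human review. This is not OpenAI's implementation; the role catalog `ROLES` and the function names are hypothetical, and an LLM-based system would derive the needed actions from a natural language request rather than take them as a set.

```python
from typing import Optional

# Hypothetical role catalog; "tenant-admin" is the "easy button" role.
ROLES: dict[str, frozenset[str]] = {
    "tenant-admin": frozenset({"service:deploy", "service:read", "db:admin", "iam:write"}),
    "service-deployer": frozenset({"service:deploy", "service:read"}),
    "service-reader": frozenset({"service:read"}),
}


def narrowest_role(needed: set[str]) -> Optional[str]:
    """Return the role with the fewest permissions that covers every needed action."""
    candidates = [
        (len(perms), name) for name, perms in ROLES.items() if needed <= perms
    ]
    if not candidates:
        return None
    # Tuples sort by size first, then by name, so ties resolve deterministically.
    return min(candidates)[1]


def propose(needed: set[str]) -> dict:
    """Build a proposal that a human reviewer approves or rejects."""
    return {"role": narrowest_role(needed), "requires_human_review": True}


print(propose({"service:deploy"}))
# {'role': 'service-deployer', 'requires_human_review': True}
```

Because a reviewer still approves every proposal, a wrong suggestion costs a rejected request rather than an over-privileged grant.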

LLMs Will Make Least Privilege Faster and More Effective

Ultimately, this approach will not only improve efficiency and give employees what they want, but also improve security. While attackers will certainly try to game systems, the pattern-matching abilities of LLMs swamp those of human operators.

Deciding if a privilege is warranted is an act of pattern matching and predicting that the request is from a valid user and for a valid purpose. The LLM will be able to process a broader window of context while still being limited to specific actions. This will also allow employees to make natural language requests and potentially have the LLM suggest better approaches to privileging and collaboration.

Humans must remain involved on edge cases to provide judgment and intuition, but moving least privilege from deterministic to probabilistic decision architectures will reduce friction for all parties involved while improving decision-making.
