Baoyue Reading Guide Summary

1. Keywords: Google, Secure AI, CoSAI, Security Framework, AI risk

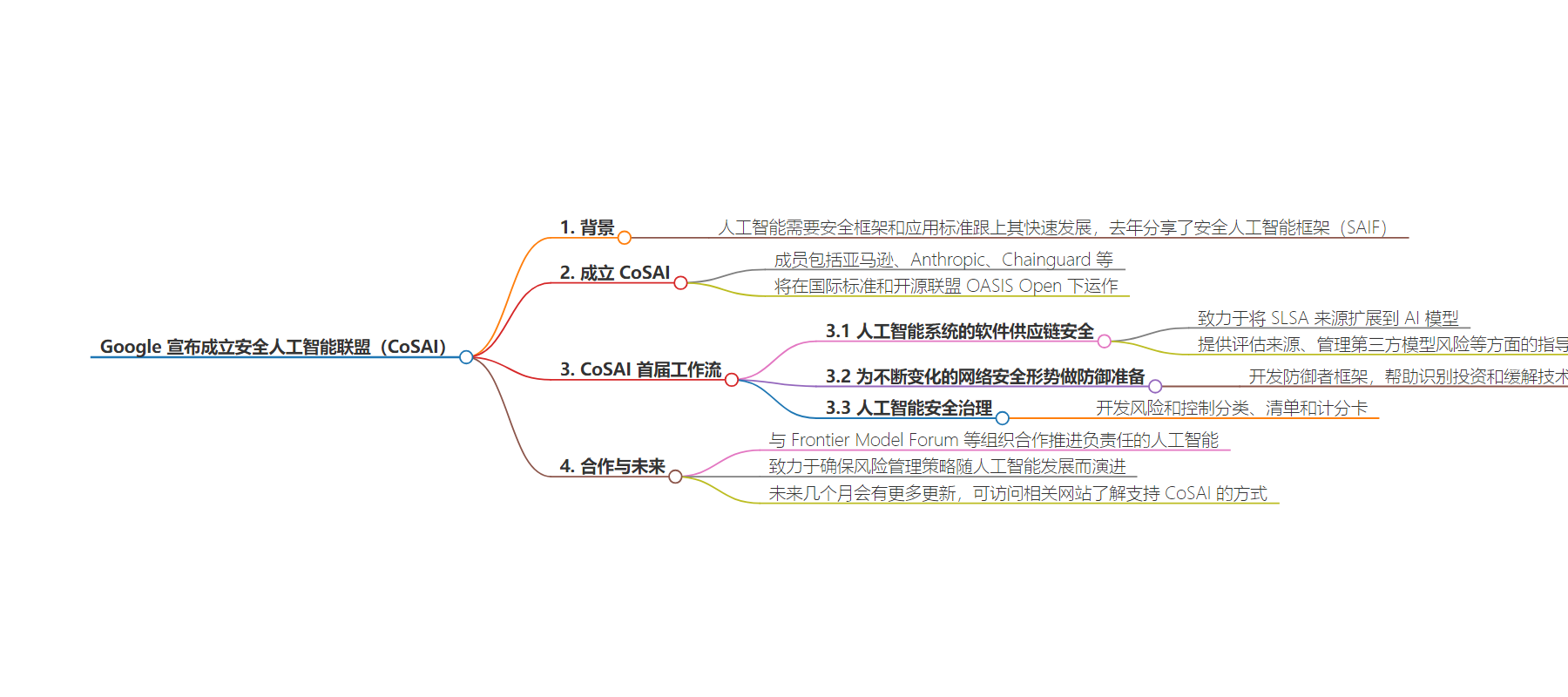

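2. Summary: Google announced the formation of the Coalition for Secure AI (CoSAI), which aims to advance comprehensive security measures for AI. The post introduces the coalition's members and its first three areas of focus (software supply chain security for AI systems, preparing cybersecurity defenders, and AI security governance) and says more progress is expected in the future.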

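3. Main points:

- Last year, Google shared the Secure AI Framework (SAIF)

- This year, at the Aspen Security Forum, it announced the Coalition for Secure AI (CoSAI)

- Members include Amazon, Anthropic and others

- The coalition will operate under OASIS Open

- CoSAI's inaugural workstreams

  - Software supply chain security for AI systems: providing guidance on evaluating provenance and related assessments

  - Preparing cybersecurity defenders: developing a defender's framework

  - AI security governance: developing a taxonomy of risks and related resources

- CoSAI will collaborate with other organizations to advance responsible AI

- Google says it is committed to ensuring that AI risk management strategies keep evolving, and expects more CoSAI updates in the future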

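Article URL: https://blog.google/technology/safety-security/google-coalition-for-secure-ai/

Source: blog.google

Author: Heather Adkins

Published: 2024/7/18 17:57

Language: English

Word count: 563

Estimated reading time: 3 minutes

Score: 84

Tags: AI security, Coalition for Secure AI, software supply chain security, cybersecurity defense, AI security governance

The original article follows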

AI needs a security framework and applied standards that can keep pace with its rapid growth. That’s why last year we shared the Secure AI Framework (SAIF), knowing that it was just the first step. Of course, operationalizing any industry framework requires close collaboration with others, and above all a forum to make that happen.

Today at the Aspen Security Forum, alongside our industry peers, we’re introducing the Coalition for Secure AI (CoSAI). We’ve been working to pull this coalition together over the past year, in order to advance comprehensive security measures for addressing the unique risks that come with AI, for both issues that arise in real time and those over the horizon.

CoSAI includes founding members Amazon, Anthropic, Chainguard, Cisco, Cohere, GenLab, IBM, Intel, Microsoft, NVIDIA, OpenAI, PayPal and Wiz, and it will be housed under OASIS Open, the international standards and open source consortium.

Introducing CoSAI’s inaugural workstreams

As individuals, developers and companies continue their work to adopt common security standards and best practices, CoSAI will support this collective investment in AI security. Today, we’re also sharing the first three areas of focus the coalition will tackle in collaboration with industry and academia:

- Software Supply Chain Security for AI systems: Google has continued to work toward extending SLSA Provenance to AI models to help identify when AI software is secure by understanding how it was created and handled throughout the software supply chain. This workstream will aim to improve AI security by providing guidance on evaluating provenance, managing third-party model risks, and assessing full AI application provenance by expanding upon the existing efforts of SSDF and SLSA security principles for AI and classical software.

- Preparing defenders for a changing cybersecurity landscape: When handling day-to-day AI governance, security practitioners don’t have a simple path to navigate the complexity of security concerns. This workstream will develop a defender’s framework to help defenders identify investments and mitigation techniques to address the security impact of AI use. The framework will scale mitigation strategies with the emergence of offensive cybersecurity advancements in AI models.

- AI security governance: Governance around AI security issues requires a new set of resources and an understanding of the unique aspects of AI security. To help, CoSAI will develop a taxonomy of risks and controls, a checklist, and a scorecard to guide practitioners in readiness assessments, management, monitoring and reporting of the security of their AI products.
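To make the first workstream's idea of "evaluating provenance" more concrete, here is a minimal sketch of one small piece of it: checking that a model file's SHA-256 digest matches a subject listed in a provenance statement. It assumes the statement follows the in-toto Statement layout that SLSA provenance uses (`subject`, `digest`, `predicateType`). The file name `model.bin`, the helper names and the empty `predicate` are hypothetical, and nothing here is CoSAI or Google guidance. Real verification would also need to check the attestation's signature and the builder identity, which this sketch leaves out.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path):
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_provenance(artifact, statement):
    """Check that the artifact's digest appears among the statement's subjects.

    Digest comparison only: no signature or builder checks are performed.
    """
    actual = sha256_of(artifact)
    return any(
        subject.get("digest", {}).get("sha256") == actual
        for subject in statement.get("subject", [])
    )


def demo():
    """Build a toy statement for a temp file, then tamper with the file."""
    with tempfile.TemporaryDirectory() as tmp:
        model = Path(tmp) / "model.bin"  # hypothetical model artifact
        model.write_bytes(b"example model weights")
        statement = {
            "_type": "https://in-toto.io/Statement/v1",
            "subject": [
                {"name": "model.bin", "digest": {"sha256": sha256_of(model)}}
            ],
            "predicateType": "https://slsa.dev/provenance/v1",
            "predicate": {},  # build details omitted in this sketch
        }
        ok_before = matches_provenance(model, statement)
        model.write_bytes(b"tampered weights")  # simulate modification
        ok_after = matches_provenance(model, statement)
        return ok_before, ok_after
```

Calling `demo()` returns `(True, False)`: the untouched file matches its recorded digest, and the modified file no longer does.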

Additionally, CoSAI will collaborate with organizations such as Frontier Model Forum, Partnership on AI, Open Source Security Foundation and MLCommons to advance responsible AI.

What’s next

As AI advances, we’re committed to ensuring effective risk management strategies evolve along with it. We’re encouraged by the industry support we’ve seen over the past year for making AI safe and secure. We’re even more encouraged by the action we’re seeing from developers, experts and companies big and small to help organizations securely implement, train and use AI.

AI developers need — and end users deserve — a framework for AI security that meets the moment and responsibly captures the opportunity in front of us. CoSAI is the next step in that journey and we can expect more updates in the coming months. To learn how you can support CoSAI, you can visit coalitionforsecureai.org. In the meantime, you can visit our Secure AI Framework page to learn more about Google’s AI security work.