包阅导读总结

1. 关键词:

– CrowdStrike 灾难

– 软件问题

– 质量保证

– 灾难恢复

– 监测与响应

2. 总结:

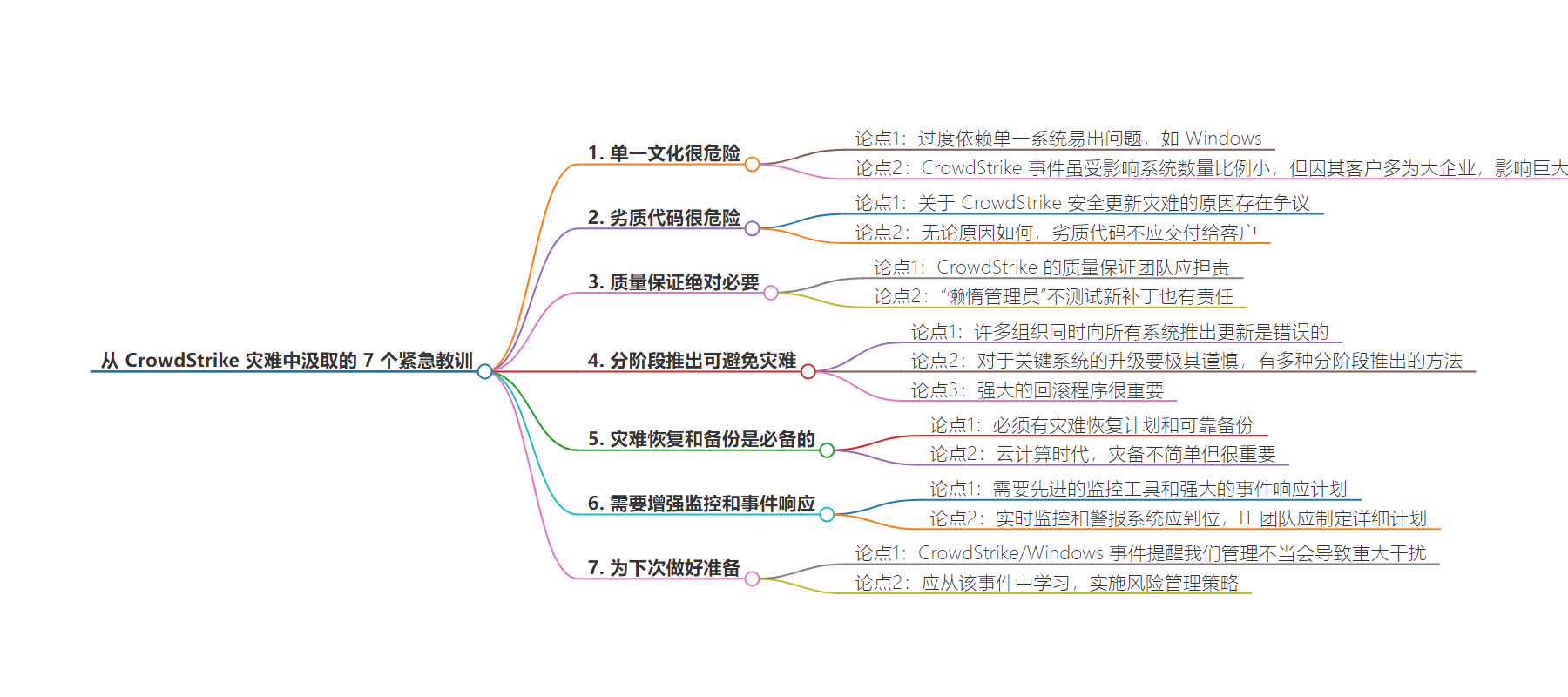

本文主要探讨了 CrowdStrike 灾难带来的教训,包括依赖单一系统的危险、不良代码的危害、质量保证的必要性、分阶段部署的重要性、灾难恢复和备份的必备性、增强监测和响应能力以及为下次类似事件做好准备等。

3. 主要内容:

– 引言

– 作者未受 CrowdStrike 崩溃直接影响,但其他人受到间接影响。

– 重要教训

– 单一系统依赖危险

– 以历史事件和 Windows 为例说明。

– 不良代码危险

– 对 CrowdStrike 安全更新的错误原因存在争议。

– 质量保证必要

– 质疑 CrowdStrike 质量保证团队,也指出管理员应测试新补丁。

– 分阶段部署避免灾难

– 指出同时全面更新系统是错误,介绍多种分阶段部署方式和回滚程序的重要性。

– 灾难恢复和备份必备

– 强调要有灾难恢复计划和可靠备份。

– 增强监测和响应

– 需先进监测工具和强大响应计划。

– 为下次做好准备

– 强调从本次事件中学习,做好风险管理。

思维导图:

文章地址:https://thenewstack.io/7-urgent-lessons-from-the-crowdstrike-disaster/

文章来源:thenewstack.io

作者:Steven J. Vaughan-Nichols

发布时间:2024/7/23 19:28

语言:英文

总字数:1487字

预计阅读时间:6分钟

评分:88分

标签:CrowdStrike,软件安全,质量保证,灾难恢复,风险管理

以下为原文内容

本内容来源于用户推荐转载,旨在分享知识与观点,如有侵权请联系删除 联系邮箱 media@ilingban.com

Correction: This article has been changed to correct the spelling of Antony Falco’s first name.

Sitting here on my Linux desktop, with my Linux servers humming away in the background, the CrowdStrike crash didn’t affect me directly. Like pretty much everyone else on the planet, indirectly, it was another story.

Work buddies were stuck in airports. Colleagues, 48 hours after the incident, were still repairing one defunct Windows system after another, and friends had to use cash to buy groceries.

None of this had to happen.

“This is a reminder that we live in an increasingly digital world in which software underpins nearly every facet of our lives — from transportation and emergency services to banking, retail and even food services,” noted Jason Schmitt, general manager of the Synopsys Software Integrity Group in a statement released to the news media. “Software problems can lead to severe business problems — and, in some cases, problems that impact many of the necessities consumers take for granted.”

Let me repeat: None of this. Let me count the lessons.

1. Monocultures Are Dangerous.

Whether it’s potatoes in Ireland during the Great Famine, which brought my ancestors to the U.S., cotton in the American South before boll weevils showed up, or Windows, anytime everyone relies on a single system, you’re asking for trouble.

By Microsoft’s low count, only 8.5 million Windows devices, or less than one percent of all Windows machines, were affected. But those numbers don’t tell the whole story.

By the count of 6sense.com, the business data analysis company, CrowdStrike is the No. 1 business endpoint security company with over 3,500 customers. That may sound small, but it includes one in four companies that use endpoint security. These tend to be major businesses. So, while small in terms of the sheer number of systems stuck in endless reboots, the effects were massive.

“The scale of this outage highlights the risks associated with over-reliance on a single system or provider,” said Mark Boost, CEO of cloud computing company Civo, in a statement released to the news media. “It’s a sobering reminder that size and reputation do not guarantee invulnerability to significant technical issues or security breaches. Even the largest and most established companies must be vigilant, continuously updating and securing their systems.”

2. Bad Code Is Dangerous Code.

According to one popular theory proposed on X by Evis Drenova, CEO of NeoSync, a developer tool company,the root cause of the disastrous security update to its Falcon Sensor program was a null pointer error in its C++ code. CrowdStrike appears to deny this.

Tavis Ormandy, the well-known Google vulnerability researcher also disagrees via an X tweet. Ormandy and Patrick Wardle, creator of the Mac security website and tool suite Objective-See, who also weighed in on X, suspect the blame goes to a logic error.

Eventually, we’ll find out exactly what went wrong, but there can be no question whatsoever that this lousy code should never, ever should have been shipped to a customer.

3. Quality Assurance Is Absolutely Necessary.

This problem started at CrowdStrike. How the company’s quality assurance (QA) team ever let this update out the door is a question that will likely lead to numerous people being fired soon.

They’re not the only ones who should get the blame for this step towards disaster, though.

In a presentation at Open Source Summit North America in Seattle this past April, Jack Aboutboul, senior project manager at the Microsoft Linux Platforms Group, discussed the “lazy sysadmin” problem. The stereotypical lazy admin installs software, turns on automatic updates and deals with the latest urgent problem. That’s fine… until one of the updates crashes the system.

They should be testing each new patch when it comes in. In his presentation, Aboutboul was talking about Linux distro updates, but the same idea applies to all mission-critical software.

As Konstantin Klyagin, a founder of Redwerk and QAwerk, both software development and QA agencies, pointed out in a statement released to the news media, “Automated testing ensures that even minor changes don’t introduce new bugs. This is particularly important for large-scale updates, like the one from CrowdStrike, where manual testing alone would be insufficient.”

Who doesn’t do this!? It would appear at least some companies still don’t.

Did that many organizations really fail this basic step? Some suggest that CrowdStrike is to blame because this security data patch “was a channel update that bypassed client’s staging controls and was rolled out to everyone regardless” of whether they wanted it or not.

By bypassing the client’s rollout controls, far more companies were damaged. This strikes me as all too likely since so many businesses were smacked by this failure. Again, the problem remains: “Why would anyone let such a critical patch be deployed without question?”

4. Staged Rollouts Avoid Catastrophe.

A related production problem is that many organizations have simultaneously rolled out their updates to all their systems. This is such a basic blunder; it should never happen, but here we are.

Yes, there are arguments against staged rollouts — users can be confused when different teams are working with various versions. But when it comes to mission-critical systems where failure is unacceptable, you need to exercise extreme caution with any upgrade.

Besides, there are many ways to do a staged rollout. They include rolling updates, blue/green, canary, and A/B testing. Pick one. Make it work for your enterprise, just don’t put all your upgrades into one massive basket.

Besides, robust rollback procedures are essential to revert to a stable version if problems arise quickly. Wouldn’t you have liked just to hit a button and roll back to working systems? Tens of thousands of IT staffers must wish for that now.

5) Disaster Recovery and Backups Are Must-Haves.

This should go without saying, but you must have a disaster recovery plan and trustworthy backups.

“I’ve talked to several CISOs and CSOs who are considering triggering restore-from-backup protocols instead of manually booting each computer into safe mode, finding the offending CrowdStrike file, deleting it, and rebooting into normal Windows,” said Eric O’Neill, a public speaker and security expert in a press statement. “Companies that haven’t invested in rapid backup solutions are stuck in a Catch-22.”

Indeed, they are. True, in these days of cloud computing, disaster recovery, and backups aren’t as simple as they used to be. But they’re vitally important. And, in this case, old-school disaster recovery methods and backups would have been a major help.

6. You Need Enhanced Monitoring and Incident Response.

The outage’s global scale highlights the need for advanced monitoring tools and robust incident response plans. Real-time monitoring and alerting systems should be in place to catch issues as they occur. IT teams should develop detailed incident response plans with clear protocols for quick identification, isolation and resolution of issues. These plans should include root-cause analysis and post-incident reviews to improve response strategies continuously.

That’s easier said than done.

“Navigating the challenges of today’s digital era requires businesses to have proactive and practical strategies to mitigate outages and ensure resilience,” said Spencer Kimball, Cockroach Labs‘ CEO, and co-founder observed, in a statement released to the news media.

He added, “Outages are not a problem we’re going to solve completely. Cloud environments are only growing more complex and interconnected. This complexity at scale will continue to increase risk, particularly for businesses still in the initial stages of cloud adoption. Continuous monitoring and alerting are essential to detect and address issues before they escalate.”

Kimball’s thoughts were echoed by Antony Falco, vice president at Hydrolix, a company working on real-time query performance in an e-mail to The New Stack.

“The massive outage underscores the new reality companies face: Globally distributed software platforms that drive business today are a complex web of interdependencies, not all under any one actor’s control,” Falco said. “A modest mistake can literally grind global business to a halt.

“We need a new approach to observability — one that is real-time and can simplify the management of tremendous volumes of data streaming from myriad sources so events can be detected and mitigated before they spread.”

7. Get Ready Now for the Next Time.

The CrowdStrike/Windows incident is a stark reminder that even routine maintenance can lead to significant disruptions if not managed properly. It highlights the interconnected nature of modern IT systems and the far-reaching consequences of failures in widely used software.

By learning from this event and implementing robust risk management strategies, IT teams can better prepare for and mitigate the impact of similar incidents in the future.

We need to do better. We must do better. I’m old enough to have fought off the first major, widespread security problem, 1988’s Morris Worm. Then, technology problems only bothered those working in tech. We’ve gone long past those days.

YOUTUBE.COM/THENEWSTACK

Tech moves fast, don’t miss an episode. Subscribe to our YouTubechannel to stream all our podcasts, interviews, demos, and more.