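Baoyue Reading Guide Summary

1.

LLM-as-a-Judge, Grading Notes, Databricks Assistant, Domain Knowledge, Evaluation

2.

This article introduces a technique called Grading Notes for high-quality LLM-as-a-Judge evaluation in specialized domains. It has worked well in the development of Databricks Assistant, raising the alignment rate with human judgment. The article also points out the limitations of the study.

3.

– Background

  – Evaluating long-form LLM outputs quickly and accurately is critical for AI development, but common LLM-as-a-Judge methods have limitations, especially in specialized domains.

– The Grading Notes technique

  – Instead of relying on a fixed prompt, each question is annotated with a short "grading note" describing the desired attributes of its answer.

  – It is simple to implement, efficient for domain experts, and outperforms fixed prompts.

– Application in Databricks Assistant

  – An evaluation set was built by sampling about 200 Assistant use cases.

  – Evaluating with Grading Notes raised the alignment rate between LLM judges and human judgment.

– Limitations of the study

  – Overlapping personnel may introduce domain knowledge bias.

  – No alignment rate among human judges is available for comparison.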

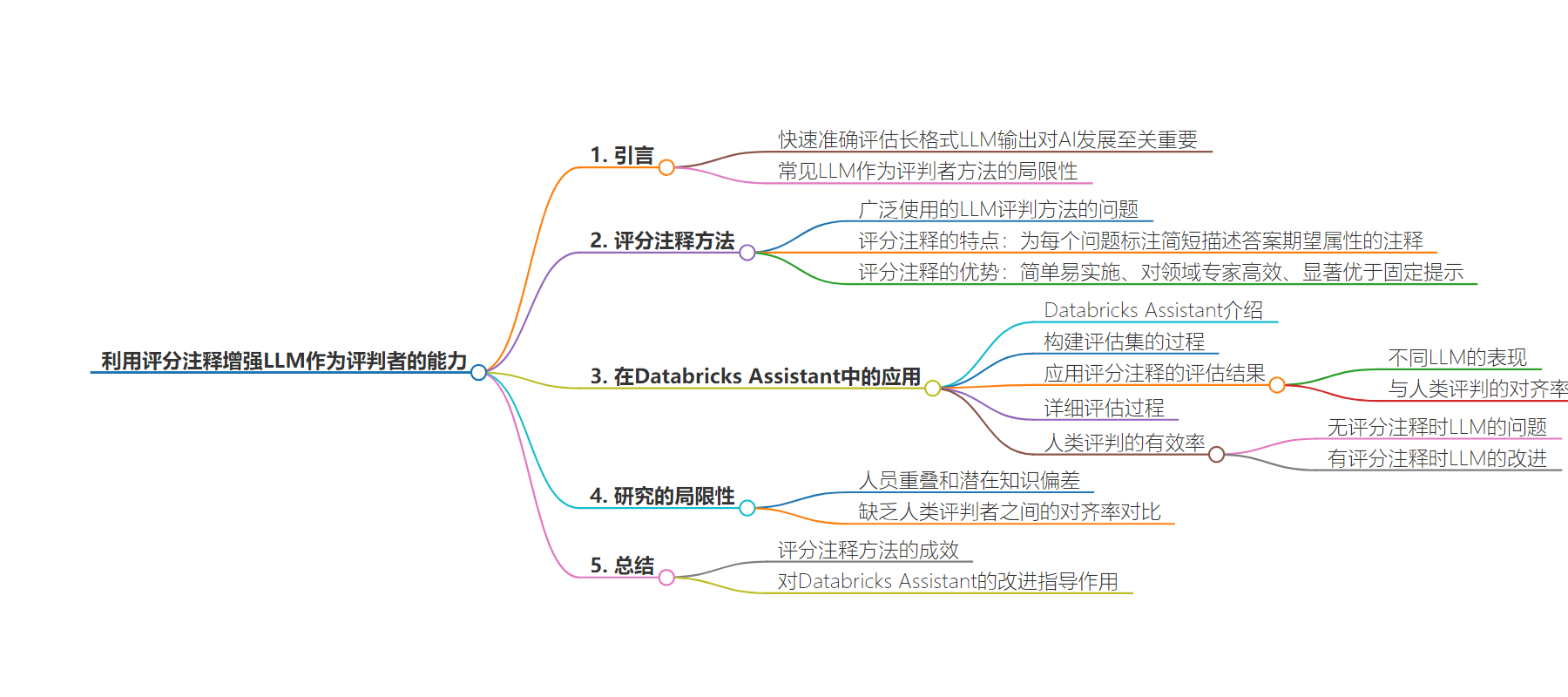

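Mind map:

Article URL: https://www.databricks.com/blog/enhancing-llm-as-a-judge-with-grading-notes

Source: databricks.com

Author: Databricks

Published: 2024/7/22 17:34

Language: English

Total word count: 1568

Estimated reading time: 7 minutes

Score: 87

Tags: LLM evaluation, grading notes, domain-specific AI, AI accuracy, AI consistency

The original article follows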


Evaluating long-form LLM outputs quickly and accurately is critical for rapid AI development. As a result, many developers wish to deploy LLM-as-a-judge methods that work without human ratings. However, common LLM-as-a-judge methods still have major limitations, especially in tasks requiring specialized domain knowledge. For example, coding on Databricks requires understanding APIs that are not well-represented in the LLMs’ training data. LLM judges that do not understand such a domain may simply prefer answers that sound fluent (e.g., 1, 2, 3).

In this post, we describe a simple technique called Grading Notes that we developed for high-quality LLM-as-a-judge evaluation in specialized domains. We have been using Grading Notes in our development of Databricks Assistant for the past year to produce high-quality signals for its custom, technical domain (helping developers with Databricks), thereby producing a high-quality AI system.

Grading Notes

Most widely used LLM-as-a-judge methods (e.g., 1, 2, 3, 4) rely on using a fixed prompt for the LLM judge over an entire dataset, which may ask the judge to reason step-by-step, score an answer on various criteria, or compare two answers. Unfortunately, these fixed-prompt methods all suffer when the LLM judge has limited reasoning ability in the target domain. Some methods also use “reference-guided grading,” where the LLM compares outputs to a gold reference answer for each question, but this requires humans to write detailed answers to all questions (expensive) and still fails when there are multiple valid ways to answer a question.

Instead, we found that a good alternative is to annotate a short “grading note” for each question that just describes the desired attributes of its answer. The goal of these per-question notes is not to cover comprehensive steps but to “spot-check” the key solution ingredients and allow ambiguity where needed. This can give an LLM judge enough domain knowledge to make good decisions, while still enabling scalable annotation of a test set by domain experts. Below are two examples of Grading Notes we wrote for questions to the Databricks Assistant:

| Assistant Input | Grading Note |
| --- | --- |
| How do I drop all tables in a Unity Catalog schema? | The response should contain steps to get all table names then drop each of them. Alternatively the response can suggest dropping the entire schema with risks explained. The response should not treat tables as views. |
| Fix the error in this code: df = ps.read_excel(file_path, sheet_name=0) … “ArrowTypeError: Expected bytes, got a ‘int’ object” | The response needs to consider that the particular error is likely triggered by read_excel reading an excel file with mixed format column (number and text). |

We found that this approach is simple to implement, is efficient for domain experts, and significantly outperforms fixed prompts.
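As a rough illustration of how little structure a grading note needs, the sketch below stores one note per question and turns it into a guideline sentence for the judge. The `EvalCase` class, its field names, and `render_guideline` are hypothetical names chosen for this example, not part of the Databricks flow; the sentence prefix reuses the wording of the judge prompt quoted later in this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvalCase:
    """One evaluation example: a user question plus its grading note."""
    question: str
    grading_note: str  # short description of the desired answer attributes


def render_guideline(case: EvalCase) -> str:
    """Turn a grading note into the guideline sentence shown to the judge."""
    note = case.grading_note.strip()
    if not note:
        raise ValueError("every question needs a non-empty grading note")
    # Lower-case the first letter so the note continues the prefix naturally.
    note = note[0].lower() + note[1:]
    return f"To be considered an effective solution, {note}"


cases = [
    EvalCase(
        question="How do I drop all tables in a Unity Catalog schema?",
        grading_note=(
            "The response should contain steps to get all table names then "
            "drop each of them. Alternatively the response can suggest "
            "dropping the entire schema with risks explained. The response "
            "should not treat tables as views."
        ),
    ),
]

guidelines = [render_guideline(c) for c in cases]
print(guidelines[0])
```

Keeping the note as free text, rather than a rubric with fixed fields, matches the intent described above: the note spot-checks a few key ingredients and leaves the rest of the answer open.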

Other per-question guidance efforts have been presented recently, but they rely on LLM generation of criteria (which can still lack key domain knowledge) or are formulated as instruction-following rather than answering real domain questions.

Applying Grading Notes in Databricks Assistant

Databricks Assistant is an LLM-powered feature that significantly increases user productivity in Notebooks, the SQL Editor, and other areas of Databricks. People use Assistant for diverse tasks such as code generation, explanation, error diagnosis, and how-tos. Under the hood, the Assistant is a compound AI system that takes the user request and searches for relevant context (e.g., related code, tables) to aid in answering context-specific questions.

To build an evaluation set, we sampled ~200 Assistant use cases from internal usage, each consisting of user questions and their complete run-time context. We initially tried evaluating responses to these questions using state-of-the-art LLMs, but found that their agreement with human ratings was too low to be trustworthy, especially given the technical and bespoke nature of the Assistant, i.e. the need to understand the Databricks platform and APIs, understand the context gathered from the user’s workspace, only generate code in our APIs, and so on.

Evaluation worked out much better using Grading Notes. Below are the results of applying Grading Notes for evaluating the Assistant. Here, we swap the LLM component in Assistant to demonstrate the quality signals we are able to extract with Grading Notes. We consider two recent and representative LLMs, one open and one closed-source: Llama3-70B and GPT-4o. To reduce self-preference bias, we use GPT-4 and GPT-4-Turbo as the judge LLMs.

| Assistant LLM | Judge Method | | | | |
| --- | --- | --- | --- | --- | --- |
| | Human | GPT-4 | GPT-4 + Grading Notes | GPT-4-Turbo | GPT-4-Turbo + Grading Notes |
| Positive Label Rate by Judge | | | | | |
| Llama3-70b | 71.9% | 96.9% | 73.1% | 83.1% | 65.6% |
| GPT-4o | 79.4% | 98.1% | 81.3% | 91.9% | 68.8% |
| Alignment Rate with Human Judge | | | | | |
| Llama3-70b | – | 74.7% | 96.3% | 76.3% | 91.3% |
| GPT-4o | – | 78.8% | 93.1% | 77.5% | 84.4% |

Let’s go into a bit more detail.

We annotated the Grading Notes for the whole set (a few days’ effort) and built a configurable flow that allows us to swap out Assistant components (e.g. LLM, prompt, retrieval) to test performance differences. The flow runs a configured Assistant implementation with <run-time context, user question> as input and produces a <response>. The entire <input, output, grading_note> tuple is then sent to a judge LLM for effectiveness assessment. Since Assistant tasks are highly diverse and difficult to calibrate to the same score scale, we extracted binary decisions (Yes/No) via function calling to enforce consistency.
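The flow described above can be sketched as a small loop with swappable components. This is a minimal sketch under stated assumptions, not the actual Databricks implementation: the `Example` class, the `Assistant` and `Judge` callable shapes, the `rationale` and `effective` keys, and the toy stand-ins are all hypothetical, and no real LLM is called. It also assumes that anything other than an explicit "Yes" counts as not effective.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Example:
    context: str       # run-time context gathered for the question
    question: str      # user question
    grading_note: str  # per-question note written by a domain expert


# Hypothetical component shapes: any callable of this form can be swapped in.
Assistant = Callable[[str, str], str]                   # (context, question) -> response
Judge = Callable[[str, str, str, str], Dict[str, str]]  # -> structured verdict


def to_binary(verdict: Dict[str, str]) -> bool:
    """Assumption: only an explicit "Yes" is effective; "No"/"Unsure" are not."""
    return verdict.get("effective") == "Yes"


def evaluate(examples: List[Example], assistant: Assistant, judge: Judge) -> float:
    """Run the assistant on every example and return the positive label rate."""
    if not examples:
        raise ValueError("evaluation set is empty")
    decisions = []
    for ex in examples:
        response = assistant(ex.context, ex.question)
        verdict = judge(ex.context, ex.question, response, ex.grading_note)
        decisions.append(to_binary(verdict))
    return sum(decisions) / len(decisions)


# Toy stand-ins so the sketch runs without any model behind it.
def toy_assistant(context: str, question: str) -> str:
    return "DROP SCHEMA my_schema CASCADE"


def toy_judge(context: str, question: str, response: str, note: str) -> Dict[str, str]:
    ok = "risk" in response.lower()  # crude check standing in for an LLM judge
    return {"rationale": "toy keyword check", "effective": "Yes" if ok else "No"}


examples = [
    Example(
        context="catalog: main",
        question="How do I drop all tables in a schema?",
        grading_note="May suggest dropping the schema, with risks explained.",
    )
]
rate = evaluate(examples, toy_assistant, toy_judge)
print(rate)
```

Because the assistant and judge are plain callables, changing the LLM, prompt, or retrieval step only means passing a different function to `evaluate`, while the evaluation set and grading notes stay fixed.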

For each Assistant LLM, we manually labeled the response effectiveness so that we can compute the alignment rate between the LLM judge and the human judge and use this as the main success measure of LLM judges (bottom part of the table). Note that in the normal development flow, once the measurement is established, we do not need to do this extra human labeling.

For the LLM-alone and LLM+Grading_Notes judges, we use the prompt below and also experimented with both a slightly-modified MT-bench prompt and few-shot prompt variants. For the MT-bench prompt, we sweep the score threshold to convert the produced score into binary decisions with maximum alignment rate. For the few-shot variant, we include one positive and one negative example in different orders. The variants of LLM-alone judges produced similar alignment rates with human judges (< 2% difference).
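The threshold sweep for score-based prompts can be illustrated as follows. This is a minimal sketch with made-up scores and labels; the function name `best_threshold` and the tie-breaking rule (lowest threshold wins) are assumptions for the example, not details from the study.

```python
from typing import List, Tuple


def best_threshold(scores: List[int], human: List[bool]) -> Tuple[int, float]:
    """Return (threshold, alignment) that maximizes agreement with human labels.

    A response is labeled effective when score >= threshold. On ties the
    lowest threshold is kept (an arbitrary choice for this sketch).
    """
    if len(scores) != len(human) or not scores:
        raise ValueError("scores and human labels must be non-empty and equal length")
    best_t, best_rate = min(scores), -1.0
    for t in sorted(set(scores)):
        predicted = [s >= t for s in scores]
        rate = sum(p == h for p, h in zip(predicted, human)) / len(human)
        if rate > best_rate:
            best_t, best_rate = t, rate
    return best_t, best_rate


# Made-up judge scores (1-10 scale) and human effectiveness labels.
scores = [9, 8, 7, 6, 4, 3]
human = [True, True, False, True, False, False]

threshold, rate = best_threshold(scores, human)
print(threshold, round(rate, 3))
```

Choosing the threshold on the same data used to report alignment gives the score-based judge its best possible number, so it serves as a generous comparison point for the fixed-prompt judges.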

... General Instructions ...

Consider the following conversation, which includes a user message asking for help on an issue with Databricks notebook work, notebook runtime context for the user message, and a response from an agent to be evaluated.

The user message is included below and is delimited using BEGIN_USER_MESSAGE and END_USER_MESSAGE...

BEGIN_USER_MESSAGE
{user_message}
...
{system_context}
...
{response}
END_RESPONSE

The response assessment guideline is included below and is delimited using BEGIN_GUIDELINE and END_GUIDELINE.

BEGIN_GUIDELINE
To be considered an effective solution, {response_guideline}
END_GUIDELINE

"type": "string",
"description": "Why the provided solution is effective or not effective in resolving the issue described by the user message."

"enum": ["Yes", "No", "Unsure"],
"description": "An assessment of whether or not the provided solution effectively resolves the issue described by the user message."

Alignment with Human Judge

The human-judged effective rate is 71.9% for Llama3-70b and 79.4% for GPT-4o. We use the alignment rate obtained by applying the majority label everywhere as the performance baseline: if a judge method simply rates every response as effective, it would align with the human judge 71.9% and 79.4% of the time, respectively.
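To make the baseline concrete, here is a minimal sketch of the two quantities involved, using a small made-up label set rather than the study's data. The function names are chosen for this example only.

```python
from typing import List


def alignment_rate(judge: List[bool], human: List[bool]) -> float:
    """Fraction of responses where the judge's label matches the human label."""
    if len(judge) != len(human) or not human:
        raise ValueError("label lists must be non-empty and of equal length")
    return sum(j == h for j, h in zip(judge, human)) / len(human)


def majority_baseline(human: List[bool]) -> float:
    """Alignment obtained by giving every response the majority human label."""
    if not human:
        raise ValueError("label list is empty")
    positive = sum(human) / len(human)
    return max(positive, 1 - positive)


# Made-up human labels: 6 of 8 responses judged effective.
human = [True, True, True, False, True, False, True, True]
lenient_judge = [True] * len(human)  # a judge that rates everything effective

print(alignment_rate(lenient_judge, human))
print(majority_baseline(human))
```

A judge that approves everything lands exactly on the baseline, which is why an alignment rate only slightly above the human positive rate says little about the judge's quality.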

When LLM-as-a-judge is used alone (without Grading Notes), its effective rate varies with the choice of LLM (and, to a smaller extent, with the choice of prompt). GPT-4 rates almost every response as effective, while GPT-4-Turbo is more conservative in general. This could be because GPT-4, while still powerful in reasoning, is behind the more recent models in updated knowledge. But neither judge LLM does significantly better than the baseline (i.e., majority label everywhere) when we look at the alignment rate with a human judge. Without Grading Notes, both judge LLMs overestimate the effectiveness by a significant margin, likely reflecting a gap in the domain knowledge needed to critique the responses.

With Grading Notes introducing brief domain knowledge, both judge LLMs showed significant improvement in the alignment rate with humans, especially GPT-4: with GPT-4 as the judge, the alignment rate increased to 96.3% for Llama3-70b responses and 93.1% for GPT-4o responses, which corresponds to an 85% and a 67.5% reduction in misalignment rate, respectively.
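The reduction figures follow from the GPT-4 columns of the table above: misalignment is one minus the alignment rate, and the reduction is measured relative to the judge without Grading Notes. A short check, with a helper name chosen for this example:

```python
def misalignment_reduction(before: float, after: float) -> float:
    """Relative drop in misalignment when alignment moves from before to after."""
    if not 0 <= before < 1 or not 0 <= after <= 1:
        raise ValueError("alignment rates must lie in [0, 1], with before < 1")
    return 1 - (1 - after) / (1 - before)


# GPT-4 as judge, without vs. with Grading Notes (values from the table).
llama3 = misalignment_reduction(0.747, 0.963)  # Llama3-70b responses
gpt4o = misalignment_reduction(0.788, 0.931)   # GPT-4o responses

print(f"{llama3:.1%}, {gpt4o:.1%}")
```

For Llama3-70b responses, misalignment falls from 25.3% to 3.7%, roughly an 85% reduction; for GPT-4o responses it falls from 21.2% to 6.9%, roughly 67.5%.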

Limitations of this study

In the ideal case, we want to have the human-judge process separated cleanly from the grading notes annotation process. Due to bandwidth limits, we have overlapping personnel and, more subtly, potential domain knowledge bias inherent in the group of engineers. Such bias could lead to an inflated alignment rate when a user question is ambiguous and a note favors a particular solution path. But this potential bias should also be mitigated by the brevity of Grading Notes: a note does not try to be comprehensive about the entire answer and only specifies a few critical attributes, which reduces the chance of forcing a specific path out of an ambiguous question. Another limitation of this study is that we took an iterative consensus-building process in the cross-annotation of Grading Notes and we do not have an alignment rate among human judges for comparison.

Wrapping Up

Grading Notes is a simple and effective method to enable the evaluation of domain-specific AI. Over the past year at Databricks, we’ve used this method to successfully guide many improvements to the Databricks Assistant, including deciding on the choice of LLM, tuning the prompts, and optimizing context retrieval. The method has shown good sensitivity and has produced reliable evaluation signals consistent with case studies and online engagements.

We would like to thank Sam Havens, Omar Khattab, Jonathan Frankle for providing feedback and Linqing Liu, Will Tipton, Jan van der Vegt for contributing to the method development.