包阅导读总结

1.

关键词:Postgres、DuckDB、Analytics、OLAP、Extensions

2.

总结:Postgres 在新数据工作负载和数据库工具方面受青睐,但在大数据集的 OLAP 分析上有不足。Crunchy Data 利用 Postgres 扩展和 DuckDB 开发新解决方案,解决了数据存储和查询问题,为用户提供 Postgres 原生的分析体验和诸多便利。

3.

主要内容:

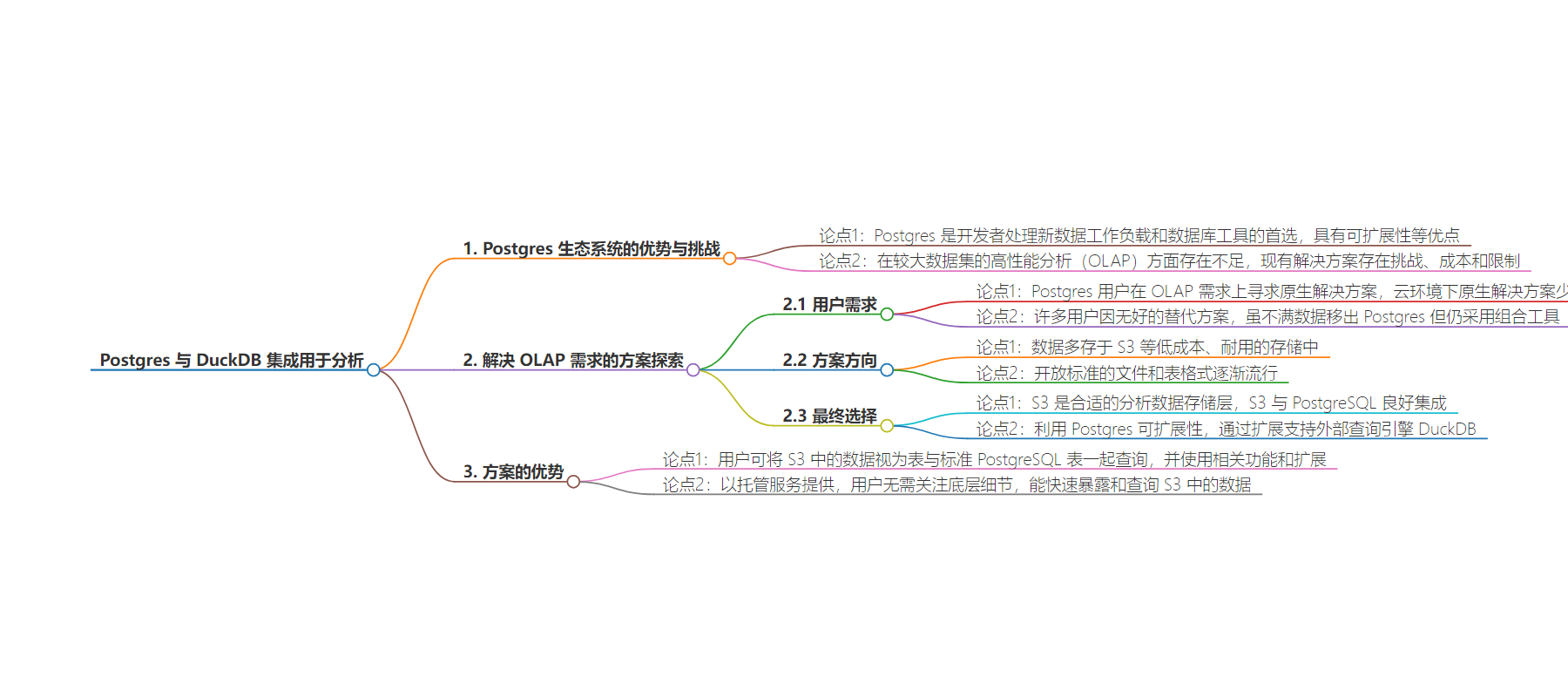

– 介绍 Postgres 生态系统受欢迎,但在 OLAP 分析上表现不佳

– 虽有多种解决方案,但存在挑战、成本和限制

– 为满足 OLAP 需求开发新方案

– 考虑用户需求和数据存储趋势

– 明确 S3 为合适存储层,需良好的 S3 与 PostgreSQL 集成

– Postgres 扩展性再次发挥优势

– 利用扩展支持外部查询引擎,以 DuckDB 为例

– 介绍 DuckDB 及其与 Postgres 集成方式

– Postgres 原生分析解决方案的好处

– 用户可像使用标准 Postgres 表一样查询 S3 中的数据,并享受多种功能

– 作为管理服务,用户无需操心技术细节,Crunchy Bridge 提供支持

思维导图:

文章地址:https://thenewstack.io/unleashing-postgres-for-analytics-with-duckdb-integration/

文章来源:thenewstack.io

作者:Paul Laurence

发布时间:2024/7/23 20:06

语言:英文

总字数:1080字

预计阅读时间:5分钟

评分:90分

标签:PostgreSQL,DuckDB,分析处理,OLAP,数据存储

以下为原文内容

本内容来源于用户推荐转载,旨在分享知识与观点,如有侵权请联系删除 联系邮箱 media@ilingban.com

The Postgres ecosystem is winning, making it a preferred choice for developers for new data workloads and database tools. Much has been written on “why” Postgres is winning, and there are a number of good reasons — including its extensibility — enabling Postgres to extend to new use cases as requirements emerge, demonstrated by pg vector‘s ability to address a variety of AI requirements.

Despite its popularity for OLTP workloads, Postgres has remained a stretch for performant analytics on larger data sets (OLAP). While there are a number of solutions that modify core Postgres to address OLAP workloads or that use portions of Postgres, each comes with inherent challenges, costs, and limitations associated with using a fork of Postgres.

With Postgres users increasingly looking for Postgres-native solutions to OLAP requirements, trends towards low-cost storage as the center of gravity for data, and the emergence of new standards for data formats, Crunchy Data set out to develop a new solution to extend Postgres to meet these needs. Our solution leverages the known power of Postgres extensions, with an emerging winner in embedded fast query engines — DuckDB.

Love Postgres, Need Analytics

We help a wide variety of organizations deploy Postgres. From this vantage point, users successfully deploy Postgres for various use cases. In fact, when we are questioned about the use cases Postgres addresses, we have a hard time answering because the answer is that we have really seen them all.

But that is in an OLTP context. OLAP has historically been “the other workload” where Postgres didn’t compete. Yes, there are some solutions out there, but in particular, as users move to the cloud and cloud native approaches, there are not many Postgres-native solutions.

We have seen this most pointedly firsthand: Many Crunchy Bridge customers — who love Postgres — were using Postgres for the OLTP workloads but using a combination of tools to replicate their data to various third-party analytic platforms for analytic queries. In talking to these customers about their requirements, they weren’t happy with moving the data out of Postgres but did not have a great alternative. Could we build one?

Processing Data Where It Lives, Analytics on Data in S3

To build a solution for Postgres-native analytics — it was clear that we needed a solution that addressed data where it lives and in the modern formats organizations use. Two significant trends shaped our direction:

- Data increasingly lives in S3. S3 — and similar cloud storage repositories — have exploded in adoption as low-cost, durable storage. They can scale to infinity and are accessible from anywhere. Users can expose their data to many different engines at the same time.

- Open standards for file and table formats are the emerging winners. While many data lakes remain “CSV files in S3,” analytics-optimized formats like Parquet and Iceberg are quickly gaining popularity.

Of course, the prospect of separating the query engine (compute) from the storage (data) has given rise to a number of database projects. This enables storing data in one place at a low cost while efficiently querying the data without moving it to the query engine.

Ultimately, we concluded that S3 is (with caching) the appropriate storage layer for analytical data, and solid S3 integration into PostgreSQL provides the means to address these use cases.

Postgres Extensibility Once Again Makes It a Winner

Postgres extensions enable Postgres to address new use cases as requirements emerge. Postgres becomes the leading database for managing spatial data by loading the PostGIS extension. Postgres can support advanced sharding with Citus or transform it into a vector database with pgvector. Every Postgres user has their favorite extension, and many likely use a collection of extensions without considering the power of the Postgres extension framework.

Extending Postgres to support an external query engine fully takes advantage of this extension capability. Using Postgres ‘hooks, we can transparently break down a query plan into parts that can be “pushed down” into this external separate query engine, enabling us to take advantage of the benefits of a specialized engine for these particular workloads. In this specific case, we use DuckDB, an emerging winner in the embedded query engine space.

For those who are less familiar, DuckDB was developed by Hannes Mühleisen and Mark Raasveldt at the Centrum Wiskunde & Informatica (CWI) and actively developed by DuckDB Labs with many community contributions. DuckDB has become a leading embeddable query engine, using modern OLAP techniques to run fast queries against Parquet and files in object storage. Parquet files support compressed columnar data, making that format well-suited for archiving historical time series rows out of transactional Postgres and into an efficient form for long-term OLAP use.

That means we can integrate DuckDB with Postgres by using Postgres extensions, recognizing the parts of the query plan that can be pushed down into DuckDB for vectorized, parallel execution, and constructing the appropriate SQL queries to pass to DuckDB. Again, we use a combination of PostgreSQL hooks to achieve that for filters, aggregates, joins, and more complex query structures. In some cases, the entire query can be pushed down; in others, we merge different subplans.

Benefits of a Postgres-Native Solution

But why Postgres for analytics? As Postgres adoption grows and users of all types learn Postgres for application development and OLTP requirements, providing a Postgres-native experience for these analytical queries has a number of benefits.

As a user, your data in S3 will appear as tables that you can query along with all your standard PostgreSQL tables and use in combination with the general simplicity of other PostgreSQL features and extensions, including:

- access controls

- views

- materialized views

- query performance insights with pg_stat_statements

- stored procedures with PL/pgSQL ()

- periodic jobs with pg_cron

- long-lived NVMe and in-memory caches

- dashboard tools

If extending Postgres to support an external query engine for analytic queries sounds a bit complicated, that is probably true. That said, by offering the result as a managed service, users can benefit from the power of this solution without having to worry about low-level details like hooks or query pushdowns. From a user perspective, all you see is the ability to quickly expose your data living in S3 to be queried using standard Postgres. Crunchy Bridge provides the full benefit of managed production-ready Postgres with a developer-focused UX, now with extended capabilities to support fast analytic queries powered by DuckDB.

YOUTUBE.COM/THENEWSTACK

Tech moves fast, don’t miss an episode. Subscribe to our YouTubechannel to stream all our podcasts, interviews, demos, and more.