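Baoyue Reading Summary

1. Keywords: Anaconda, Python, AI operating system, LLMs, legal framework

2. Summary: Anaconda is working to remove obstacles to generative AI innovation, including Python's slow speed, and is launching products to address them. It is also building an AI operating system that fuses data and code. The article further argues that AI needs a new legal framework to handle complex concepts of ownership and usage.

3. Main points:

– Anaconda is working to remove obstacles to generative AI innovation

– The company's experience in data-intensive, high-performance computing positions it to help build new technologies and spread best practices

– Related products

– Anaconda Toolbox enhances the use of Python in Excel; general availability is expected in Q3 2024

– High-Performance Python supports high-speed computation and data processing, with the aim of improving Python's performance

– AI Navigator gives users access to many large language models and helps them manage pre-trained models and data

– Legal framework

– The definition of open source AI is still being refined; because AI differs from traditional software, it needs a new legal framework that covers both ownership and usage

– Tennessee's new law in the U.S. illustrates the complexity of AI law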

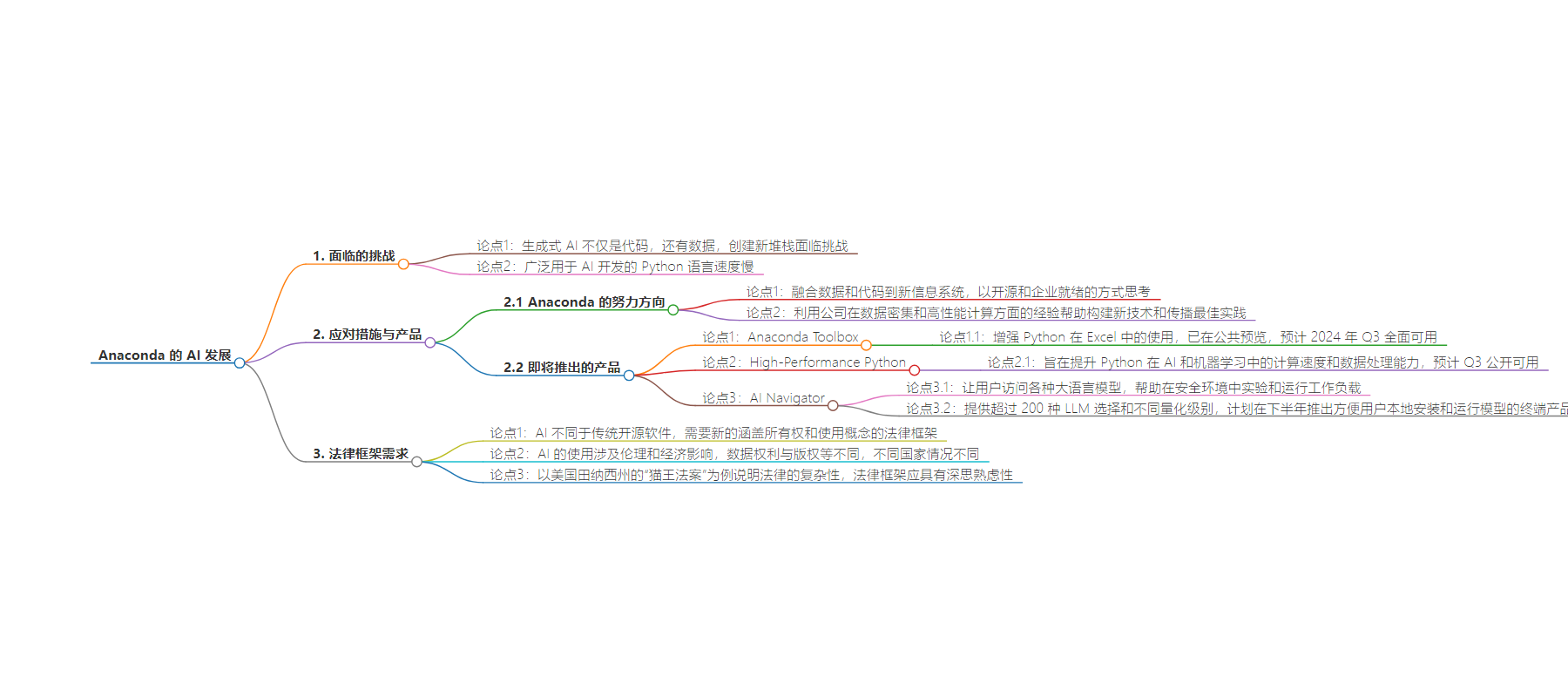

思维导图:

Article URL: https://thenewstack.io/faster-python-easier-access-to-llms-anacondas-ai-roadmap/

Source: thenewstack.io

Author: Heather Joslyn

Published: 2024/7/24 18:52

Language: English

Word count: 1,221

Estimated reading time: 5 minutes

Rating: 90

Tags: Python, AI development, large language models, Anaconda, AI platform

The original article follows.


PITTSBURGH: Generative AI isn’t just code; it’s also data. And that’s a big challenge in creating a new stack for GenAI.

Another challenge: Python, the language widely used for AI development, is notoriously slow.

Anaconda is working on both of those obstacles to generative AI innovation, according to Peter Wang, co-founder and now chief AI and innovation officer at Anaconda.

The 12-year-old company, a maker of data science tools, championed the effort to bring Python out of the scientific realm and into business data analytics. Anaconda is now working on a number of fronts to “build the operating system for AI,” as its current messaging proclaims.

At PyCon US in May, Wang told The New Stack what that entails. Formerly Anaconda’s CEO, Wang took his new role in January, when the company also announced the creation of its AI Incubator, a research and development arm that Wang leads.

Building a new AI operating system is more than just software, he said, and it will require the fusion of data and code into a new information system. It will mean new thinking about “how to do that in both an open source way and an enterprise-ready way,” Wang said. “It involves a lot of tasks and a lot of different concerns that before had always been sort of separate.”

Anaconda’s history in data-intensive, high-performance computing, as well as “how all this stuff kind of comes together in an enterprise environment,” Wang said, means the company is prepared to help build new technologies and spread best practices, and “do these other kinds of things that provide what you need as a coherent set of abstractions, which is what an operating system actually is.”

Anaconda Toolbox and AI Navigator

Right now, three Anaconda products are poised for wider availability later this year. The projects represent Anaconda’s strategy for building that “AI operating system,” Wang said.

Anaconda Toolbox, a set of tools announced last September to enhance the use of Microsoft’s Python in Excel, has been in public preview since August. Now in beta, the Excel integration is slated for general availability in Q3 of 2024, Wang said.

In June, Microsoft announced on its website that it is gradually rolling out Python in Excel to enterprise, business, education, and personal users running Current Channel (Preview) builds on Windows. “This feature is rolling out to Excel for Windows, starting with Version 2406 (Build 17726.20016),” Microsoft’s website states.

High-Performance Python, slated to be publicly available in Q3, is a specialized set of Python packages designed to facilitate high-speed computations and advanced data processing in AI and machine learning. The packages include a high-performance Python interpreter and an ecosystem that includes hardware acceleration and compiler optimizations.

The goal, Wang said, is “to improve Python itself to be more scalable and to be more efficient, especially on new hardware,” such as the new Nvidia chips built for AI workloads.

A lot of the work Anaconda supports in making Python faster, he said, “is about providing accessibility to the Python stack from lots of different places. Whether it’s from a tablet, whether it’s from the browser, whether it’s from within Excel, we really want to take the hard, gnarly stuff and make it accessible to as many people as possible, wherever they are.”

He added, “You’ll see more announcements from us throughout the year regarding our efforts on making Python faster. That’s an area that’s getting even [venture capital] investment nowadays.”

AI Navigator, a desktop application now in beta, is designed to give users access to various large language models (LLMs) and to help them experiment and run workloads in secure environments.

It’s meant, Wang said, to help create “an easier way to manage the pre-trained models and the data that is actually needed to build APIs.

“Right now, it’s very much a Wild West,” he said. “There are many places you can go and get a pre-trained model: Hugging Face, of course, is very, very well known for being a repository of all these kinds of things.”

But in talking to Anaconda’s enterprise customers, he said, the company found that they were looking for a trusted source for enterprise-ready LLMs, along with a way to impose their own governance on the models.

“So, in a way, we also are stepping into a model governance sort of capacity,” Wang said. “I think that’s absolutely just table stakes for enterprise adoption of this stuff.”

AI Navigator lets users choose from among more than 200 LLMs, each with four different quantization levels. “We’re doing a lot of the work ourselves to make them the right size and the right format to run on — on various machines, different types,” he said. “It’s actually pretty gnarly to get some big public model to run on various kinds of laptops.”
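The size problem Wang describes comes down to simple arithmetic: a model's weight memory is roughly its parameter count times the bits stored per weight, divided by eight. The sketch below is a minimal illustration under stated assumptions, not a description of how AI Navigator works. The 7-billion-parameter model, the 8 GiB laptop, and the four bit widths are hypothetical choices; the article does not say which quantization levels AI Navigator actually offers, and the estimate ignores activations, cache memory, and per-format overhead.

```python
# Hypothetical quantization levels: the article does not say which four
# levels AI Navigator offers, so these bit widths are illustrative assumptions.
QUANT_BITS = {"16-bit (fp16)": 16, "8-bit": 8, "5-bit": 5, "4-bit": 4}


def weight_memory_gib(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory needed to hold the weights alone, in GiB.

    Ignores activations, cache memory, and the per-block scale overhead
    that real quantization formats add, so treat it as a lower bound.
    """
    total_bytes = num_params * bits_per_weight / 8  # 8 bits per byte
    return total_bytes / 2**30  # bytes -> GiB


def fits_in_ram(num_params: float, bits_per_weight: float, ram_gib: float) -> bool:
    """Naive check: do the weights alone fit in the given amount of RAM?"""
    return weight_memory_gib(num_params, bits_per_weight) <= ram_gib


if __name__ == "__main__":
    params = 7e9          # hypothetical 7-billion-parameter model
    laptop_ram_gib = 8.0  # hypothetical laptop with 8 GiB of RAM
    for label, bits in QUANT_BITS.items():
        gib = weight_memory_gib(params, bits)
        verdict = "fits" if fits_in_ram(params, bits, laptop_ram_gib) else "does not fit"
        print(f"{label:>14}: {gib:5.1f} GiB -> {verdict} in {laptop_ram_gib:.0f} GiB")
```

Under these assumptions, the 16-bit version needs about 13 GiB for its weights alone and would not fit, while the 8-, 5-, and 4-bit versions all come in under 8 GiB. Real runtimes need extra headroom, so a practical fit check would be stricter than this one.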

In the pipeline, Wang said: an end-user product intended to make it easy for people to install models locally and run them “in a performant way on whatever platform. We’ll have a beta of that toward the back half of the year.”

AI Needs a New Legal Framework

As the Open Source Initiative (OSI) hones its definition of open source AI, Wang echoed the concerns of OSI CEO Stefano Maffulli and others: AI is a very different beast from anything the open source world has dealt with before.

In the case of traditional software, Wang said, “you own the software or it’s given to you in open source. And then what you do with it is a different concern from the ownership of the software.

“But with LLMs and technology, I don’t think we can actually fundamentally separate those things.”

And, of course, the usage of AI applications and their data “causes so much concern about ethics, around a lot of the economic implications.”

What’s going to be needed, he said, is a new legal framework that can encompass both the ownership and usage concepts. “They’re challenging and nuanced,” he noted. “Because it’s not software at all. It’s not just software; it is actually a lot about the data itself.

“And data rights are different than copyright and fair use, and it’s different in different countries, even in the westernized industrial world.”

The differences extend even to individual American states. Wang noted Tennessee’s new law, the so-called Elvis Act (short for Ensuring Likeness, Voice, and Image Security), which aims to protect musicians from unauthorized AI-generated soundalikes. The Elvis Act, the first law in the U.S. to combat AI voice impersonation, went into effect July 1.

Because of the complexity of AI, the legal framework built to surround it must be “contemplative,” Wang said.

“All intellectual property, from a moral rights theory perspective, is a temporary monopoly created for the purposes of incentivizing and advancing the arts — whether it’s a patent, whether it’s a trade secret, whether it’s a copyright, whatever it is,” he said. “The state is creating an artificial monopoly. That is not natural, not physical, it’s artificial for the purposes of incentivizing or creating a certain economic outcome.”

“So my sort of little soundbite is that LLMs are not new kinds of printers, new kinds of duplication technology,” Wang said. “We haven’t been here before.”
