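Baoyue Guided Reading Summary

1.

Keywords: Spring AI, Ollama, Tool Support, Function Calling, Java Developers

2.

Summary: Spring AI (1.0.0-SNAPSHOT) adds support for Ollama's new tool support feature, so Java developers can easily use it in their applications. Its strengths include easy integration and flexible configuration. The article also explains how the feature works, how to use it, and gives examples. It has some limitations, but its prospects are broad.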

3.

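Main content:

– Spring AI introduces Ollama tool support

– New feature: Ollama provides tool support for large language models

– Spring AI integrates this feature into the Spring ecosystem

– Key features

– Easy integration: register Java functions as Spring beans and use them

– Flexible configuration

– Automatic JSON schema generation

– Support for multiple functions

– Runtime function selection

– Code portability

– How it works

– Define and register custom Java functions

– The model decides to call a function and generates a JSON object

– Spring AI handles the request and returns the result

– Getting started

– Prerequisites: run Ollama and pull a supported model

– Dependencies: add the relevant dependency to the project

– Example: a simple usage example plus a link to the full code

– OpenAI compatibility

– Ollama is compatible with the OpenAI API

– It can be used by setting the relevant properties

– Limitations

– The current API does not support streaming tool calls or tool choice

– Conclusion

– Gives Java developers a new way to build AI-enhanced applications

– Lists the benefits of the feature, encourages readers to try it, and points to the official documentation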

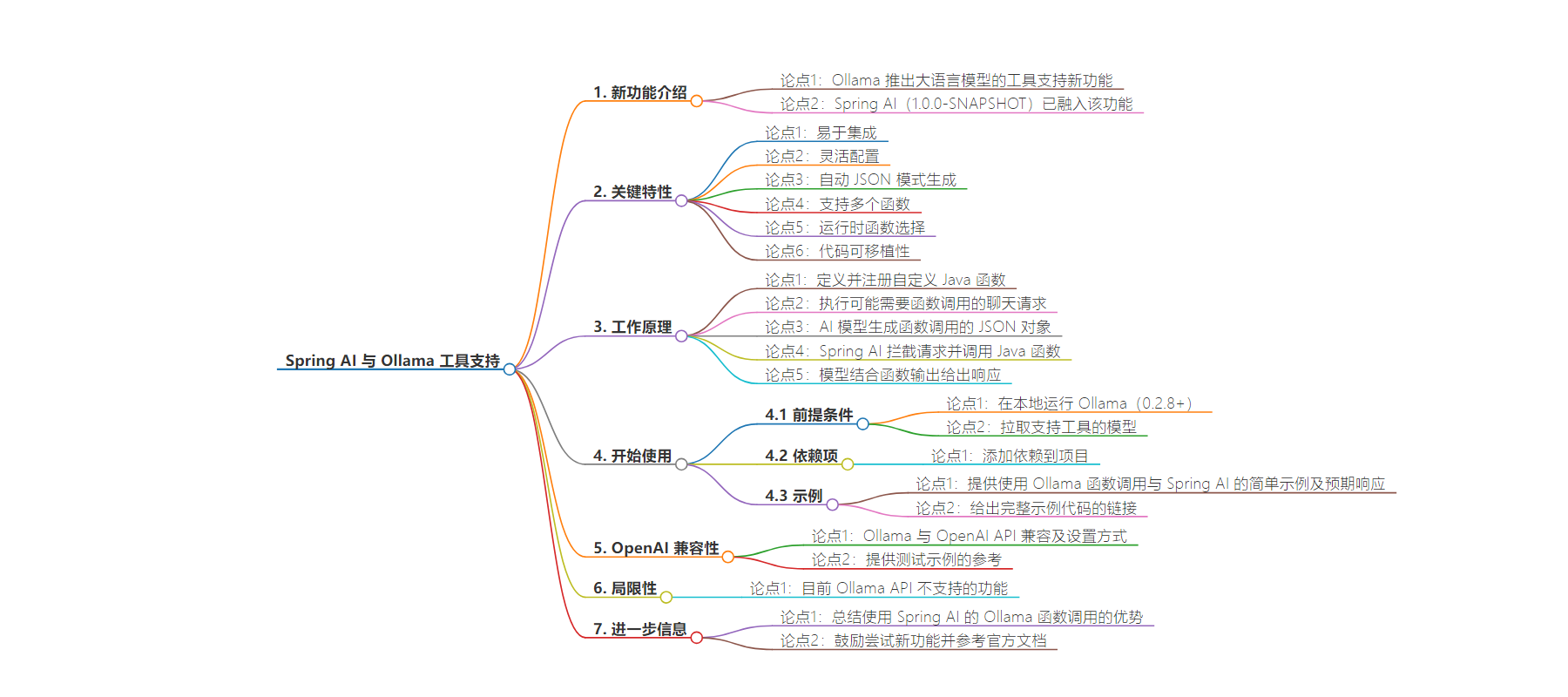

Mind map:

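Article URL: https://spring.io/blog/2024/07/26/spring-ai-with-ollama-tool-support

Source: spring.io

Author: Christian Tzolov

Published: 2024/7/26 0:00

Language: English

Word count: 825

Estimated reading time: 4 minutes

Rating: 90

Tags: Spring AI, Ollama, function calling, Java development, AI integration

The original article follows.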


Earlier this week, Ollama introduced an exciting new feature: tool support for Large Language Models (LLMs).

Today, we’re thrilled to announce that Spring AI (1.0.0-SNAPSHOT) has fully embraced this powerful feature, bringing Ollama’s function calling capabilities to the Spring ecosystem.

Ollama’s tool support allows models to decide when to call external functions and how to use the returned data. This opens up a world of possibilities, from accessing real-time information to performing complex calculations. Spring AI takes this concept and integrates it seamlessly with the Spring ecosystem, making it incredibly easy for Java developers to leverage this functionality in their applications.

Key features of Spring AI’s Ollama function calling support include:

- Easy Integration: Register your Java functions as Spring beans and use them with Ollama models.

- Flexible Configuration: Multiple ways to register and configure functions.

- Automatic JSON Schema Generation: Spring AI handles the conversion of your Java methods into JSON schemas that Ollama can understand.

- Support for Multiple Functions: Register and use multiple functions in a single chat session.

- Runtime Function Selection: Dynamically choose which functions to enable for each prompt.

- Code Portability: Reuse your application code unchanged with other LLM providers such as OpenAI, Mistral, VertexAI, Anthropic, and Groq.
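Spring AI generates the JSON schema for you. To give a feel for what that step involves, here is a stdlib-only sketch (not Spring AI's implementation) that derives a minimal schema from a Java record's components via reflection. The class name RecordSchemaSketch and the small Java-to-JSON type mapping are illustrative assumptions; the schema Spring AI actually sends will likely carry more detail.

```java
import java.lang.reflect.RecordComponent;
import java.util.StringJoiner;

// Illustrative only: builds a minimal JSON schema from a record's components.
class RecordSchemaSketch {

    // Hypothetical request type, mirroring the weather example later in the post.
    record WeatherRequest(String location, String unit) {}

    // Maps a few Java types to JSON schema type names; anything else falls back to "object".
    static String jsonType(Class<?> type) {
        if (type == String.class) return "string";
        if (type == int.class || type == long.class
                || type == Integer.class || type == Long.class) return "integer";
        if (type == double.class || type == float.class
                || type == Double.class || type == Float.class) return "number";
        if (type == boolean.class || type == Boolean.class) return "boolean";
        return "object";
    }

    // Produces {"type":"object","properties":{...}} with properties in declaration order.
    static String schemaFor(Class<? extends Record> recordType) {
        StringJoiner props = new StringJoiner(",");
        for (RecordComponent c : recordType.getRecordComponents()) {
            props.add("\"" + c.getName() + "\":{\"type\":\"" + jsonType(c.getType()) + "\"}");
        }
        return "{\"type\":\"object\",\"properties\":{" + props + "}}";
    }

    public static void main(String[] args) {
        System.out.println(schemaFor(WeatherRequest.class));
    }
}
```

The point of the design is that the record's component names and types become the argument names and types the model is asked to fill in, so the Java signature is the single source of truth.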

How It Works

- Define custom Java functions and register them with Spring AI.

- Perform a chat request that might need function calling to complete the answer.

- When the AI model determines it needs to call a function, it generates a JSON object with the function name and arguments.

- Spring AI intercepts this request, calls your Java function, and returns the result to the model.

- The model incorporates the function’s output into its response.
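The round trip described in these steps can be simulated without a model to show where each piece fits. In this sketch the tool calls are hard-coded rather than produced by Ollama, their arguments are assumed to be already parsed from the model's JSON, a plain Map stands in for Spring AI's function lookup, and the set of enabled names mimics runtime function selection. All names here are hypothetical.

```java
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.function.Function;

// Illustrative simulation of the function-calling round trip; no real model is involved.
class ToolCallLoopSketch {

    // A tool call as the model might emit it: a function name plus (already parsed) arguments.
    record ToolCall(String name, Map<String, String> arguments) {}

    // Registered functions keyed by name; a stand-in for looking functions up by bean name.
    static final Map<String, Function<Map<String, String>, String>> REGISTRY = Map.of(
            "weatherFunction",
            arguments -> arguments.get("location").contains("Amsterdam") ? "20 C" : "25 C");

    // Runs each requested call, but only if that function is enabled for this prompt.
    static List<String> dispatch(List<ToolCall> calls, Set<String> enabled) {
        return calls.stream()
                .map(call -> {
                    if (!enabled.contains(call.name()) || !REGISTRY.containsKey(call.name())) {
                        return "error: unknown or disabled function " + call.name();
                    }
                    return REGISTRY.get(call.name()).apply(call.arguments());
                })
                .toList();
    }

    public static void main(String[] args) {
        // Step 3: pretend the model asked for two calls.
        List<ToolCall> calls = List.of(
                new ToolCall("weatherFunction", Map.of("location", "Amsterdam", "unit", "C")),
                new ToolCall("weatherFunction", Map.of("location", "Paris", "unit", "C")));
        // Step 4: execute the functions; step 5 would send these results back to the model.
        System.out.println(dispatch(calls, Set.of("weatherFunction")));
    }
}
```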

Getting Started

Prerequisite

You first need to run Ollama (0.2.8+) on your local machine. Refer to the official Ollama project README to get started running models on your local machine. Then pull a tool-supporting model such as Llama 3.1, Mistral, Firefunction v2, or Command-R+, among others. A list of supported models can be found under the Tools category on the models page.
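Since tool support needs Ollama 0.2.8 or later, it can help to confirm the running version first. This is a minimal sketch assuming Ollama listens on its default port 11434 and exposes GET /api/version returning a small JSON object such as {"version":"0.3.0"}; the class name is hypothetical, and the JSON is read with a regular expression only to stay dependency-free.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: checks whether a local Ollama is new enough for tool support.
class OllamaVersionCheck {

    static final int[] MIN = {0, 2, 8};

    // True if a dotted version such as "0.3.0" is at least 0.2.8 (any non-digit suffix is ignored).
    static boolean supportsTools(String version) {
        String[] parts = version.split("\\.");
        for (int i = 0; i < MIN.length; i++) {
            int v = i < parts.length ? Integer.parseInt(parts[i].replaceAll("\\D.*", "")) : 0;
            if (v != MIN[i]) return v > MIN[i];
        }
        return true;
    }

    // Extracts the "version" value from a body like {"version":"0.3.0"}.
    static String parseVersion(String json) {
        Matcher m = Pattern.compile("\"version\"\\s*:\\s*\"([^\"]+)\"").matcher(json);
        if (!m.find()) throw new IllegalArgumentException("no version field: " + json);
        return m.group(1);
    }

    public static void main(String[] args) throws Exception {
        // Assumes the default local endpoint; adjust the URL if Ollama runs elsewhere.
        HttpResponse<String> response = HttpClient.newHttpClient().send(
                HttpRequest.newBuilder(URI.create("http://localhost:11434/api/version")).build(),
                HttpResponse.BodyHandlers.ofString());
        String version = parseVersion(response.body());
        System.out.println("Ollama " + version
                + (supportsTools(version) ? " supports" : " does not support") + " tool calling");
    }
}
```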

```
ollama run mistral
```

Dependencies

To start using Ollama function calling with Spring AI, add the following dependency to your project:

```xml
<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-ollama-spring-boot-starter</artifactId>
</dependency>
```

Refer to the Dependency Management section to add the Spring AI BOM to your build file.

Example

Here’s a simple example of how to use Ollama function calling with Spring AI:

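```java
import java.util.function.Function;

import org.springframework.ai.chat.client.ChatClient;
import org.springframework.boot.CommandLineRunner;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Description;

@SpringBootApplication
public class OllamaApplication {

    public static void main(String[] args) {
        SpringApplication.run(OllamaApplication.class, args);
    }

    @Bean
    CommandLineRunner runner(ChatClient.Builder chatClientBuilder) {
        return args -> {
            var chatClient = chatClientBuilder.build();
            var response = chatClient.prompt()
                    .user("What is the weather in Amsterdam and Paris?")
                    .functions("weatherFunction") // reference by bean name.
                    .call()
                    .content();
            System.out.println(response);
        };
    }

    // Declared at application level so the bean method signature below can refer to them.
    public record WeatherRequest(String location, String unit) {}
    public record WeatherResponse(double temp, String unit) {}

    @Bean
    @Description("Get the weather in location") // description offered to the model
    public Function<WeatherRequest, WeatherResponse> weatherFunction() {
        return new MockWeatherService();
    }

    public static class MockWeatherService implements Function<WeatherRequest, WeatherResponse> {
        @Override
        public WeatherResponse apply(WeatherRequest request) {
            // Mock data: 20 degrees for Amsterdam, 25 for any other location.
            double temperature = request.location().contains("Amsterdam") ? 20 : 25;
            return new WeatherResponse(temperature, request.unit());
        }
    }
}
```

In this example, when the model needs weather information, it automatically calls the weatherFunction bean. Here the bean returns mock values; a real implementation could fetch live weather data instead.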

The expected response looks like this: “The weather in Amsterdam is currently 20 degrees Celsius, and the weather in Paris is currently 25 degrees Celsius.”
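Because the weather function is ordinary Java, it can be exercised without a model or a Spring context. The sketch below copies the records and the mock service from the example, drops the Spring annotations, and applies the service to the two locations in the prompt. The "C" unit is an assumption: the prompt names no unit, so in practice the model chooses the arguments, and the mock simply echoes back whatever unit it receives.

```java
import java.util.function.Function;

// Standalone copy of the example's mock service, for testing it in isolation.
class MockWeatherServiceCheck {

    record WeatherRequest(String location, String unit) {}
    record WeatherResponse(double temp, String unit) {}

    static class MockWeatherService implements Function<WeatherRequest, WeatherResponse> {
        @Override
        public WeatherResponse apply(WeatherRequest request) {
            // Same mock rule as the example: 20 for Amsterdam, 25 otherwise.
            double temperature = request.location().contains("Amsterdam") ? 20 : 25;
            return new WeatherResponse(temperature, request.unit());
        }
    }

    public static void main(String[] args) {
        var service = new MockWeatherService();
        // The two calls a model would plausibly request for the sample prompt.
        System.out.println(service.apply(new WeatherRequest("Amsterdam", "C")));
        System.out.println(service.apply(new WeatherRequest("Paris", "C")));
    }
}
```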

The full example code is available at: https://github.com/tzolov/ollama-tools

OpenAI Compatibility

Ollama is OpenAI API compatible, so you can also use the Spring AI OpenAI client to talk to Ollama and use tools. To do this, use the OpenAI client but set the base URL with spring.ai.openai.chat.base-url=http://localhost:11434, and select one of the Ollama models that supports tools, for example spring.ai.openai.chat.options.model=mistral.
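To show what "OpenAI API compatible" means on the wire, the sketch below posts an OpenAI-style chat completion request with a tools array straight to Ollama using java.net.http, with no Spring involved. It assumes Ollama serves the OpenAI-compatible endpoint at http://localhost:11434/v1/chat/completions and that mistral has been pulled. The tool definition is hand-written for illustration and is not copied from what Spring AI sends.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Illustrative raw request against Ollama's OpenAI-compatible chat endpoint.
class OllamaOpenAiToolsRequest {

    // Builds an OpenAI-style body offering one function tool.
    // Assumes userMessage contains no quotes or backslashes (no JSON escaping is done).
    static String body(String model, String userMessage) {
        return """
                {"model":"%s",
                 "messages":[{"role":"user","content":"%s"}],
                 "tools":[{"type":"function","function":{
                   "name":"weatherFunction",
                   "description":"Get the weather in location",
                   "parameters":{"type":"object",
                     "properties":{"location":{"type":"string"},"unit":{"type":"string"}},
                     "required":["location"]}}}]}
                """.formatted(model, userMessage);
    }

    public static void main(String[] args) throws Exception {
        HttpRequest request = HttpRequest.newBuilder(URI.create("http://localhost:11434/v1/chat/completions"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(
                        body("mistral", "What is the weather in Amsterdam and Paris?")))
                .build();
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        // If the model decides to use the tool, choices[0].message.tool_calls lists the requested call(s).
        System.out.println(response.body());
    }
}
```

Spring AI's OpenAI client performs this request, the tool-call parsing, and the follow-up request with the function results for you; the raw version is only useful for seeing the payload.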

Check the OllamaWithOpenAiChatModelIT.java tests for examples of using Ollama over Spring AI OpenAI.

Limitations

As stated in the Ollama blog post, their API currently supports neither streaming tool calls nor tool choice.

Once those limitations are resolved, Spring AI is ready to provide support for them as well.


Conclusion

By building on Ollama’s innovative tool support and integrating it into the Spring ecosystem, Spring AI has created a powerful new way for Java developers to create AI-enhanced applications. This feature opens up exciting possibilities for creating more dynamic and responsive AI-powered systems that can interact with real-world data and services.

Some benefits of using Spring AI’s Ollama Function Calling include:

- Extend AI Capabilities: Easily augment AI models with custom functionality and real-time data.

- Seamless Integration: Leverage existing Spring beans and infrastructure in your AI applications.

- Type-Safe Development: Use strongly-typed Java functions instead of dealing with raw JSON.

- Reduced Boilerplate: Spring AI handles the complexities of function calling, allowing you to focus on your business logic.

We encourage you to try out this new feature and let us know how you’re using it in your projects. For more detailed information and advanced usage, check out our official documentation.

Happy coding with Spring AI and Ollama!